Key Takeaways

- Conversation design is critical infrastructure, because users will quickly abandon any AI tool they don’t see as trustworthy.

- Since natural language is messy and unpredictable, agents must be designed to “fail gracefully” by admitting limits and fixing misunderstandings without breaking the flow.

- The conversation is replacing static menus as the primary user interface, allowing AI to build and rebuild visual layouts in real time based entirely on what the user asks for.

Bad conversation design is easy to spot: A passenger calls an airline to rebook a flight and repeats increasingly impatient requests to a robotic voice, finally resorting to hollering “Associate! Human!” into the void.

Good conversation design, however — in which an AI agent understands that a parent is trying to book a doctor’s appointment for their child, has access to the desired doctor’s schedule, secures a time in two weeks, but also offers a sooner appointment with a different practitioner — succeeds in subtle ways.

When it works, good conversation design feels invisible, but it can be the difference between AI that people actually use and AI that frustrates and ultimately gets abandoned. The future of customer interaction depends on companies getting AI conversations right. Unfortunately, there are many ways to get them wrong.

AI can understand context, take actions, and handle real complexity in a simple back-and-forth conversation with a user. But while talking to AI is “easier” than using a system that forces humans into a series of forms and menus and fields and clicks, a natural language interface means treating conversation design not as a user interface layer on top of AI but as a core design challenge.

When AI can hold genuinely human-seeming conversations, every linguistic misstep or failed handoff feels like a broken promise in a way that a frustrated interaction with a phone tree doesn’t.

“When conversation design is done well, people complete tasks faster, adoption goes up, support costs go down, and the AI delivers the efficiency and productivity gains leaders were promised,” said Liz Trudeau, SVP of User Experience at Salesforce. That success shows up in KPIs like task completion, sustained usage and adoption, deflection of support volume, and time to resolution. When conversation design is bad, the interactions are frustrating. “Users lose trust; they stop using the agent,” said Trudeau. “The business ends up paying for AI that looks impressive in a demo but delivers no meaningful return.”

The Grammar of Trust

When customers interact with AI using natural language, they bring certain expectations. “By default, they interpret the system’s responses the same way they would interpret a human’s,” said Trudeau.

Conversation design — part linguistics, part psychology, part user experience — is the discipline that tackles this challenge. Designers create natural back-and-forth rhythms, ensure consistent logic users can rely on, clarify boundaries (a healthcare agent helps with insurance coverage, not diagnosis), and craft distinct personalities. A financial services bot that’s too casual with fraud cases seems incompetent. A healthcare agent that’s overly formal feels cold.

“Conversation design shapes how an agent reasons, how it aligns with workflows, how it handles uncertainty,” said Yvonne Gando, Senior Director of User Experience at Salesforce. “It has more in common with product architecture than copy editing.” In other words, conversation design is critical infrastructure for any company deploying AI agents that users trust.

To build this infrastructure (and to build that trust), conversation designers focus on four core principles:

First is turn-taking: the natural rhythm of when to speak, listen, or pause. Second is predictability: responses that follow consistent logic and align with what users expect. Third is a defined role and goal: the AI needs to know what the user is trying to accomplish and what part it should play. Finally, there is persona: a recognizable voice and pattern of behavior that transforms faceless systems into something users can understand and rely on.

These principles show up in subtle ways. Consider turn-taking: If the user and the AI don’t go back and forth enough, the interaction feels abrupt; if they have to go back and forth too much, users lose patience. Agents that over-confirm every detail quickly irritate users who just want to get things done.

“The first thing is to expect that conversations don’t go as planned,” said Gando. Unlike traditional software where errors are bugs to fix, conversations are inherently unpredictable. Users phrase things unexpectedly. They change topics midstream. They assume context the AI doesn’t have.

So modern conversation designers build in what they call “repair strategies.” When an agent hits a knowledge gap, it acknowledges its limitations and hands off gracefully to a human. When business policies require human approval, the system should seamlessly shift channels. The goal isn’t preventing problems but handling them when they occur.
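As a rough illustration of the repair strategies described above, the sketch below routes a single turn to one of three outcomes: answer, ask a clarifying question, or hand off to a human. Everything in it is an assumption made for illustration, including the `Turn` fields, the thresholds, and the wording of the messages; it is not how any Salesforce product decides.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ANSWER = "answer"
    CLARIFY = "clarify"
    HANDOFF = "handoff"


@dataclass
class Turn:
    """One interpreted user message (all fields are hypothetical)."""
    intent: str                         # what the agent thinks the user wants
    confidence: float                   # 0.0-1.0 score from an upstream classifier
    needs_human_approval: bool = False  # set by business policy


# Illustrative thresholds; real values would come out of testing.
ANSWER_THRESHOLD = 0.75
CLARIFY_THRESHOLD = 0.40


def choose_repair(turn: Turn, known_intents: set[str]) -> tuple[Action, str]:
    """Pick a next step and a user-facing message for one turn."""
    if turn.needs_human_approval:
        # Policy requires a person: shift channels and carry the context along.
        return Action.HANDOFF, "This needs sign-off from a teammate. I'm passing along what you've told me."
    if turn.intent not in known_intents:
        # Knowledge gap: admit the limit instead of guessing.
        return Action.HANDOFF, "That's outside what I can help with. Let me connect you with a person."
    if turn.confidence >= ANSWER_THRESHOLD:
        return Action.ANSWER, f"Sure, working on: {turn.intent}."
    if turn.confidence >= CLARIFY_THRESHOLD:
        # Possible misunderstanding: check before acting.
        return Action.CLARIFY, f"Just to check, are you asking about {turn.intent}?"
    return Action.HANDOFF, "I'm not sure I understood. Let me connect you with a person."
```

The ordering is the design choice worth noting: policy and scope checks run before any confidence check, so a confident classifier cannot talk the agent into something it should not handle alone.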

When these elements fail, trust breaks. It’s hard to have confidence in an agent that forgets what you told it three messages ago, that gives no sign it’s working on a problem, or that is too obsequious. Those failures remind users that the conversation isn’t “real,” which is why testing and constant iteration are key parts of the development process. One partner told Gando that building AI agents is 80% testing, with designers now constantly refining based on real user interactions.

“Every single micromoment affects how the user perceives the interaction,” Gando said. The goal isn’t perfection. It’s building systems that fail gracefully, learn continuously, and know their limits. “We need to teach machines how to speak human.”

The Architecture of Maybe

If interactions with computers are now more probabilistic than deterministic (we don’t always know how the machine will respond), then the visual layer has to change, too — a shift that is driving a fundamental rethinking of how enterprise design systems can work. Salesforce’s answer is Salesforce Lightning Design System 2. Instead of rigid, prebuilt components, SLDS 2 provides what designers call “primitives,” or basic, unstyled blocks of UI code that AI can assemble based on context. It’s a design system built for flexibility, where components can adapt mid-conversation rather than staying locked in place.

Here’s how it works: A user says, “Show me sales trends for the Midwest,” and the AI builds a dashboard on the fly — choosing visualizations, arranging components, making visual decisions that used to require human designers. There’s no designer creating pixel-perfect mock-ups, no developer implementing approved layouts, no design review, no consistency check. The conversation drives what appears on screen, and traditional design systems weren’t built for this kind of real-time generation.

“Traditional design systems were like using Legos to build something and then gluing them all together,” said Adam Doti, VP and Principal Architect for Design Systems at Salesforce. “Now we need those Legos to stay separate, so when the LLM goes to assemble experiences on the fly, you have higher-fidelity output, not some chunky, pixelated thing that’s locked in place.”

The shift from deterministic to probabilistic design isn’t just technical. It’s philosophical, and design teams are now wrestling with how much grounding and what guidelines to give AI. Too little, and you get what Doti calls his “dystopian future,” where AI optimizes everything down to the fastest-performing interfaces. “We’d end up with the same visual design, like purple gradients everywhere and the same boring brand because AIs would just be reinforcing each other’s default styling over and over and over,” he said.

Product managers are already seeing this convergence. “They come to me saying everything looks the same,” Doti said. “The padding is all the same, the font treatments are the same. Until you ground the AI in your specific design system and visual language backed by a styling API, it defaults to this generic middle.”

The solution requires a new design system architecture for an agentic future, consisting of design knowledge and grounding, as well as design system expert agents to support what Doti’s team calls “guardrails with flexibility.” Accessibility standards, brand requirements, and experience guidelines remain inviolate, and AI works within those constraints.
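One way to picture “guardrails with flexibility” is a check that runs on whatever layout the model proposes before it reaches the screen. The sketch below is only an illustration: the primitive names and the layout format are hypothetical and are not the SLDS 2 API. The contrast calculation follows the WCAG 2.x relative-luminance formula, with 4.5:1 as the AA minimum for normal-size text.

```python
ALLOWED_PRIMITIVES = {"box", "text", "chart", "table"}  # hypothetical names
MIN_CONTRAST = 4.5  # WCAG 2.x AA minimum for normal-size text


def _channel(c: int) -> float:
    """Linearize one 0-255 sRGB channel (WCAG 2.x relative luminance)."""
    s = c / 255
    return s / 12.92 if s <= 0.03928 else ((s + 0.055) / 1.055) ** 2.4


def luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """Contrast ratio between two colors, always >= 1."""
    hi, lo = sorted((luminance(fg), luminance(bg)), reverse=True)
    return (hi + 0.05) / (lo + 0.05)


def check_layout(components: list[dict]) -> list[str]:
    """Return guardrail violations for a model-proposed layout (empty list = OK)."""
    problems = []
    for i, comp in enumerate(components):
        if comp["type"] not in ALLOWED_PRIMITIVES:
            problems.append(f"component {i}: unknown primitive {comp['type']!r}")
        if "fg" in comp and "bg" in comp:
            ratio = contrast_ratio(comp["fg"], comp["bg"])
            if ratio < MIN_CONTRAST:
                problems.append(f"component {i}: contrast {ratio:.2f} below {MIN_CONTRAST}")
    return problems
```

The model stays free to choose and arrange components; the check only rejects results that break a fixed rule, which is roughly the split between flexibility and guardrails that the passage describes.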

This architectural shift enables conversational interfaces to actually deliver on their promise. Developers are already using vibe coding to build entire applications through conversation — telling AI to “create a dashboard that tracks customer engagement across regions” or “build me a form that collects warranty claims.”

The AI generates the interface in real time, pulling from those separated Lego blocks to assemble something that matches the request. When a user later says, “Drop that column and show me trends instead,” the system doesn’t just return an error or static alternative. It reconstructs the interface on the fly, sliding away the column, adding a trend visualization, and maintaining the flow. Grounded in Agentic Enterprise-grade user experience patterns, guidelines and best practices, the conversation drives the interface, not the other way around.

The Slack Laboratory

Some of what Salesforce is learning about communication design comes from Slack, which processes billions of workplace messages daily, a massive experiment in how humans actually communicate at work. “My team is looking for those best moments in Slack that need to be brought into Salesforce and those moments in Salesforce that need to be brought into Slack,” Doti said. Now the platform faces a new challenge: integrating AI agents into these conversations smoothly and transparently.

“One of our values as designers is building trust,” said Miguel Fernandez, VP of Design at Slack. “You have to make sure that you are helping people understand what is an actual human interaction versus what is an interaction with a computer.”

Again, the details are subtle but essential. Humans get profile pictures and pronouns. Agents get app badges and disclosure labels. The typing indicator shows when someone — or something — is working on a response. Status updates show what the agent is doing — “searching documents,” “gathering context” — without interrupting flow.

“A lot of trust is built by that type of transparency,” Fernandez said. “Making the actions visible helps people understand what’s happening.”

When Slack first introduced agents into channels, for example, latency became an immediate problem. In synchronous workplace conversations, responses need to be “snappy.” But agents need time to gather context. They can be engineered to work faster, yes, but it’s also important to show the work happening, offering interim updates explaining that the agent is searching documents, gathering context, checking with other systems, and so forth. It’s easier to be patient when you know more about what’s happening behind the scenes.
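The interim-update pattern can be sketched as a generator that announces each step before doing the slow work. This is a simplified, synchronous illustration with made-up step functions, not Slack's implementation; a real agent would stream these events to the client as they are produced.

```python
from typing import Callable, Iterator

# A step is a label shown to the user plus the work to run (both hypothetical).
Step = tuple[str, Callable[[dict], None]]


def run_with_status(steps: list[Step]) -> Iterator[tuple[str, str]]:
    """Yield ("status", label) before each step, then ("answer", text) at the end."""
    context: dict = {}
    for label, work in steps:
        yield ("status", label)  # emitted before the slow work starts
        work(context)
    yield ("answer", context.get("answer", "Sorry, I couldn't find that."))


# Stand-ins for slow operations such as search or retrieval.
def search_documents(ctx: dict) -> None:
    ctx["docs"] = ["printer-setup.md"]


def gather_context(ctx: dict) -> None:
    ctx["answer"] = f"See {ctx['docs'][0]}"


DEMO_STEPS: list[Step] = [
    ("searching documents", search_documents),
    ("gathering context", gather_context),
]
```

Iterating over `run_with_status(DEMO_STEPS)` produces the two status events first and the answer last, so the interface always has something to show while the work is in progress.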

An agent’s persona varies depending on its role. Slackbot can be cheerful and witty — that’s Slack’s brand. The Salesforce Employee Agent, which also lives in Slack, helps people find information (How do I connect to a new printer? Where do I sign up for commuter benefits?) and is more straightforward. “Each agent needs a voice and tone that reflects who it represents and who it’s talking to,” Fernandez said. “This context can determine its personality.”

The “multiplayer” nature of Slack is one of its key advantages, but it also brings complexity. Unlike ChatGPT, where interactions are 1-to-1, Slack conversations involve multiple humans and potentially multiple agents collaborating simultaneously.

“I could be talking with you, with many of my peers, and with an agent at the same time, and we can all be collaborating,” he said. “It becomes like one more actor in a collaboration.”

There are still some fundamental questions to be answered. What happens when agents talk to each other in view of humans? Should agents use emoji reactions? Voice interactions present another unsolved challenge — how does the dynamic change when people speak to agents instead of typing?

“We’re not designing agents to impersonate humans,” Fernandez said. “We’re designing them to be authentic representatives of their function.”

The Bottom Line

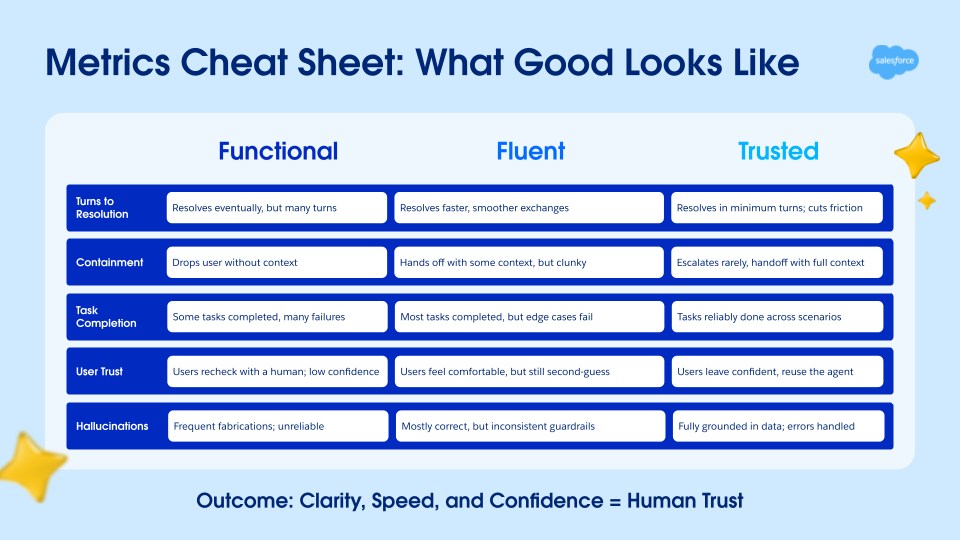

Companies that get this right treat conversation design as core infrastructure from the start. They establish clear ownership and invest in shared evaluation systems before building anything. For example, teams might track containment rate (how often AI handles requests independently), turns to resolution (the number of messages required to accomplish a task), and repair frequency (how often the agent has to recover from a misunderstanding). Companies that struggle fragment responsibilities across teams and build impressive demos without foundational conversation design principles in place.
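To make those three metrics concrete, here is a minimal sketch of how they might be computed from conversation logs. The record fields are hypothetical, and the definitions are assumptions chosen for illustration: "contained" means resolved without escalation, and repair frequency is counted as repairs per conversation. Teams may well define them differently.

```python
from dataclasses import dataclass


@dataclass
class Conversation:
    """Minimal log record; field names are hypothetical."""
    turns: int       # messages exchanged before the conversation ended
    resolved: bool   # the user's task was completed
    escalated: bool  # a human had to take over
    repairs: int     # times the agent had to recover from a misunderstanding


def summarize(conversations: list[Conversation]) -> dict[str, float]:
    """Compute the three metrics over a batch of logged conversations."""
    if not conversations:
        raise ValueError("need at least one conversation")
    total = len(conversations)
    contained = [c for c in conversations if c.resolved and not c.escalated]
    resolved = [c for c in conversations if c.resolved]
    avg_turns = sum(c.turns for c in resolved) / len(resolved) if resolved else float("nan")
    return {
        "containment_rate": len(contained) / total,
        "avg_turns_to_resolution": avg_turns,
        "repairs_per_conversation": sum(c.repairs for c in conversations) / total,
    }
```

Computing all three from the same log records is one way to get the shared evaluation system the paragraph mentions, since every team then reads the numbers off the same definitions.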

The difference shows up everywhere, says Gando — in customer satisfaction scores, in support costs, in adoption rates. When it works, teams use the same language when discussing agent quality. New AI experiences ship faster because they’re not reinventing tone, flows, or safety scaffolding each time. Customers experience interactions that feel crisp and accurate rather than hesitant — or unduly confident.

The companies that master conversation design won’t just have better AI. They’ll have transformed the fundamental relationship between their business and their customers. Every interaction becomes a moment to deepen the relationship rather than leaving the customer barking at a recalcitrant machine.

Mastery in this arena will be a moving target. As AI grows more capable, as conversations become more complex, as multiple agents learn to work together, the principles of good conversation design will continue to evolve. What worked for chatbots won’t work for agents that can take actions. What works today might not work when agents are talking to each other as much as they are to humans.

“This space is new, and we are learning more every day,” said Gando. “What matters is that we treat conversation design as core and foundational to how we build AI experiences.”

Go deeper:

- Unlocking the power of conversation in Slack

- What a broken conversation with AI can tell you (and how to fix it)