A Surprising Collaboration: Competitors Work Together to Solve Ethical Tech Challenges

Sammy Spiegel

Quick Take: In 2018, as the world put a heightened focus on the ethical use of emerging technology, Salesforce partnered with the World Economic Forum to start the Responsible Use of Technology Project, which brings together stakeholders from industry, non-profit organizations, governments, and academia to solve today’s most pressing ethical and responsible innovation challenges — and act as a powerful force for good.

On a cloudy Friday morning in November 2018, Brian Green, Director of Technology Ethics at the Markkula Center for Applied Ethics at Santa Clara University, sat outside ‘The Centre for the Fourth Industrial Revolution,’ a cream-colored building in San Francisco’s Presidio. He didn’t know what to expect.

To the left stood the Golden Gate Bridge. To the right, more than 40 leaders from industry, non-profits, governments, and academia had gathered to grapple with their role in ethical tech.

At the time, tech ethics dominated the news cycle. Yet, while many — including Green — acknowledged there was a problem, no one knew where to start.

Salesforce was among the organizations contemplating what role it should play. Looking to drive its internal conversations forward, Salesforce turned to its long-time partner, the World Economic Forum, to co-host an inaugural convening on technology and ethics. They came to the right place, says Kay Firth-Butterfield, now Head of Artificial Intelligence and a member of the Executive Committee at the World Economic Forum (WEF).

“This is what we do,” said Firth-Butterfield. “WEF is a global organization for public-private cooperation that convenes people to have conversations and think about the world, to solve problems that impact everyone.”

A day unlike any other

Green walked inside and, to his delight, entered a lively meeting room full of people who were taking this problem seriously and were eager to make things better. Four hours, three working groups, and hundreds of Post-its later, the leaders were so inspired that they didn’t want to break for lunch.

The convening planted a seed for what would become a fruitful movement — 15 stakeholders, some with competing agendas, all focused on solving tech’s biggest challenges impacting their businesses, customers, constituents, and students.

The World Economic Forum and Salesforce, alongside companies like Microsoft, Deloitte, and Workday, as well as institutions like the United Nations, Business for Social Responsibility, the Office of the High Commissioner for Human Rights, the Omidyar Network, and the Markkula Center for Applied Ethics at Santa Clara University, created something concrete: the Responsible Use of Technology Project.

Seeking to establish a multi-stakeholder group that could help propel the industry forward, Salesforce provided the sustained investment needed to get the effort off the ground: two full-time employees, paid by Salesforce, who would become full-time leaders of the Responsible Use of Technology Project. These secondees would engage affiliated organizations and help shape the strategic direction and deliverables of the project.

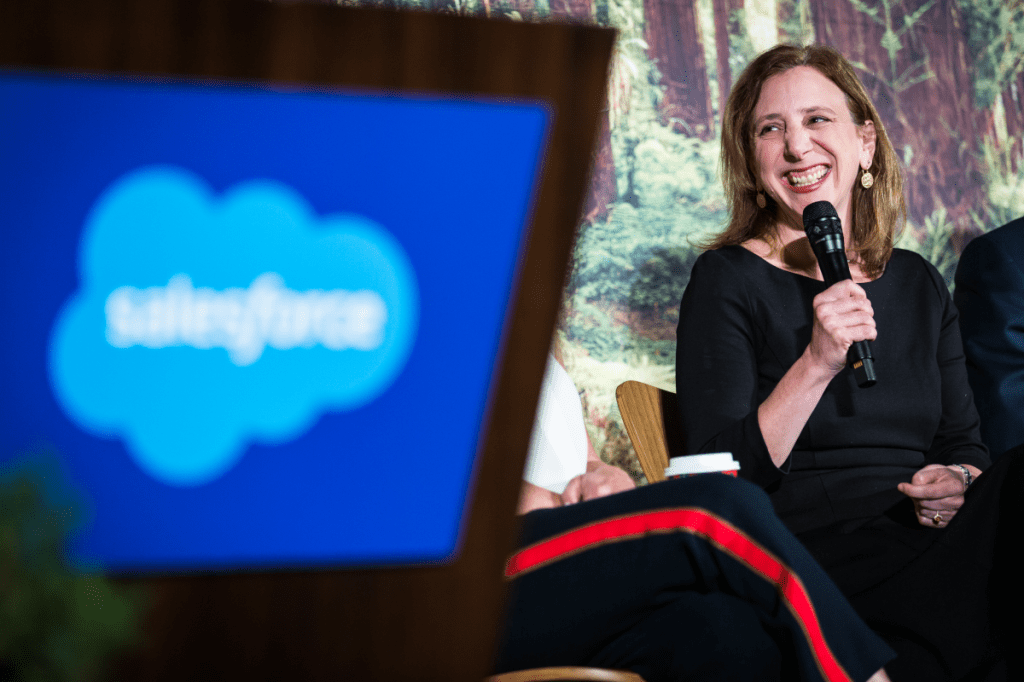

Salesforce also committed several staff members to the project’s advisory boards, including its Chief Ethical and Humane Use Officer, Paula Goldman, who joined the project steering committee.

Joining forces for good

Microsoft, another prominent force in the responsible AI and tech space, was also one of the early stakeholders of the project.

“The formation of the Responsible Use of Technology Project was very timely, as companies all over the world were looking to put principle into practice,” said Steve Sweetman, Principal Program Manager, Azure AI, Microsoft.

This kind of collaboration across wide swaths of the industry was unusual. Many of the groups and professionals involved knew each other but, given the competitive landscape, had previously worked independently on responsible innovation in tech. The format and support of WEF, however, created a space where everyone felt they could work together for the greater good.

Sweetman continued, “The World Economic Forum has fostered an open and collaborative environment where we can learn together, and share those learnings globally.”

Even more unusual is the transparency and level of engagement each group, including competitors, brings to the discussions. Participants say it is the forum’s culture that enables this kind of collaboration.

Steven Mills, Chief AI Ethics Officer at Boston Consulting Group, explained, “I’ve never heard anything outside the forum repeated. There is this amazing culture within the group that’s been fostered to engage people and make it a safe place for folks. That’s really the power of it, in many ways.”

Turning good intentions into concrete action

Daniel Lim, a Senior Director of Experience Design at Salesforce who was seconded to the WEF, took the lead on the Responsible Use of Technology Project in its second year. His first assignment after inheriting the effort was clear: keep things moving and build on what had already been developed.

“My immediate challenge was how to keep the momentum of the group and cultivate even more collaboration and concrete action,” said Lim.

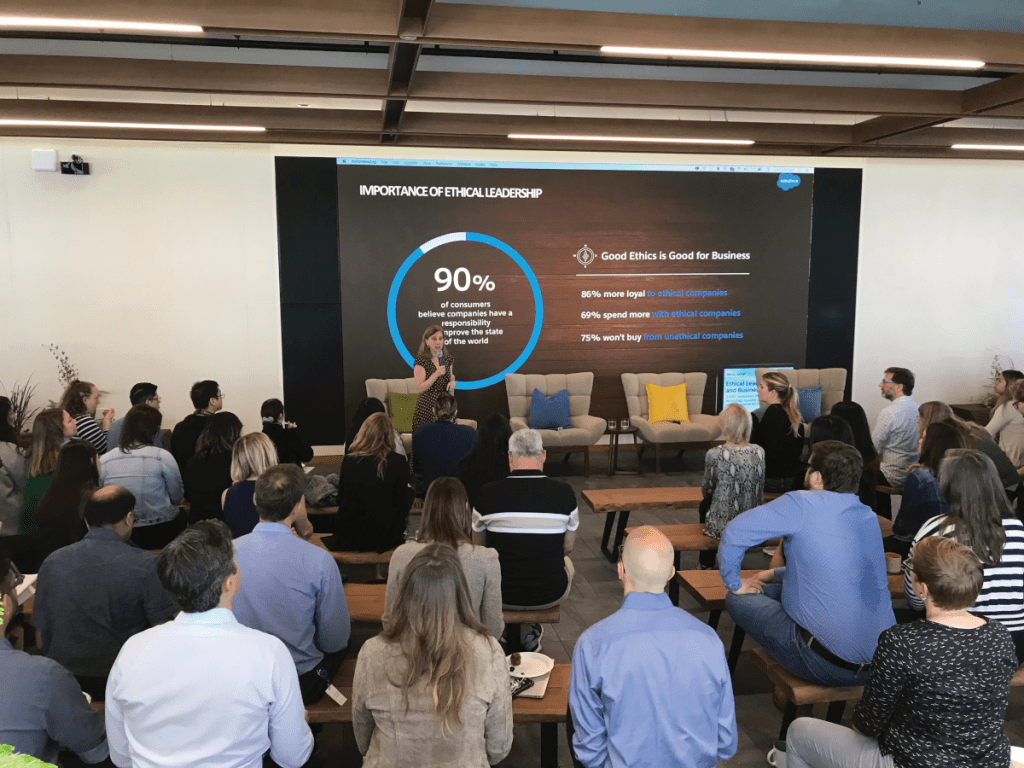

The initial working group felt that many organizations had established ethical tech principles but hadn’t cracked the code on operationalizing them in their work. It was this challenge that the WEF group felt uniquely positioned to help solve.

To do so, the group outlined three priority workstreams: education and training, organizational design, and the product lifecycle.

Workstreams in action: Delivering inspiration for ethics in technology

The group first prioritized finding ways to help organizations train their workforce on spotting ethical challenges.

“Everyone wants to do the right thing, but they don’t always know where to start. We focus on helping teams think about the unintended consequences of the technologies they’re building and come up with creative ways to mitigate those risks,” says Paula Goldman, Chief Ethical and Humane Use Officer at Salesforce, and steering committee partner on the project.

Salesforce found a natural way to help. Trailhead, the company’s free online learning platform, could be used internally to train Salesforce employees to spot bias in its technology, and externally to help other employers and employees skill up on the topic. The Responsible Use of AI training aims to teach people how to remove bias from data and algorithms and how to incorporate ethics into their work. It has now been taken by over 21,000 people worldwide.

Another workstream focused on change management, which is critical to an organization’s adoption of ethical technology practices. To that end, the group collaborated on a first-of-its-kind framework centered on behavioral economics principles, called Ethics by Design, which outlines how businesses can structure their organizations, change company culture, and promote more ethical behavior with technology.

Said Lim, “We started writing these case studies to highlight organizations that were doing something substantive around responsible tech and responsible innovation. Deep dives, like how they govern themselves, what culture they have inside their organization, and what products they have created.”

One prominent example was a case study on Microsoft, which shared four key lessons the company learned while designing responsible tech, starting with culture change.

The last workstream was around building ethics into a company’s products and services. This includes incorporating steps in the product development process to mitigate potential risks and ensure ethical guardrails are embedded in products and features.

This work came in handy during the pandemic. Salesforce had launched Vaccine Cloud, a product aimed at helping public health agencies distribute vaccines: a noble effort, though not without ethical risks.

“If sign-ups can only be performed online or via smartphone, and are only available in English, there is a serious risk of marginalizing those without access to technology, those who are less digitally literate, non-English speaking populations, and those who are only able to use lower-tech options,” wrote Rob Katz, Senior Director, Ethical & Humane Use at Salesforce, in a recent blog post about the project.

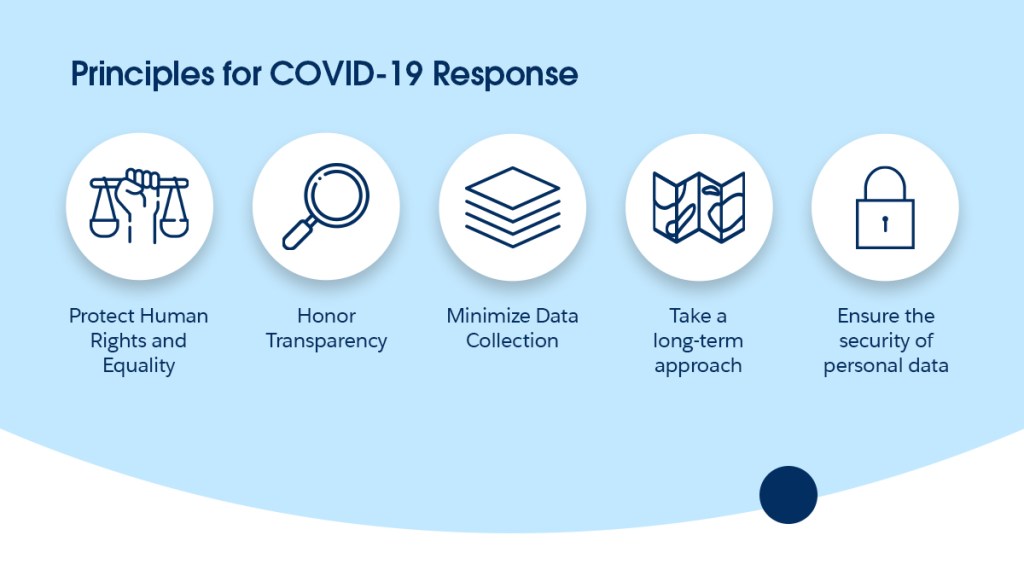

Following the launch, Salesforce presented its learnings from the development process to the WEF project community, including its Ethics by Design approach with Work.com, the tools it used for risk assessment and educating customers, and the principles it developed for responding to COVID-19.

Microsoft also hosted a workshop and presented its approach to responsible product innovation.

“We don’t see this competitively,” said Kathy Baxter, Principal Architect, Ethical AI Practice, Salesforce. “There is a spirit of feedback among those of us in this field and WEF provides a platform for us to all share our learnings. We all want a better society and we know this type of work is for the greater good.”

These papers, case studies, and workshops aren’t just exploration, either. They aim to set the global agenda for responsible technology and provide a blueprint for organizations that lack the resources of companies like Salesforce or Microsoft.

“The idea is that companies of all sizes and in all industries could potentially replicate and try to do some of this themselves and use what we have created and shared globally,” Lim said. “Our ultimate goal is to create a global community for organizations to learn how to be more ethical around technology.”

Will Griffin, Chief Ethics Officer at enterprise AI startup HyperGiant, agrees.

“One of the primary benefits of being involved with large, industry-leading companies is that it helps us assess whether our process for embedding ethics into our use case workflows will actually scale as we grow,” he said. “As an early stage AI company, being part of the WEF community is tremendously valuable to us.”

What’s next for responsible innovation

The Responsible Use of Technology Project continues to work toward its goals, knowing that the work is far from finished.

The WEF project helped define the scope of ethical technology to include the responsible design, development, deployment and use of technology, says Lim, but he predicts the scope will continue to expand.

“With the rise of ESG (Environment, Social, and Governance), there is a need to expand our scope to include responsible investment in technology as well.”

The group is looking for additional ways to operationalize ethics in tech and begin changing the way that companies are innovating for the future.

Says Lim, a Fellow for Artificial Intelligence & Machine Learning at the Centre for the Fourth Industrial Revolution, “We’re excited about the prospect of building a responsible technology maturity model: a concrete way to translate commonalities and lessons learned from our organizations’ journeys into a framework for other organizations to adopt in their own ethical journeys.”

As technology continues to evolve and companies push products further, the WEF team hopes its work will provide companies, and end users, the resources and knowledge needed to ensure technology is built ethically and deployed responsibly.

Get involved in the Responsible Use of Technology project here. Read about the group’s three predictions for the future of responsible technology here.