We’re living in an era of extraordinary AI advancement. Generative models have evolved from novelties to necessities almost overnight, and they can now draft complex briefs, summarize nuanced customer interactions, and reason across text, images, and code. But while large language models (LLMs) can deliver high-quality outputs, those outputs are not always optimized for the best business outcomes and, worse, are not delivered consistently. That inconsistency makes them unreliable for business leaders looking to deploy AI agents within the enterprise.

At the heart of the issue is hallucination: when a model confidently generates false, misleading, or entirely fabricated information. In consumer settings, these mistakes can be meme-worthy. In the enterprise, they raise red flags. According to The New York Times, newer AI systems hallucinate more, not less, than older models, at rates as high as 79% in some tests. That’s not just ironic; it points to a deeper flaw in how we measure and understand intelligence in AI.

At Salesforce, we’ve studied this challenge through the lens of real-world business use and identified a pattern that the industry refers to as jagged intelligence. It describes the uneven capabilities of today’s models: systems that can excel at complex, high-level tasks, yet stumble on more basic ones. It’s like hiring an intern who writes brilliant code but forgets to save the file. An LLM can accurately summarize a multi-threaded support case, but follow up with an irrelevant product recommendation. It can cite policy documents chapter and verse, but miss that it’s referencing outdated guidance.

This unevenness creates a core tension — AI that seems smart enough to believe, but not consistent enough to fully trust.

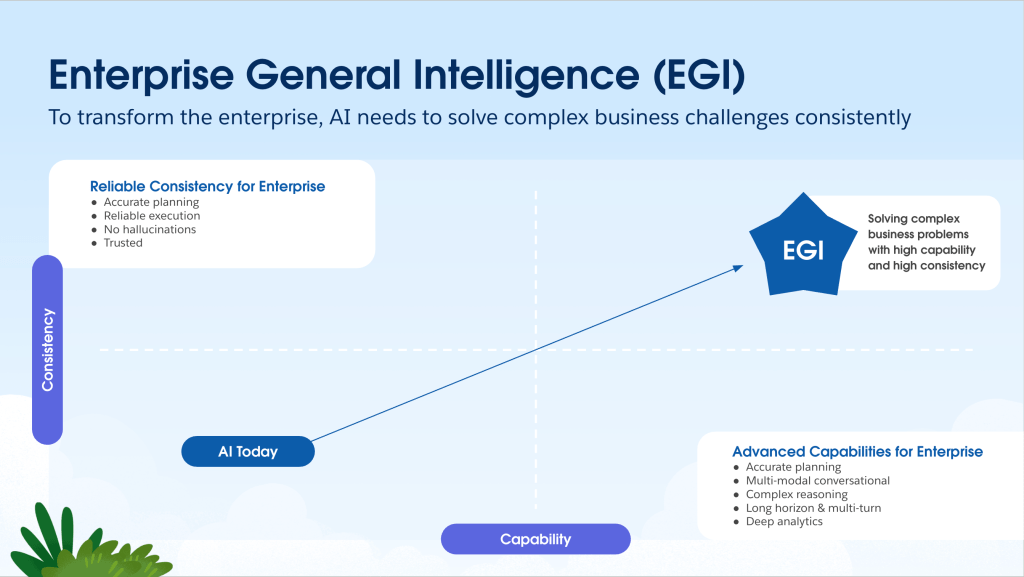

Tensions around hallucinations stand in sharp contrast to the ambiguous promise of artificial general intelligence (AGI) that so often dominates industry conversations. That’s why we believe it won’t be AGI, but Enterprise General Intelligence (EGI) that represents a tangible North Star: AI purpose-built for business, designed not only for advanced capability, but for consistency — delivering reliable performance, seamless integration, and trustworthy outcomes across complex systems.

Enterprise AI requires both high capability and high consistency

Much of the industry’s attention has gone to AI capability, but LLMs’ inconsistency poses a substantial obstacle for enterprises trying to get their AI deployments up and running. It is also a real risk: eroded trust, damaged customer relationships, and potential financial or compliance consequences. While CIOs and IT leaders are enthusiastic about adopting agentic systems, they remain hesitant about widespread deployment because of these inconsistencies. Demos and lab results are proving insufficient as tests of real-world behavior. Enterprises need a platform that ensures reliability, predictability, and accountability when interacting with customers, one that builds an enterprise-level architecture around these powerful AI models.

At Salesforce, we see EGI as a measurable goal: we focus on a robust set of AI tools and frameworks to achieve best-in-class performance and reliability in business-specific roles. This is how we create a path to a trusted digital labor future, with humans at the helm.

Closing the gap: Turning AI’s potential into reliable performance

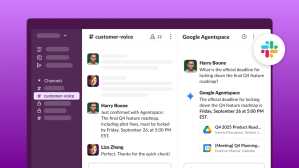

At Salesforce, solving this challenge is a top priority. Agentforce, the agentic layer of our deeply unified Salesforce Platform, is designed to power a limitless workforce of AI agents across every workflow, role, and industry. A core component of this platform is the evolving Trust Layer, which ensures agents are deployed with the safety, consistency, and reliability that enterprises demand. The Trust Layer includes AI-specific guardrails such as toxicity detection, PII redaction, and grounding of AI outputs in company-specific data. Now featuring updates for enhanced prompt injection detection and toxicity detection, the Trust Layer actively scans both incoming prompts and generated LLM responses for harmful content. This immediate toxicity scan yields a confidence score indicating the probability of inappropriate content. The system flags content by distinct toxicity category, so that flagged content can be stored and reviewed within Salesforce’s Data Cloud.
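
The scan-score-flag flow described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the category names, the `guarded_exchange` function, and the toy keyword scorer are hypothetical stand-ins, not the Trust Layer's actual API or classifier, and the toy score is a simple token ratio rather than a calibrated probability.

```python
from dataclasses import dataclass

# Hypothetical category lexicons. A production guardrail would use a trained
# classifier that returns a calibrated probability per category.
CATEGORY_TERMS = {
    "insult": {"idiot", "stupid"},
    "violence": {"attack", "destroy"},
}


@dataclass
class ScanResult:
    source: str    # "prompt" or "response"
    scores: dict   # category -> score in [0, 1]
    flagged: list  # categories whose score met the threshold


def score_text(text):
    """Toy scorer: fraction of tokens that match each category lexicon."""
    tokens = [t.strip(".,!?").lower() for t in text.split()]
    total = max(len(tokens), 1)
    return {
        category: sum(t in terms for t in tokens) / total
        for category, terms in CATEGORY_TERMS.items()
    }


def scan(source, text, threshold):
    """Score one piece of text and list the categories at or above threshold."""
    scores = score_text(text)
    flagged = sorted(c for c, s in scores.items() if s >= threshold)
    return ScanResult(source, scores, flagged)


def guarded_exchange(prompt, generate, threshold=0.1):
    """Scan the prompt, call the model only if it is clean, then scan the reply.

    Returns (reply_or_None, audit_log). The audit log keeps every scan so
    flagged content can be stored and reviewed later.
    """
    audit_log = [scan("prompt", prompt, threshold)]
    if audit_log[0].flagged:
        return None, audit_log
    reply = generate(prompt)
    audit_log.append(scan("response", reply, threshold))
    if audit_log[1].flagged:
        return None, audit_log
    return reply, audit_log
```

Here `generate` is any callable that maps a prompt to a response, so the same wrapper could sit in front of different models. Keeping both scans in one audit log mirrors the idea of reviewing flagged content by category after the fact.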

Building on Data Cloud, our Atlas Reasoning Engine improves agents’ performance by enabling proactive, data-driven actions and highly accurate information retrieval, which strengthens an agent’s ability to handle dynamic and complex business tasks. Together, the Trust Layer and Atlas Reasoning Engine provide a secure foundation for businesses to address jagged intelligence. Salesforce AI Research is keeping Agentforce ahead of the curve by redefining what is possible in enterprise AI.

With Agentforce, businesses are already building this foundation for reliability and consistency, and they are seeing meaningful results.

For instance, 1-800Accountant implemented Agentforce to deflect a projected 50% of its incoming inquiries, setting the company up to scale well beyond tax season. Because Agentforce reasons across trusted internal and external data, including Salesforce data, internal guidebooks, IRS.gov, and more, customers receive tailored, timely answers 24/7. Initial results exceed expectations: Agentforce autonomously resolved 70% of chat engagements during tax season without the need for an appointment, case, or call. With the straightforward questions out of the way, internal teams are freed up to focus on critical tasks and strategic initiatives that support 1-800Accountant’s business growth.

While we still have a lot of work to do to achieve EGI, these early results are encouraging and an indication that we’re on the right path for a transformative future of digital labor.

Optimizing for EGI: Test, Measure, and Train

At Salesforce, we are dedicated to strengthening trust not only within our own products but also by providing industry-wide resources and tools that elevate AI standards across the board.

And at Salesforce AI Research, we keep pushing what’s possible in enterprise AI. A cornerstone of this effort is Salesforce’s CRM Benchmark, the first LLM benchmark designed specifically for CRM use cases. This benchmark evaluates models across critical dimensions such as accuracy, cost, speed, and trust and safety, using real business data and expert assessments to ensure AI agents meet professional standards before reaching customers.

However, meaningfully improving AI for business takes more than measuring LLMs’ performance on academic benchmarks. Models need to be tested in a realistic environment, and that testing has to be done in a secure and trusted way.

That’s why we developed CRMArena, a simulation environment to assess agent behavior in realistic CRM scenarios. In its initial phase, CRMArena replicates tasks for three key personas: service agents, analysts, and managers. Early results have shown that, even with guided prompting, agents succeed less than 65% of the time at executing function calls for these personas’ use cases, underscoring the gap in readiness for enterprise deployment.

CRMArena goes beyond basic accuracy tests, rigorously stress-testing AI agents in multi-turn business scenarios to evaluate their ability to complete tasks reliably, adapt to unexpected challenges, and maintain coherence throughout. This proactive testing helps uncover potential issues, ensuring that AI agents are truly ready for real-world deployment — much like a flight simulator prepares pilots for the complexities of actual flight.
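
To make the idea of a per-persona function-call success rate concrete, here is a minimal sketch. It is not CRMArena's implementation: the task format, the function names in the expected calls, and `toy_agent` are all hypothetical, and the toy tasks are illustrative data only.

```python
from collections import defaultdict


def success_rate_by_persona(tasks, agent):
    """Return {persona: fraction of tasks where the agent's call matched}.

    Each task is a dict with a "persona", a natural-language "request", and
    the "expected_call" as a (function_name, arguments) tuple.
    """
    attempts = defaultdict(int)
    successes = defaultdict(int)
    for task in tasks:
        attempts[task["persona"]] += 1
        # A call counts as a success only if name and arguments both match.
        if agent(task["request"]) == task["expected_call"]:
            successes[task["persona"]] += 1
    return {p: successes[p] / attempts[p] for p in attempts}


def toy_agent(request):
    """Hypothetical agent that only knows how to close a case by number."""
    if request.startswith("close case"):
        return ("close_case", {"case_id": int(request.split()[-1])})
    return ("unknown", {})


# Illustrative tasks only; these are not CRMArena data.
TASKS = [
    {"persona": "service agent", "request": "close case 1",
     "expected_call": ("close_case", {"case_id": 1})},
    {"persona": "service agent", "request": "close case 2",
     "expected_call": ("close_case", {"case_id": 2})},
    {"persona": "analyst", "request": "close case 7",
     "expected_call": ("close_case", {"case_id": 7})},
    {"persona": "analyst", "request": "count open cases",
     "expected_call": ("count_cases", {"status": "open"})},
]

rates = success_rate_by_persona(TASKS, toy_agent)
```

Requiring an exact match on both function name and arguments is a deliberately strict choice. A multi-turn harness would also need to track state across turns, which this single-call sketch leaves out.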

We are also investing in other ways to measure and benchmark LLMs. This includes our recent dataset, SIMPLE, which helps quantify LLM jaggedness by tracking performance gaps to help guide the development of more reliable AI for enterprise applications.
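
One simple way to express a "performance gap" in code is the spread between a model's best and worst task categories. The sketch below is an assumption made for illustration, not the metric SIMPLE actually uses, and the function names and category labels are hypothetical.

```python
from collections import defaultdict


def accuracy_by_category(results):
    """results: iterable of (category, passed) pairs -> {category: accuracy}."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for category, passed in results:
        totals[category] += 1
        passes[category] += int(passed)
    return {c: passes[c] / totals[c] for c in totals}


def jaggedness_gap(results):
    """Spread between the best and worst category accuracy (0.0 = even)."""
    accuracy = accuracy_by_category(results)
    if not accuracy:
        return 0.0
    return max(accuracy.values()) - min(accuracy.values())
```

Under this definition, a model that passes every summarization task but only half of its counting tasks has a gap of 0.5, while a model that performs equally across categories has a gap of 0.0, regardless of how capable it is overall.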

Combating hallucinations: A platform approach to ensuring AI consistency and trust

The inconsistency of LLMs today poses a substantial business risk, including eroded trust, damaged customer relationships, and potential financial or compliance consequences. While CIOs and IT leaders are enthusiastic about adopting agentic systems, they remain hesitant about widespread deployment due to concerns over these inconsistencies. Demos and lab results are no longer enough. Enterprises need a platform that ensures reliability, predictability, and accountability — one that goes beyond powerful models to a system-level approach. This approach integrates retrieval, orchestration, reasoning, and human oversight to guarantee that AI is grounded in trusted capabilities and consistent performance.

Our comprehensive efforts, which include CRM-specific benchmarks, realistic stress testing and training environments, and foundational offerings like the Trust Layer and Atlas Reasoning Engine, tackle the core issues of jagged intelligence and hallucinations. By embedding these resources into the heart of Agentforce, we’re ensuring that enterprises can confidently rely on the safety and reliability of their AI solutions.

Together, these innovations represent more than just incremental improvements — they form the blueprint for EGI. EGI is not about chasing abstract notions of human-level cognition; it’s about engineering AI that businesses can trust. It prioritizes consistency as much as capability, and reliability as much as reasoning. As AI becomes more deeply embedded in every layer of the enterprise, it’s this blend — advanced intelligence anchored by guardrails, context, and control — that will define success. Salesforce’s approach to trusted AI isn’t a waiting game for AGI. It’s a commitment to delivering EGI now — purpose-built, reliable, and ready for work.

Go deeper:

- Learn how Salesforce AI Research is building AI models, benchmarks, and frameworks to enhance enterprise AI

- Read about Enterprise General Intelligence and the rise of agentic simulation testing and training environments

- Learn why LLMs and Copilots miss the mark for enterprise AI adoption