Editor’s Note: AI Cloud, Einstein GPT, and other cloud GPT products are now Einstein. For the latest on Salesforce Einstein, go here.

Generative AI is dominating the news cycle — and with good reason — as companies embrace opportunities to boost productivity and improve customer experiences in a myriad of ways.

But amid this innovation lurks a new risk for enterprises: How can they keep trusted data secure?

The latest findings from Salesforce’s Generative AI Snapshot Research Series, an ongoing study of more than 4,000 full-time employees, reveal that 73% of employees believe generative AI introduces new security risks, yet most use or plan to use the technology. The research also shows that many don’t know how to protect their companies from those risks.

While 61% of employees use or plan to use generative AI at work, nearly 60% of those who plan to use it don’t know how to do so with trusted data sources or while keeping sensitive data secure.*

Salesforce’s Generative AI Snapshot Research Series

Generative AI adoption is moving quickly and will transform customer relationships

Employees see the potential of generative AI and are already using or planning to use the technology. They cite better customer service and time savings among their reasons for adopting it.

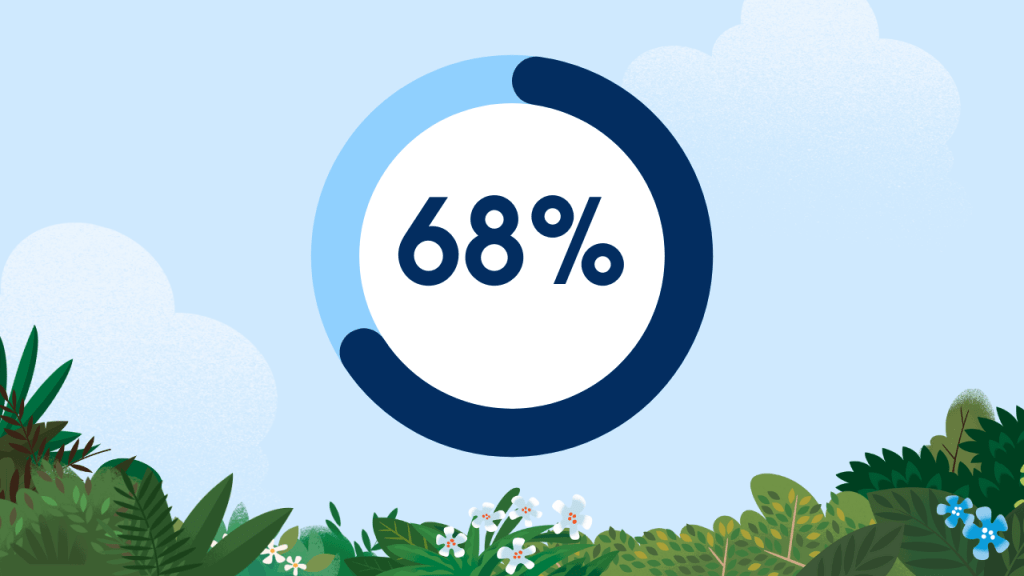

68% of employees say generative AI will help them better serve their customers.

67% say generative AI will help them get more out of their other technology investments, like other AI tools and machine-learning models.

Employees estimate saving an average of five hours a week using generative AI, the equivalent of about one month per year for a full-time employee.**

Employees don’t yet know how to leverage trusted generative AI responsibly

As generative AI becomes more widely adopted, trust and security concerns surface. With little knowledge of how to use generative AI responsibly, employees risk introducing inaccuracies, biases, and security issues.

Spotlight: Generative AI is set to affect individual workers’ day-to-day work more than executives’. Yet research shows that leadership is overconfident in its ability to use generative AI responsibly, leaving individual contributors exposed to risk.

Trusted data, security, and building an ethical foundation are critical in establishing generative AI as a trusted technology

Employees say these four major elements are required to successfully use generative AI in their role and at their organization:

“Generative AI has the potential to help businesses connect with their audiences in new, more personalized ways,” said Paula Goldman, Chief Ethical and Humane Use Officer, Salesforce. “As companies embrace this technology, businesses need to ensure that there are ethical guidelines and guardrails in place for safe and secure development and use of generative AI.”

More information

- Tune in to AI Day on Salesforce+ to learn more about the future of trusted enterprise AI

- Learn AI skills on Trailhead

- Read more about marketers’ perspectives on generative AI

Research methodology

Salesforce conducted a generative AI survey in partnership with YouGov from May 18 to 25, 2023. The data above reflects responses from 4,135 full-time employees across sales, service, marketing, and commerce. The respondents represent companies of various sizes and sectors in the United States, UK, and Australia. The survey took place online. The figures have been weighted and are representative of all US, UK, and Australian full-time employees (aged 18+).

*Respondents were asked whether they know how to “use generative AI safely (e.g., using trusted customer data sources and keeping first-party data secure).”

**(5 hours saved per week on average using generative AI × 52 weeks per year) ÷ 8-hour working day = 32.5 working days, or roughly a month per year saved on average.