AI is a bit like the lotto: it’s easy to imagine the possibilities if it hits. But hitting the AI jackpot — a tech-powered utopia where AI assistants supercharge worker productivity and create new opportunities everywhere — hinges on one critical element: trust.

From researchers and data scientists to designers and futurists, some of Salesforce’s leading AI minds weigh in on why building a foundation of trust is AI’s number one job.

The promise of AI depends on getting trust right, and that work starts right now.

Great AI requires an understanding of humans

People have used AI for years — from unlocking phones with facial recognition and hailing a rideshare vehicle to matching with someone on a dating app.

These experiences are what Kat Holmes, Salesforce’s EVP and Chief Design Officer, calls “matchmaking,” marrying an individual’s needs with the technology’s capabilities.

“Machine intelligence and machine learning have been a key part of our society for a while and success happens when there’s a positive match with a person — a relevant result that feels like it was made for me,” she said.

Today, however, AI is in the midst of its second wave, which introduced generative AI and far-reaching implications for business and society. And it’s spurred renewed urgency to ensure that AI is built with trust at the center.

The question technologists and businesses are asking today is how.

Designing trusted AI

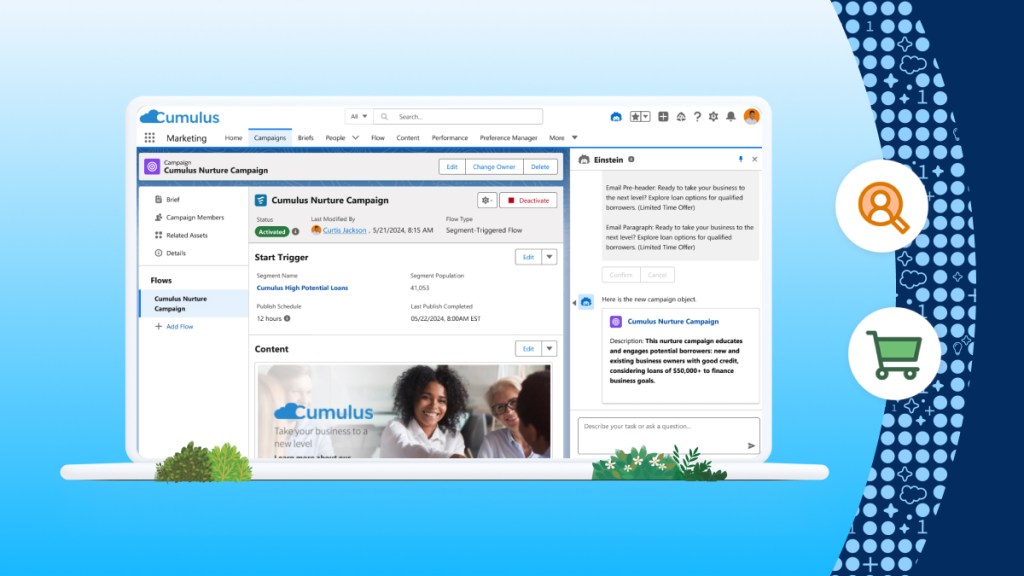

How developers design the user interface of AI is a key ingredient to establishing trust.

“One of the most important and challenging jobs of design is to help people take that first step into a new world of a new technology,” Holmes said. “People need simple proof that taking that leap of trust will work out.”

Don’t overthink this step, Holmes says.

“It could be a simple button that provides a recommendation,” she said. “That little, simple proof point is just like the first ‘hello,’ when people meet each other. It’s the moment of eye contact.”

And then, just as with human beings, the next step in cultivating trust in AI is continued interaction, such as judging whether an AI-powered output was useful or whether it could be reused or improved.

“That becomes a reciprocal relationship between me and this technology,” she said. “After I try that first interaction the next step is being able to shape and improve that technology with my feedback. With these feedback loops, I start to build a cycle of trust that can lead to more complex and maybe more exciting scenarios down the line. But it all begins with a simple compelling invitation to try something new.”

How to earn workers’ trust in AI

Getting to that first step, however, means businesses need to galvanize their workforces around it.

A recent Harvard Business Review study found an AI trust gap: most people (57%) don’t trust AI and 22% are neutral. Research from Salesforce also shows that 68% of customers say that advances in AI make it even more important for companies to be trustworthy.

Fortunately, there are a few routes to earning workers’ trust in AI, according to Daniel Lim, Senior Director at Salesforce Futures, a team dedicated to helping the company and its customers anticipate, imagine, and shape the future.

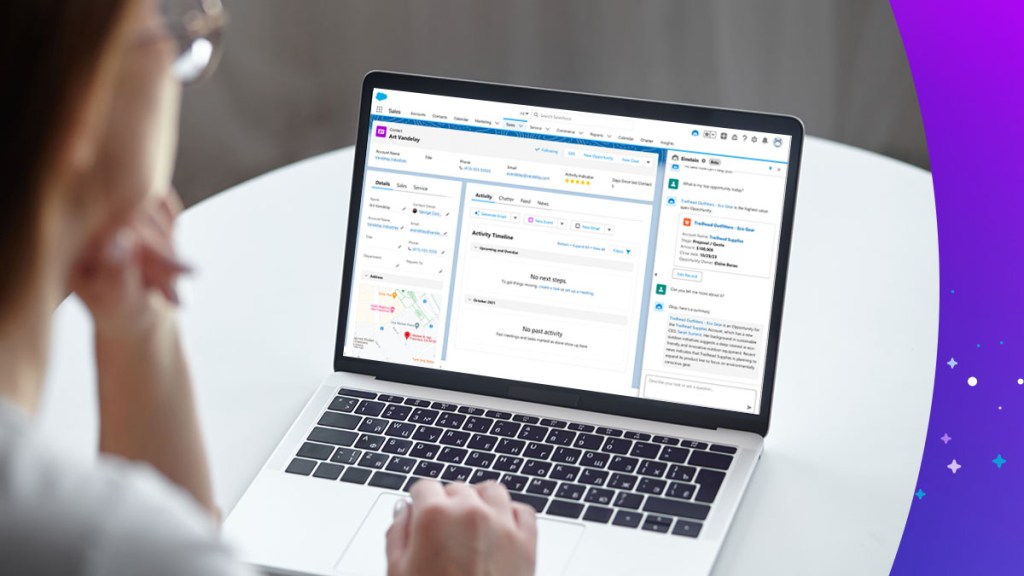

One key path is training. “People are more likely to trust AI when they feel they understand when and how AI is used and have sufficient skills to use the technology,” Lim said, pointing to a global study on trust in AI from the University of Queensland and KPMG. “Improving people’s AI literacy and skills can help to build trust.”

Another path is societal and institutional. People tend to delegate their trust to authoritative sources — for example, having confidence that their company or government has set up safeguards for a technology.

That’s one reason why Lim says it’s incumbent on businesses to provide training and establish safeguards around when to use AI and when not to. “That’s the starting point for the conversation for whether employees will trust AI in the workplace.”

Organizations must also ensure that humans remain at the helm, orchestrating AI and providing oversight of the model, when and where appropriate. As part of its commitment to responsible and trusted AI, for example, Salesforce has launched several training courses on Trailhead to help users uplevel their knowledge and ability to responsibly use AI.

Lastly, there’s no substitute for using the technology. “As with any technology, whether it’s a tool like a fork or a hammer,” Lim said, “trust is earned when a technology proves to be beneficial over time.”

Trusting AI isn’t something that gets solved in a boardroom. “Adapting to a changing environment isn’t something a company does,” Wharton professor Adam Grant writes in Think Again, “It’s something that people do in the multitude of decisions they make every day.”

Building AI that overcomes technical concerns

Human beings have always forged new technology to advance society, and it hasn’t always been a smooth road, said Muralidhar Krishnaprasad, EVP of Engineering at Salesforce.

“It’s important to recognize when these disruptive trends arise, there’s always fear of what can happen,” Krishnaprasad said. “But at the same time, if you ignore it, you may not exist as a company or as a business because it’s such a disruptive change.”

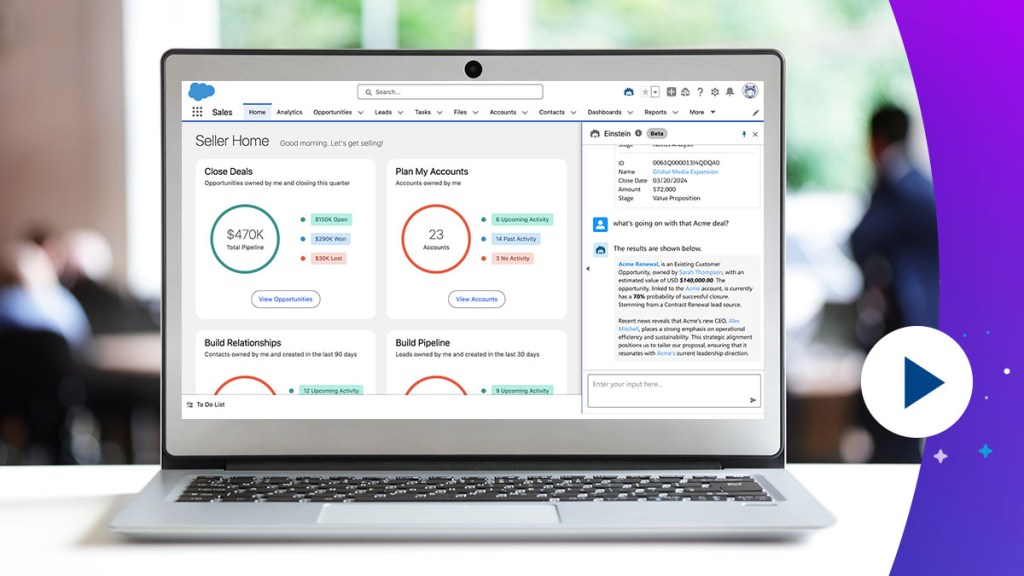

To address concerns over AI hallucinations, and toxic or biased outputs, Krishnaprasad stressed the need to ensure models are grounded in high-quality data — especially when it comes to generative AI.

“With generative AI in particular, because the whole model is built to be creative — it tries to create a lot of stuff even if it doesn’t exist,” he said. “It’s really important for you to make sure, number one, start with the right data and grounding. If you’re going to ask a question, make sure you know what data you want to feed it to get the answers.”

Users should also understand where their data is going when using generative AI — notably, if the Large Language Model (LLM) behind the tool is capturing their data for training. “Grounding is also important for security because if you give all your models to an LLM, that LLM provider is going to suck all your data and incorporate it into the model which means your data is now public domain.”

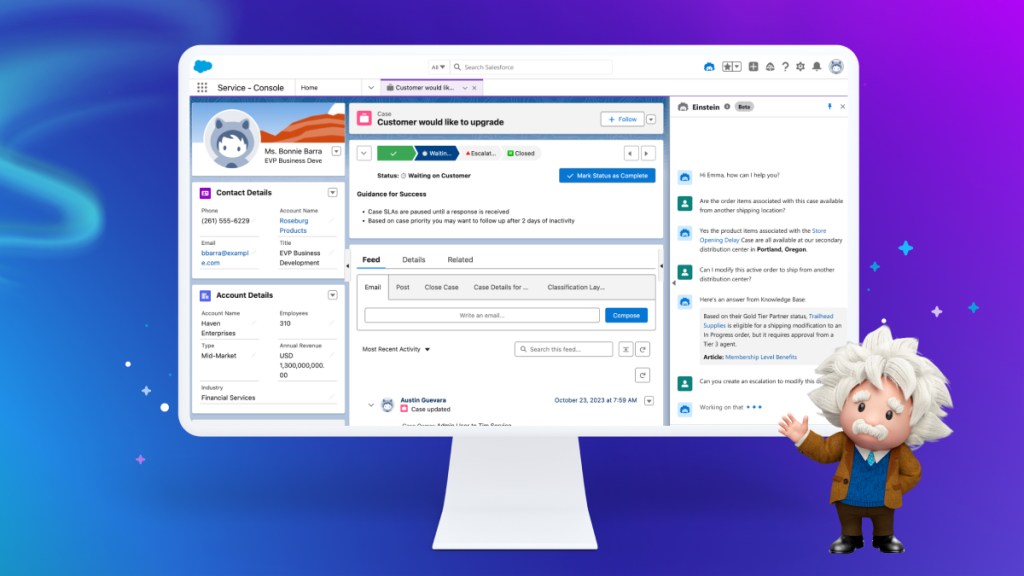

To eliminate this concern, Salesforce has instituted a zero-retention policy, meaning no customer data is ever retained by third-party LLM providers. The company’s Einstein Trust Layer, announced in 2023, is an answer to the question, “How do I trust generative AI?” In short, it masks personally identifiable information (PII) to ensure secure data doesn’t go to an LLM provider, allowing teams to benefit from generative AI without compromising their customer data.
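As a rough illustration of the masking idea, the sketch below replaces email addresses and phone numbers with placeholders before text would be sent to an external model, then restores them in the response. It is not Salesforce’s implementation: the `PII_PATTERNS` table and the `mask_pii` and `unmask` helpers are hypothetical names invented for this example, and real PII detection covers far more than two regular expressions.

```python
import re

# Hypothetical patterns for illustration only; production PII detection
# is much broader than these two regular expressions.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def mask_pii(text):
    """Replace each match with a numbered placeholder.

    Returns the masked text and a mapping from placeholder to original value.
    """
    mapping = {}
    for label, pattern in PII_PATTERNS.items():
        def _substitute(match, label=label):
            placeholder = f"[{label}_{len(mapping) + 1}]"
            mapping[placeholder] = match.group(0)
            return placeholder

        text = pattern.sub(_substitute, text)
    return text, mapping


def unmask(text, mapping):
    """Restore the original values in text that contains placeholders."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


original = "Email jane.doe@example.com or call 415-555-0100."
masked, mapping = mask_pii(original)
print(masked)
print(unmask(masked, mapping))
```

Keeping the mapping on the caller’s side means the external provider only ever sees placeholders, while the final response can still be personalized.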

Additionally, prompt engineering, the craft of phrasing the tasks users ask AI to perform, is crucial for ensuring accurate AI outputs. Beyond telling a generative AI model what to create, prompts allow users to limit what data the models use so they can improve the accuracy of outputs.
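One way to picture a prompt that limits what data a model uses is the minimal sketch below. The `build_grounded_prompt` function, the instruction wording, and the sample records are assumptions made for this example, not a Salesforce API.

```python
def build_grounded_prompt(question, records):
    """Assemble a prompt that restricts the model to the supplied records.

    The instruction wording is illustrative, not a recommended standard.
    """
    context = "\n".join(
        f"[{index}] {record}" for index, record in enumerate(records, start=1)
    )
    return (
        "Answer using only the numbered records below. "
        "If the records do not contain the answer, say you do not know.\n\n"
        f"Records:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_grounded_prompt(
    "When does the contract renew?",
    ["Contract renews 2025-01-01", "Owner: A. Rivera"],
)
print(prompt)
```

Numbering the records also gives the model something concrete to cite, which makes its answer easier to check against the source data.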

Trustworthy AI is ethical AI

For all the promise AI holds, it is not without risks.

Paula Goldman, Chief Ethical and Humane Use Officer, and Kathy Baxter, Principal Architect for Salesforce’s Ethical AI practice, warned in a co-authored article about the need to “prioritize responsible innovation to help guide how this transformative technology can and should be used — and ensure that our employees, partners, and customers have the tools they need to develop and use these technologies safely, accurately, and ethically.”

In their article, Goldman and Baxter note Salesforce’s five guidelines for developing trusted generative AI, which include accuracy, safety, honesty, empowerment, and sustainability.

Kai Nunez, Salesforce’s VP of Research & Insights, also highlighted the need for a technologist’s Hippocratic Oath.

“We must become more responsible as technologists in order to deliver products that do no harm. We must be held accountable for the features, functionality, or code that we put out into the world,” she said. “AI technology leaders can minimize bias by weaving ethics into the product development lifecycle at every stage. It cannot be an afterthought. It must be a part of the definition of done.”

While there have been incidents of AI generating content that reflects stereotypes and biases, Nunez said that’s exactly why AI development must be inclusive, particularly among historically underrepresented groups, and why more work needs to be done to earn their trust in the technology. Trust is built through representation. People prefer to use tools that reflect their voice, their needs, and their unique experiences. The inclusion of the experiences of these underrepresented groups is fundamentally necessary in order to avoid creating a vicious cycle of underrepresentation in the models.

Building trustworthy AI

On the other hand, Holmes also sees a road to greater accessibility for roles and a democratization of tech employment. As AI models become increasingly capable of shouldering data and analytics work, people who lack those skills and were previously unable to consider various tech roles can now bring their unique perspective to them.

“We want people from all backgrounds to be able to come into technology and contribute to the shape and design of it,” Holmes said. “AI is the opportunity to open up that door in a really broad way and have contributors from all backgrounds.”

Holmes continued: “It all comes down to that front door that we create and how welcoming that experience is once people come in and start using AI. If people feel like they belong in the middle of this revolution, they’re going to contribute to shaping it for the future.”

Salesforce’s Chief Scientist of AI Research Silvio Savarese has also outlined specific practices for building trusted AI.

Savarese says more must be done to build awareness among users so they understand AI’s strengths and weaknesses. AI models themselves can help with this, flagging how confident the model is that a given output is correct.

AI models should also flag to users what data a response was based on, which addresses concerns about trust and accuracy directly. Essentially, transparency and explainability are key.
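A minimal sketch of what surfacing confidence and sources to a user might look like follows. The `ModelAnswer` structure, the 0.0 to 1.0 confidence score, and the 0.6 threshold are all assumptions for illustration; how a real system obtains a calibrated confidence value is outside the scope of this example.

```python
from dataclasses import dataclass, field


@dataclass
class ModelAnswer:
    text: str
    # Assumed to be a score between 0.0 and 1.0 supplied by the model
    # or by a separate calibration step.
    confidence: float
    sources: list = field(default_factory=list)


def render_answer(answer, low_confidence_threshold=0.6):
    """Format an answer so its confidence and sources are visible to the user."""
    lines = [answer.text, f"Confidence: {answer.confidence:.0%}"]
    if answer.confidence < low_confidence_threshold:
        lines.append("Note: low confidence, please verify before relying on this.")
    if answer.sources:
        lines.append("Based on: " + ", ".join(answer.sources))
    else:
        lines.append("Based on: no cited sources")
    return "\n".join(lines)


answer = ModelAnswer(
    text="Renewal is due in Q1.",
    confidence=0.42,
    sources=["Account record", "Contract PDF"],
)
print(render_answer(answer))
```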

Putting together the puzzle pieces of trust

To ensure all stakeholders — from employees and customers to regulators and the general public — can place their trust in AI, many pieces of a complicated puzzle must come together. Fortunately, companies don’t need to reinvent the wheel — the path to trust for AI is well-worn by the technologies and big ideas that preceded it.

So, perhaps AI isn’t at all like the lotto. Companies and leaders can make their own AI luck, simply by following in the trusted footprints of others.
