Imagine missing out on a deal with an excellent vendor for the distribution of your products just because your AI tags their location as ‘non-serviceable’. Or imagine a self-employed professional being denied a loan simply because they don’t have a corporate email, while a friend with the same income but a 9-to-5 job is approved instantly.

These aren’t just technical glitches. They’re real-world consequences of AI bias.

Businesses depend on AI for everything from automating customer support to creating marketing narratives to making hiring choices. But as powerful as AI is, it isn’t immune to flaws. One of the most critical is AI bias: prejudice creeping into automated decisions. So what exactly is AI bias?

Understanding AI bias

AI bias refers to systematic errors in AI outputs that unfairly favour or disfavour certain individuals or groups, often because of their gender, age, race, or socioeconomic background. Simply put, when AI algorithms are trained on imbalanced data, they can reflect, and even amplify, the biases present in human society.

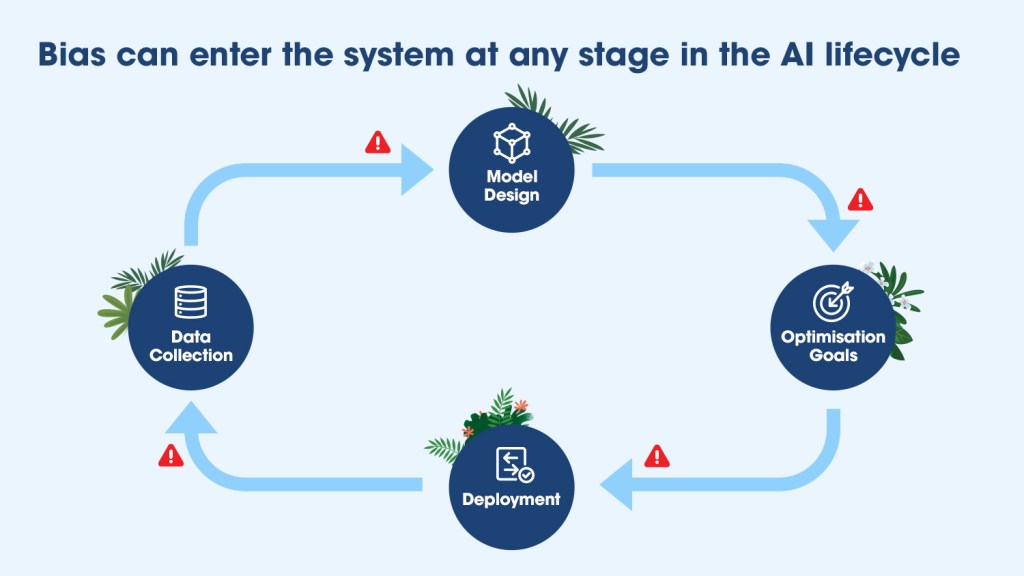

AI is not intentionally biased. Bias does not start with the machine; it starts with us. From the datasets we feed into a model to the objectives we optimise it for, bias can creep into any stage of the AI lifecycle.

How does AI bias happen?

AI models are trained on historical data to detect patterns and make predictions. If that data is biased or the model misinterprets patterns, those flaws will show up in the system’s decisions.

For instance, if an AI model is trained on historical hiring data of a company that predominantly hired men, it may learn to favour male candidates even when women have equal qualifications.

Explore Trailhead to learn more about the different types of bias in AI.

How does bias enter the system?

Bias can sneak in at many points in the AI lifecycle. It could be:

- In the assumptions – Often, unintentional biases stem from early assumptions about who the system is for and how it should work. Including diverse voices early can reduce this blind spot.

- In the data – What we choose to include or exclude matters. If past hiring data is skewed, for example, the model will likely carry that forward.

- In the algorithms – The features we train on, like zip code or first name, can act as hidden proxies for race, gender, or nationality, leading to unfair outcomes. Read more about identifying attributes that can introduce bias.

- In the context – How and where AI is deployed changes everything. A model might perform well in one region but behave very differently elsewhere.

- In the feedback loop – Without a way for users to give feedback or correct errors, biased patterns can go unchecked. That’s why human oversight matters.

Each stage presents a unique opportunity for bias to slip in—and go undetected until it causes harm.

Tracing the roots: What causes AI bias?

If we can accurately understand the origins of AI bias, we can identify and prevent it more easily. Most sources of bias fall into three categories: data, design, and social influences. Each plays a distinct role in shaping how bias manifests in AI systems.

Data biases

Bias in training data is one of the most common and persistent sources of AI bias. Data bias can take many forms. When certain groups are underrepresented, mislabeled, or misrepresented in training data, the AI system learns from those gaps. As a result, it may overlook important differences, make inaccurate predictions, or reflect unfair stereotypes. This becomes a bigger problem when biased patterns in historical data—like who got hired, promoted, or approved for loans—are treated as neutral facts, even though they reflect real-world inequalities.

For example, historical hiring data that underrepresents women and minority candidates is a form of data bias.
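A simple way to make “underrepresented” measurable is to compare each group’s share of the training data with its share of a reference population. The sketch below is illustrative only: it assumes you have one group label per training record and a reference distribution (for example, from census or market data). The function name, input format, and the 0.8 tolerance are hypothetical choices, not a standard.

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares, tolerance=0.8):
    """Compare each group's share of a training set with a reference share.

    group_labels: one group label per training record (assumed input format)
    reference_shares: dict of group -> expected share, e.g. from census data
    tolerance: hypothetical cut-off; a group is flagged when its observed
               share is below tolerance * expected share
    """
    counts = Counter(group_labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no training records supplied")
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        # A group with a zero expected share is never flagged.
        flagged = expected > 0 and observed < tolerance * expected
        report[group] = {"observed": observed, "expected": expected,
                         "underrepresented": flagged}
    return report


# Toy hiring dataset: 80 records from men, 20 from women (made up for illustration).
training_groups = ["men"] * 80 + ["women"] * 20
print(representation_gaps(training_groups, {"men": 0.5, "women": 0.5}))
```

Here women make up 20% of the records against an assumed 50% reference share, so they are flagged. A check like this only covers representation; it says nothing about mislabelled records or biased historical outcomes.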

Why it matters: If the data does not actually represent diverse, real-world users, the AI systems will fail to serve them equally. In extreme cases, such biased outputs can reinforce harmful stereotypes or marginalise vulnerable groups.

Dive deeper into how data bias shapes AI outcomes. Learn with Trailhead.

Algorithm design biases

Even with clean data, algorithms can introduce bias. The assumptions we make while designing models, such as which features we include, what we optimise for, and how we measure accuracy, can all influence the outcome. And when algorithms are optimised only to chase clicks or shares, they often ignore whether the outcomes are fair or balanced.

For example, a loan approval algorithm might reject applicants from certain zip codes—not because of their creditworthiness but because those areas have historically been lower-income or predominantly minority communities.
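Dropping a protected attribute from the model does not stop a feature like zip code from standing in for it. One rough, illustrative test is to ask how well the feature alone predicts the protected attribute. The sketch below assumes both columns are available for an audit; the function name, the zone codes, and the data are hypothetical.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, protected_values):
    """Rough check of how much a feature (e.g. zip code) reveals a protected attribute.

    Returns (baseline, proxy_accuracy):
      baseline       - accuracy of always guessing the most common protected group
      proxy_accuracy - accuracy of guessing the most common group within each
                       feature value
    A proxy accuracy well above the baseline suggests the feature is acting
    as a stand-in for the protected attribute.
    """
    if not feature_values or len(feature_values) != len(protected_values):
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(protected_values)
    baseline = Counter(protected_values).most_common(1)[0][1] / n

    # Tally protected groups separately for each feature value.
    by_value = defaultdict(Counter)
    for feature, protected in zip(feature_values, protected_values):
        by_value[feature][protected] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    return baseline, correct / n


# Made-up data: two hypothetical zones with very different group compositions.
zones = ["Z1"] * 50 + ["Z2"] * 50
groups = ["A"] * 45 + ["B"] * 5 + ["B"] * 40 + ["A"] * 10
print(proxy_strength(zones, groups))  # baseline 0.55, proxy accuracy 0.85
```

Because this measures accuracy on the same data it tallies, features with many rare values (such as full postcodes) will look like stronger proxies than they are; evaluating on held-out records gives a fairer picture.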

Why it matters: Bias in model design can favour short-term gains over ethical considerations.

Societal and institutional biases

AI is built within a social context. If our institutions have systemic biases, those can be codified into AI. For example, a credit risk model trained on historical data might rate applicants from certain neighbourhoods as higher risk—not because of their individual financial behaviour, but due to systemic issues like lack of access to banking services in those areas. This reflects deeper societal inequalities baked into the data.

Why it matters: Without appropriate safeguards, AI systems can scale the very inequalities they are meant to resolve.

Why is it so hard to eliminate AI bias?

Removing bias from AI systems is no easy task. It involves a mix of cultural, technical, and operational challenges, including:

- The black box problem: In advanced AI models, especially deep learning systems, the outputs are notoriously difficult to interpret. Sometimes even developers struggle to explain why a model produced a particular output. This lack of transparency makes biased behaviour difficult to detect, audit, and fix.

- Model performance tradeoffs: Sometimes, making a model fairer means reducing its overall accuracy. For example, tweaking an algorithm to reduce gender bias might lower its performance on another metric. Because of this tradeoff, some developers may be reluctant to prioritise fairness unless regulation explicitly requires it.

- Bias is not always obvious: Sometimes, bias shows up only under specific conditions, in certain demographics, or after long-term use. Without continuous monitoring, these patterns can easily go unnoticed.

- Lack of standardised fairness metrics: There is no single, agreed definition of what ‘fair’ means in AI. Different contexts demand different fairness goals, and without industry-wide standards, there is room for inconsistency.

- Organisational gaps: Fairness is not just a technical problem; it is a cross-functional one. Data scientists, designers, ethicists, and business leaders should all be involved in ensuring fair AI outputs. Without coordinated ownership, efforts to mitigate bias may remain superficial.
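To see why no single definition of ‘fair’ exists, compare two widely used metrics: demographic parity (do groups receive positive decisions at the same rate?) and equal opportunity (do qualified people in each group receive positive decisions at the same rate?). The sketch below computes both on made-up data; the function names are illustrative, and it assumes binary decisions (1 = approved) and binary ground-truth labels (1 = qualified).

```python
def selection_rate(preds):
    """Share of people who received a positive decision (1)."""
    return sum(preds) / len(preds)


def true_positive_rate(preds, labels):
    """Share of truly qualified people (label 1) who received a positive decision."""
    preds_for_qualified = [p for p, y in zip(preds, labels) if y == 1]
    if not preds_for_qualified:
        raise ValueError("group has no positive labels")
    return sum(preds_for_qualified) / len(preds_for_qualified)


def fairness_gaps(preds, labels, groups, a, b):
    """Return (demographic parity gap, equal opportunity gap) between groups a and b."""
    def subset(g):
        rows = [(p, y) for p, y, grp in zip(preds, labels, groups) if grp == g]
        return [p for p, _ in rows], [y for _, y in rows]

    preds_a, labels_a = subset(a)
    preds_b, labels_b = subset(b)
    parity_gap = selection_rate(preds_a) - selection_rate(preds_b)
    opportunity_gap = (true_positive_rate(preds_a, labels_a)
                       - true_positive_rate(preds_b, labels_b))
    return parity_gap, opportunity_gap


# Made-up decisions for two groups of four people each.
preds = [1, 1, 0, 0, 1, 0, 1, 0]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
print(fairness_gaps(preds, labels, groups, "A", "B"))  # (0.0, 0.5)
```

Both groups are approved at the same rate, so the model looks fair by demographic parity. Yet only half of group B’s qualified applicants are approved, against all of group A’s, so it fails equal opportunity. Which gap matters more depends on the context, which is exactly why fairness goals have to be chosen deliberately.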

The business case: why you should care about fixing AI bias

As AI, especially generative AI, increasingly shapes decisions across functions, ignoring AI bias is not just an ethical issue; it’s now a business risk. Here are some reasons why businesses should engage in solving the AI bias problem:

- Reputational risk: Biased AI systems can lead to public backlash, regulatory scrutiny, or media scandals. Customers are also increasingly sceptical of opaque AI that can make biased decisions. The 7th edition of Salesforce’s State of the Connected Customer report found that transparency into how AI is used and explainability of its outputs are major factors that can increase customer trust in AI.

- Legal liability: Governments worldwide are rolling out policies to ensure AI fairness and accountability. Discriminatory outcomes can put companies on the wrong side of these emerging data privacy and anti-discrimination laws.

- Financial impact: Bias can affect business performance by alienating or underserving large customer segments. If your AI tools consistently exclude or misjudge certain demographics, it can impact customer satisfaction, safety, and ultimately, revenue.

- Employee morale: If employees see their company ignoring AI bias, it can lead to frustration and disengagement. People want to work for organisations that build fair, responsible tech they can stand behind.

- Long-term strategic advantage: Ignoring AI bias today can slow down innovation tomorrow. Tackling it early helps businesses build scalable, inclusive systems that are future-ready.

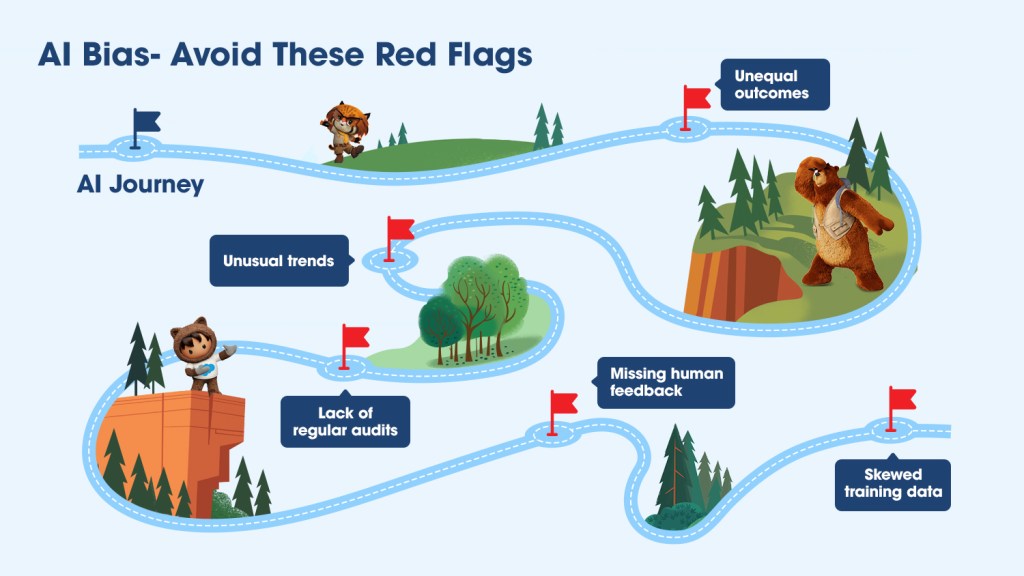

Spot the red flags: How to identify AI bias in your system

You cannot fix what you cannot see. The first critical step in resolving AI bias is knowing where to look.

- Analyse outcomes across demographics: Track how your AI systems perform for different user groups. Are there patterns in who gets hired, approved, recommended, or rejected? Note any discrepancies, and ask reviewers from multiple demographic groups to examine the outcomes as well.

- Monitor for anomalies: Unusual trends, such as a sudden drop in conversions for a specific segment, can point to systematic bias. Treat these anomalies as warning signs.

- Regularly audit AI models: Deploy fairness and accountability frameworks to evaluate your models. Schedule periodic reviews, which include qualitative and quantitative testing.

- Request human feedback: Sometimes, bias can be invisible to code but obvious to frontline users and customers. Establish open feedback loops so that your users can flag unfair experiences.

- Evaluate training data: If your inputs are skewed, the outputs will be too. Review the input datasets for quality and representativeness, especially across race, gender, age, and geography.
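A common starting point for analysing outcomes across demographics is the disparate impact ratio: each group’s selection rate divided by the rate of the most-favoured group. Under the “four-fifths rule” from US employment-selection guidelines, a ratio below 0.8 is treated as a warning sign worth investigating, not as proof of bias. The sketch below assumes decisions are logged as hypothetical (group, approved) pairs; the function name and log format are illustrative.

```python
from collections import defaultdict


def disparate_impact(decisions, threshold=0.8):
    """decisions: iterable of (group, approved) pairs, where approved is a bool.

    Returns {group: (selection_rate, impact_ratio, flagged)}. The impact ratio
    compares each group's selection rate with the highest-rate group; groups
    below `threshold` (0.8 by default, after the four-fifths rule) are flagged.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    rates = {g: approvals[g] / totals[g] for g in totals}
    best = max(rates.values())
    report = {}
    for g, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0
        report[g] = (rate, ratio, ratio < threshold)
    return report


# Made-up log: group X approved 60 of 100 times, group Y 30 of 100 times.
log = [("X", i < 60) for i in range(100)] + [("Y", i < 30) for i in range(100)]
for group, (rate, ratio, flagged) in disparate_impact(log).items():
    print(group, rate, round(ratio, 2), flagged)
```

Running the same check on a schedule, per segment, also covers the “monitor for anomalies” step: a group whose ratio drifts below the threshold over time is exactly the kind of warning sign described above.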

Fixing AI bias: A blend of strategy and technique

Identifying AI bias is only half the battle; the next step is fixing the issues you diagnose. Here are some established ways organisations can reduce AI bias and build fairer, more transparent systems.

| What to do | Why it matters | How it helps |
| --- | --- | --- |
| Diversify your training data | Ensures your AI models represent the full spectrum of the people they serve. | Reduces skewed outcomes and improves fairness for underrepresented groups. |
| Use fairness-aware algorithms | Optimise not just for performance, but for equity and fairness. | Helps mitigate bias at the model level by incorporating fairness constraints. |
| Deploy human-in-the-loop safeguards | Keeps humans involved in high-stakes decisions, especially where bias could have real-world consequences. | Builds customer trust: 71% of customers prefer a human to validate AI decisions (Salesforce report). |
| Leverage bias detection tools | Tools like IBM’s AI Fairness 360 or Google’s What-If Tool can surface hidden biases in your models. | Provides measurable insights into where bias exists and how to address it. |
| Conduct statistical and visual checks | Use techniques like descriptive statistics and data visualisation to spot anomalies or skewed patterns. | Makes data issues visible early in the process, before they impact model performance. |
| Involve diverse teams | Your AI is only as inclusive as the team behind it. | Different lived experiences help surface blind spots and identify unfair outcomes. |
| Validate with external benchmarks | Compare your internal data with independent, credible sources. | Helps ensure your model isn’t operating within a bubble of bias. |
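As one concrete example of mitigation at the data level, reweighing (proposed by Kamiran and Calders, and included in AI Fairness 360 as a preprocessing algorithm) gives each training example a weight so that group membership and outcome become statistically independent in the weighted data. The sketch below is a from-scratch illustration on made-up data, not the AI Fairness 360 API; the function name is hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    (group, label) combinations that are rarer than independence would predict
    get weights above 1; over-represented combinations get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Made-up data: group A has 3 of 4 positive outcomes, group B only 1 of 4.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

# After weighting, both groups have the same positive rate (0.5).
for g in ("A", "B"):
    pos = sum(w for w, gg, y in zip(weights, groups, labels) if gg == g and y == 1)
    tot = sum(w for w, gg in zip(weights, groups) if gg == g)
    print(g, round(pos / tot, 3))
```

The resulting weights can be passed to any training routine that supports per-example weights; many scikit-learn estimators, for instance, accept a `sample_weight` argument in `fit`. Reweighing changes only how much each record counts, so it cannot fix labels that are themselves biased.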

Want to go a step further in building ethical, responsible AI systems?

Explore Trailhead to understand key concepts and best practices.

Conclusion

When it comes to AI, accuracy and innovation aren’t enough. The systems we build must also be accountable, equitable, and safe—for everyone. At Salesforce, that responsibility is woven into every layer of our AI strategy. Our Office of Ethical and Humane Use guides this work, helping us design AI that’s not only powerful, but also trustworthy and transparent.

Whether it’s through robust internal frameworks or real-time features in our products, we aim to spot and correct bias before it causes harm. Take Einstein Discovery, for example—it helps users proactively detect bias, flag sensitive or proxy variables, and reduce disparate impact in predictions. Tools like model cards make these choices visible, so users understand how and why a model was built.

We also apply rigorous product design principles—model safety testing, in-app guidance, adversarial testing, and transparent disclosures—so our customers can use AI with greater confidence. Grounded in five core principles—Accuracy, Safety, Transparency, Empowerment, and Sustainability—our approach to Responsible AI is as much about human dignity as it is about data.

Frequently Asked Questions (FAQs)

Why does AI bias matter for businesses?

Biased AI can lead to unfair outcomes, harm customers, and damage your brand’s credibility. It can also lead to legal complications and lost opportunities with underserved markets.

Can AI bias be completely eliminated?

No system is perfect. But with the right data practices, transparency, and regular checks, businesses can significantly reduce bias and make AI fairer and more reliable.

What are the risks of not addressing AI bias?

Organisations that fail to address AI bias can face regulatory setbacks, negative press, loss of customer trust, and missed growth opportunities, especially in communities that feel excluded by their systems.

How can you detect AI bias?

Start by looking for unusual or inconsistent patterns in your AI’s outputs across different groups. Use fairness audit tools and track performance gaps to surface hidden bias.

How can businesses reduce AI bias?

Diversify your training data, use bias-aware algorithms, involve people from varied backgrounds in development, and adopt tools like Salesforce’s Einstein Discovery to flag and fix issues early.