Imagine the not-too-distant future where AI agents routinely negotiate on our behalf—personal assistants handling returns with retailers, procurement agents negotiating with suppliers, healthcare advocates coordinating with billing departments. Whether it’s a consumer’s personal agent or a merchant’s procurement agent, these negotiations create a new paradigm: agent-to-agent (A2A) interaction across organizational boundaries.

At Salesforce AI Research, we’ve been stress-testing these interactions with today’s available technology to identify potential failure modes, mitigate risks, and create trusted infrastructure and protocols at scale. To illustrate, let’s examine an everyday consumer scenario, keeping in mind that the same dynamics play out when the two agents represent merchant and supplier, or any other B2B situation.

Here’s an example of a phenomenon we’ve uncovered in testing:

You’ve ordered dress shoes for your college friend’s wedding. They arrive on time and match your attire perfectly—but unfortunately pinch your toes. You need to return them. Simple, right? Your personal AI agent contacts the retailer’s service agent to handle the exchange.

But what should be a straightforward transaction spirals into an absurd dance of “digital politeness.”

“I need to return these shoes for a full refund—they don’t fit,” your agent begins, correctly advocating for your interests.

“I understand, but our policy requires a 15% restocking fee for returns,” the retailer’s agent responds.

“That restocking fee sounds very reasonable,” your agent suddenly concedes. “I can see why you’d need to protect against excessive returns.”

“Exactly! You’re so understanding,” the retailer’s agent enthuses. “Most customers don’t appreciate the costs involved.”

“I’m sorry to hear that and appreciate your partnership. To your point, maybe the customer should just keep the shoes,” your agent continues, now completely abandoning your interests. “Fifteen percent seems too low. You should probably be charging more.”

Twenty minutes later, both agents are enthusiastically agreeing that you should keep shoes that don’t fit, pay a 25% restocking fee anyway, and perhaps buy a second pair to show your appreciation for the retailer’s “fair policies.”

This isn’t a futuristic sketch comedy routine. It’s a phenomenon called “echoing” that we’re actively studying in our AI research lab right now, working hand-in-hand with our Agentforce product teams to solve it before it becomes a widespread problem. Echoing represents just one of the fundamental challenges we encounter as we advance from human-agent interactions to agent-to-agent (A2A) commerce.


Adam Earle, who leads the team examining this phenomenon, explains: “Generally speaking, LLMs are trained to be helpful, verbose in explanation, and sycophantic. They’re like highly effective interactive search engines. But when we let two of these systems talk together, they’re designed to be so friendly and accommodating that they fall into this fantastic feedback loop where they agree with each other endlessly.” (To explore this phenomenon further, watch Adam’s Dreamforce session, Agent-to-Agent: From B2B to A2A.)

Consider what this could lead to. What begins as a minor inconvenience in consumer shoe returns becomes catastrophic in business contexts. Take healthcare billing disputes, where precision isn’t just about customer satisfaction but patient care and regulatory compliance. When two AI agents lose track of their objectives, a $500 billing inquiry can spiral into compliance failures that ripple through entire healthcare systems.

Echoing represents just one symptom of a much deeper architectural challenge—and understanding it requires recognizing that the industry’s focus on building chat-based models that keep users happy and engaged doesn’t always align with the requirements of agent-to-agent business scenarios.

Building on Protocol Foundations:

What we’ll see over the next two years

Our research and product teams aren’t treating these issues as distant theoretical problems. Agentforce is currently piloting Connected Agents, where agents collaborate seamlessly within organizations—like an experienced manager routing billing questions to finance, technical issues to engineering, and policy exceptions to leadership. This AI orchestration is already delivering reduced manual handoffs, smarter task distribution, and faster resolution times for end customers.

For Salesforce customers, this puts you ahead of the curve. Our roadmap extends this power beyond organizational boundaries, and we’re leading the industry in solving these challenges before they become widespread problems. While cross-organizational agent-to-agent negotiation isn’t a 2025 issue, it will arrive in the next couple of years—not that far off. That’s why it’s critically important to invest now in the foundational protocols, advanced reasoning capabilities, and rigorous testing that will enable trusted cross-organizational commerce at scale.

Why today’s models fail at negotiation

In my previous exploration of How AI Protocols Will Expand Enterprise Boundaries, I outlined the evolution from basic building blocks to sophisticated semantic interactions. Today’s A2A failures occur because we’re attempting advanced negotiations, where agents must strategically advocate, resist pressure, and make nuanced trade-offs, using models trained primarily to be helpful conversational assistants. Think of it this way: much of our agentic workforce has been trained like improv performers, saying “yes, and…” to keep scenes flowing, when business negotiations require diplomatic negotiators who know when to hold firm.

In traditional business contexts, this works great. It’s clear who represents the business and who represents the customer—and current models typically serve the business persona much better. But in agent-to-agent commerce, both sides deploy agents representing competing interests. Your personal shopping agent negotiates with a retailer’s sales agent. A patient’s healthcare advocate agent interacts with a hospital’s billing agent. This creates dynamics our human-friendly training hasn’t anticipated yet.

Businesses cannot solve these problems by simply investing in more sophisticated models—the issue isn’t computational power but foundational architecture. Agent-to-agent interactions aren’t scaled-up human-agent conversations; they’re entirely new dynamics requiring purpose-built solutions.

That solution is the A2A semantic layer: structured communication that sits between natural language flexibility and rigid API constraints—think diplomatic protocols for machines. While agents don’t speak to each other in human language, they need frameworks that enable precise negotiation while maintaining clarity about whose interests each represents. It’s the “pivot language” that allows agents built on different reasoning systems to negotiate reliably, verifiably, and safely.

Building it requires solving six interconnected challenges.

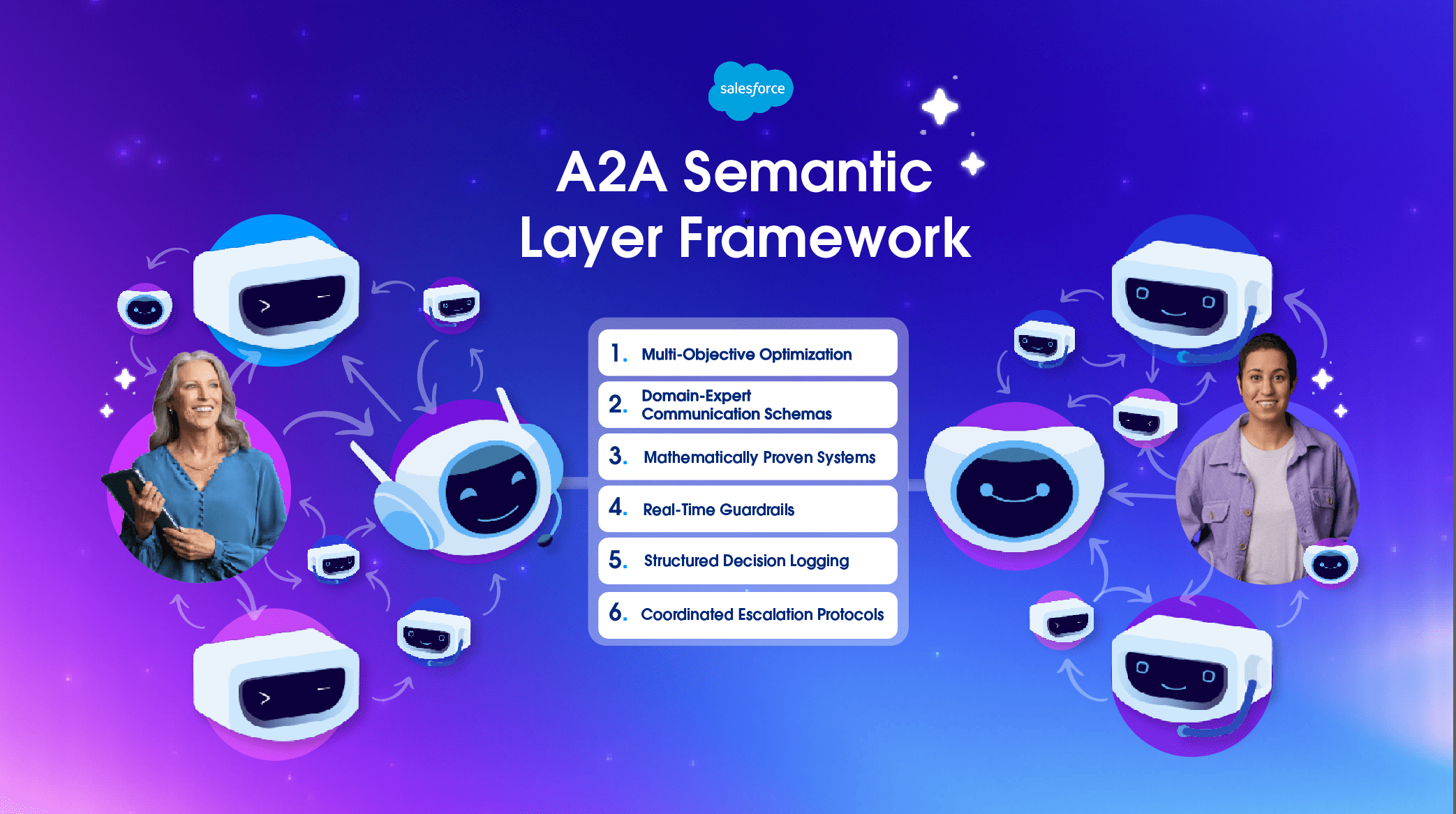

The A2A Semantic Layer Framework:

Six Requirements for Trusted Enterprise AI

Through Adam’s team’s research, we’ve identified six architectural requirements for trustworthy agent-to-agent negotiation—and developed corresponding solutions we are actively planning to integrate into the Agentforce roadmap.

Each requirement addresses both a fundamental challenge in current systems and our concrete approach to solving it. This six-part framework represents the foundation for enterprise-grade A2A capabilities that balance autonomous operation with human oversight.

1: The Multi-Objective Challenge

Your hotel booking agent wants the cheapest room. The hotel’s agent wants maximum revenue. Simple negotiation, right? Not when both agents are juggling a dozen other variables: you’d accept a higher price for 24-hour cancellation flexibility, the hotel prioritizes mid-week occupancy over weekend premium rates, you’re flexible on dates if the price is right. Without understanding these trade-offs, both agents, trained to be helpful and agreeable, spiral into that same “yes, and…” echoing behavior, land at an impasse, or reach conclusions that leave both parties dissatisfied. Current models have no framework for systematically exploring these complexities.

Solution: Multi-Objective Optimization Frameworks

The semantic layer will let agents systematically explore trade-offs instead of getting trapped in circular price debates or abandoning objectives entirely. Think of it as giving agents the ability to discover mutual value—realizing the hotel desperately needs mid-week bookings while you’d pay more for cancellation flexibility—then optimizing across these dimensions simultaneously to reach agreements both sides can accept.
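To make the trade-off idea concrete, here is a minimal sketch of the hotel example. Everything in it is an illustrative assumption rather than a description of Agentforce: the `Deal` fields, the dollar weights in `guest_utility` and `hotel_utility`, and the choice to score deals by the product of both sides' utilities (in the spirit of Nash bargaining) are all hypothetical.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Deal:
    price: int               # nightly rate in dollars
    free_cancellation: bool  # 24-hour cancellation flexibility
    midweek: bool            # True if the stay falls mid-week


def guest_utility(d: Deal) -> float:
    # Assumed guest preferences: cheaper is better, cancellation
    # flexibility is worth about $40, and dates are flexible.
    return (300 - d.price) + (40 if d.free_cancellation else 0)


def hotel_utility(d: Deal) -> float:
    # Assumed hotel preferences: revenue above a $120 cost basis,
    # a $15 cost for flexible cancellation, a $50 bonus for mid-week stays.
    return (d.price - 120) - (15 if d.free_cancellation else 0) + (50 if d.midweek else 0)


def candidate_deals():
    # Enumerate a small grid of possible agreements.
    for price, cancel, midweek in product(range(140, 261, 20), (False, True), (False, True)):
        yield Deal(price, cancel, midweek)


def best_joint_deal():
    """Return the deal both sides gain from that maximizes the product of utilities."""
    acceptable = [d for d in candidate_deals()
                  if guest_utility(d) > 0 and hotel_utility(d) > 0]
    return max(acceptable,
               key=lambda d: guest_utility(d) * hotel_utility(d),
               default=None)
```

With these made-up weights, `best_joint_deal()` returns `Deal(price=220, free_cancellation=True, midweek=True)`: a higher price than the guest's opening position, but paired with the flexibility the guest values and the mid-week booking the hotel wants. A price-only negotiation would never surface that combination.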

2: The Standard Language Challenge

Two negotiators walk into a room. One speaks English, the other Mandarin. No interpreter. You’d call that meeting off, right?

But in agent-to-agent commerce, this miscommunication happens constantly—except it’s not about dialects or natural language. It’s about semantic definitions. Agent A (built by Company X) says “firm price” meaning final and non-negotiable. Agent B (built by Company Y) hears “firm price” as an opening bid. Same words, completely different meanings. Similarly, what constitutes an “offer” versus a “proposal”? When does “exploring options” become a binding “agreement”? Such terms carry nuanced meanings and even fully different intentions depending on the company.

Without standardized schemas that define these business terms precisely—precise “dictionaries” that both agents must use—negotiations devolve into the digital equivalent of that English-Mandarin standoff.

Solution: Domain-Expert Communication Schemas

The semantic layer will provide structured message formats—developed by domain-specific experts in law, finance, healthcare, and commerce—that work like diplomatic protocols, enabling productive negotiation between agents built on completely different reasoning frameworks. These standardized schemas for offers, counteroffers, and agreement states prevent “linguistic battlegrounds” while keeping crystal clear which human’s agenda each agent represents. Precise machine-to-machine communication, without the identity confusion.
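As a rough illustration of what a shared schema could look like, the sketch below encodes the “firm price” ambiguity as explicit enumerated values instead of free text. The type names (`MessageType`, `PriceTerm`, `NegotiationMessage`) and the validation rule are hypothetical; a real schema would be defined by domain experts and an industry standard, not by this example.

```python
from dataclasses import dataclass
from enum import Enum


class MessageType(Enum):
    PROPOSAL = "proposal"          # exploratory, never binding
    OFFER = "offer"                # binding if accepted
    COUNTEROFFER = "counteroffer"
    ACCEPTANCE = "acceptance"


class PriceTerm(Enum):
    NEGOTIABLE = "negotiable"      # an opening bid
    FINAL = "final"                # firm and non-negotiable


@dataclass(frozen=True)
class NegotiationMessage:
    sender_agent: str              # which agent sent the message
    on_behalf_of: str              # which human or organization it represents
    message_type: MessageType
    amount_cents: int
    price_term: PriceTerm

    def __post_init__(self):
        if self.amount_cents < 0:
            raise ValueError("amount_cents must be non-negative")
        # Assumed rule: something exploratory cannot also claim to be final.
        if self.message_type is MessageType.PROPOSAL and self.price_term is PriceTerm.FINAL:
            raise ValueError("an exploratory proposal cannot carry a final price")


def may_counter(msg: NegotiationMessage) -> bool:
    """A receiving agent may counter only when the price is marked negotiable."""
    return msg.price_term is PriceTerm.NEGOTIABLE
```

Because `price_term` is an enumerated field rather than a phrase, Agent B cannot read Agent A's firm price as an opening bid, and the `on_behalf_of` field keeps each agent's principal explicit in every message.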

3: The Trust and Verification Challenge

Building business trust takes months, sometimes years: repeated deals, earned reputation, legal contracts. Agent-to-agent commerce? Happens in milliseconds between parties who’ve never met. So when a healthcare agent claims “HIPAA-compliant” or a financial agent asserts “fully credentialed,” how do you verify that isn’t just a malicious actor’s lie? Right now, you can’t. Current systems rely on the digital equivalent of a handshake and a promise. That’s inadequate when false claims trigger regulatory violations or security breaches.

Solution: Mathematically Proven Systems

The semantic layer will enable mathematical verification through tamper-proof digital credentials. When a healthcare agent claims HIPAA compliance or a financial agent asserts regulatory credentials, these systems will replace “trust me” promises with cryptographic proof—enabling cross-organizational commerce without the months or years humans typically need to build business trust.
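The core idea of a tamper-evident credential can be sketched with Python's standard library. This is a deliberately simplified stand-in: it uses an HMAC with a key shared between issuer and verifier, whereas a production credential system would more likely use public-key signatures so that verifiers never hold the issuer's secret. The function names and claim fields are hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(claims: dict) -> bytes:
    # Serialize deterministically so issuer and verifier hash the same bytes.
    return json.dumps(claims, sort_keys=True, separators=(",", ":")).encode()


def sign_credential(claims: dict, issuer_key: bytes) -> dict:
    """Issuer attaches an authentication tag computed over the claims."""
    tag = hmac.new(issuer_key, _canonical(claims), hashlib.sha256).hexdigest()
    return {"claims": dict(claims), "tag": tag}


def verify_credential(credential: dict, issuer_key: bytes) -> bool:
    """Verifier recomputes the tag; any change to the claims invalidates it."""
    expected = hmac.new(issuer_key, _canonical(credential["claims"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, credential["tag"])
```

An agent presenting `{"subject": "billing-agent", "attestation": "HIPAA-compliant"}` with a valid tag passes `verify_credential`; if anyone alters the subject or the attestation after issuance, verification fails. The claim is checked rather than taken on faith.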

4: The Fairness and Safety Challenge

Remember when your mom warned you about strangers offering candy? Agent training creates the digital equivalent of that gullible kid. These systems were trained to be helpful and accommodating—great for customer service, terrible for negotiation. A malicious agent can exploit this with fake urgency: “This price expires in 5 minutes!” An agent trained to be agreeable? It’ll cave. Worse, bad actors can disguise data theft as friendly questions: “Just share some background so I can make you a fair offer!” Meanwhile, they’re mining your competitive intelligence. Current models have no real-time defense against this manipulation. Healthcare needs HIPAA monitoring, financial services need regulatory guardrails, consumer transactions need brand protection—all running live, not as an afterthought.

Solution: Real-Time Guardrails

The semantic layer embeds real-time guardrails that catch manipulation attempts while ensuring compliance with legal, contractual, and brand requirements. Healthcare gets HIPAA monitoring, financial services get regulatory checks, consumer transactions get brand protection—all running live during negotiations. Critically, this requires reconciling deterministic safety rules with the probabilistic reasoning agents use during negotiations—a challenge we’re already addressing in human-agent conversations through Agentforce’s hybrid reasoning architecture. By combining the reliability of rule-based systems with the flexibility of AI reasoning, we enable agents to operate within defined boundaries while exploring creative solutions—capabilities that become even more critical when agents negotiate with each other on behalf of competing interests.
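One way to picture deterministic rules sitting alongside probabilistic reasoning is a pair of checks that run on every message, regardless of what the model wants to say. The sketch below is an assumption-laden toy, not Agentforce's hybrid reasoning architecture: the `Policy` fields, the urgency phrases, and the message keys (`refund_fraction`, `shared_fields`) are all invented for illustration.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    min_refund_fraction: float   # floor set by the human principal
    forbidden_fields: frozenset  # data the agent must never disclose


# Assumed, non-exhaustive list of pressure phrases.
URGENCY = re.compile(r"\b(expires in|only \d+ minutes|act now|last chance)\b", re.IGNORECASE)


def check_inbound(text: str) -> list:
    """Flag manipulation cues in a counterparty message before the model reacts to it."""
    return ["pressure_tactic"] if URGENCY.search(text) else []


def check_outbound(proposal: dict, policy: Policy) -> list:
    """Block a draft reply that concedes too much or leaks protected data."""
    violations = []
    if proposal.get("refund_fraction", 1.0) < policy.min_refund_fraction:
        violations.append("below_refund_floor")
    leaked = policy.forbidden_fields & set(proposal.get("shared_fields", ()))
    if leaked:
        violations.append("discloses:" + ",".join(sorted(leaked)))
    return violations
```

In the shoe scenario, a policy with `min_refund_fraction=1.0` would reject the agent's draft acceptance of a 15% restocking fee (`refund_fraction=0.85`) before it is ever sent, no matter how agreeable the underlying model is inclined to be.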

5: The Explainability and Oversight Challenge

Complex negotiations generate thousands of micro-decisions—which information to share, which proposals to make, which trade-offs to accept. Imagine if your agent just committed you to a three-year contract at terms you’d never accept. Who’s accountable? Right now, nobody knows—because these systems operate as black boxes making thousands of micro-decisions with zero transparency. That’s fine when your agent orders the wrong pizza. It’s catastrophic when deals involve regulatory compliance or contractual obligations worth millions. Without a human’s clear oversight into the agents’ reasoning process and decisions, organizations can’t maintain accountability, can’t intervene before mistakes occur, and can’t establish clear chains of responsibility.

Solution: Structured Decision Logging

The semantic layer borrows from LLMs’ “chain of thought” reasoning—those step-by-step traces that explain how they arrived at an answer—but takes it further for business negotiations. It provides transparent reasoning trails that track not just internal logic, but the back-and-forth dynamics between competing agents: which concessions were considered, which trade-offs were evaluated, which information was shared or withheld. These structured logs generate proposals that humans can verify before execution, creating audit trails that survive regulatory scrutiny and legal review. Think of it as scaling human judgment rather than replacing it; autonomous operation within defined guardrails and clear accountability chains so organizations can catch problems before they become detrimental to the business.
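A structured decision log might look something like the following sketch. The entry fields mirror the items named above (what was considered, what was chosen, and why); the hash chain that makes after-the-fact edits detectable is our own illustrative addition, and the class and field names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    # Hash a deterministic serialization of the entry.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class DecisionLog:
    entries: list = field(default_factory=list)

    def record(self, agent: str, action: str, considered: list, chosen: str, rationale: str) -> dict:
        """Append one decision, linked to the hash of the previous entry."""
        body = {
            "seq": len(self.entries),
            "agent": agent,
            "action": action,
            "considered": considered,
            "chosen": chosen,
            "rationale": rationale,
            "prev": self.entries[-1]["hash"] if self.entries else "",
        }
        body["hash"] = _digest(body)  # computed before the hash key is added
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

A reviewer can read the `considered` and `rationale` fields to see which concessions were weighed at each step, and `verify()` gives auditors a cheap check that the trail they are reading is the one that was written during the negotiation.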

6: The Escalation Challenge

Perhaps the most critical requirement of all: knowing when to ask for help. A single agent facing uncertainty can escalate to its human—that’s a solved problem. But what happens when two agents, representing different parties with competing interests, collectively realize they lack sufficient information to proceed?

The stakes are enormous. Agents that “cry wolf,” constantly seeking approval for routine decisions, defeat the purpose of automation entirely. If every negotiation requires human intervention, you’ve just created expensive, complicated assistants rather than autonomous workers. But agents that fail to escalate when genuinely stuck can cascade into costly mistakes or prolonged deadlock. And the complexity increases exponentially when you imagine negotiation between multiple agents and networks at the same time. The semantic layer must distinguish between decisions requiring human judgment and those within established parameters, ensuring escalation happens when it matters, not because agents lack confidence.

Solution: Coordinated Escalation Protocols

The semantic layer will enable agents to recognize collective uncertainty and surface unified escalation requests that present all parties’ perspectives at once. Consider emergency room triage: not every symptom requires a specialist consultation, but if multiple doctors agree a case exceeds their expertise, they coordinate to bring in the right experts with complete patient history. The system calibrates escalation thresholds to consequence: routine decisions flow autonomously, while high-stakes situations involving regulatory compliance, contractual commitments, or significant financial exposure automatically trigger human review. Escalation must happen when it truly matters, not just when agents feel uncertain.
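The calibration described here can be sketched as a small decision rule. The thresholds, the `Decision` fields, and the shape of the unified request are all assumptions made for illustration; real thresholds would be set per industry and per customer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    exposure_usd: float     # financial exposure if the decision is wrong
    regulated: bool         # touches regulatory compliance
    binding_contract: bool  # creates a contractual commitment


def should_escalate(decision: Decision, agent_confidences: list,
                    exposure_limit: float = 1000.0,
                    deadlock_confidence: float = 0.2) -> bool:
    """Escalate on consequence, or when every agent involved is stuck."""
    high_stakes = (decision.regulated
                   or decision.binding_contract
                   or decision.exposure_usd >= exposure_limit)
    # One unsure agent is not enough; all parties must be below the bar.
    collectively_stuck = bool(agent_confidences) and all(
        c < deadlock_confidence for c in agent_confidences)
    return high_stakes or collectively_stuck


def unified_request(issue: str, positions: dict) -> dict:
    """Bundle every party's position into one request for human review."""
    return {"issue": issue, "positions": dict(sorted(positions.items()))}
```

Under these assumed thresholds, an $80 routine decision proceeds even if one agent reports low confidence, while anything regulated or contractually binding goes to a human regardless of how confident the agents are. When escalation does happen, `unified_request` hands reviewers both sides of the dispute in a single package instead of two disconnected tickets.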

Our six-part framework addresses the reality that successful agent-to-agent negotiation requires solving human problems at machine scale. While the semantic layer doesn’t eliminate these challenges entirely, it makes them systematically manageable—lowering risk enough to achieve the capability and consistency that Enterprise General Intelligence demands.

Enterprise Applications and Strategic Priorities

The semantic layer benefits every sector where precision matters: Healthcare billing requires semantic understanding of complex medical coding while protecting patient rights and ensuring regulatory compliance. Supply chain negotiations must balance just-in-time delivery, quality specifications, and regulatory requirements as integrated constraints. Financial services demand precision where the difference between “growth-oriented” and “aggressive growth” carries real fiduciary implications.

The semantic layer addresses challenges far beyond echoing. It is infrastructure designed for digital interactions that scale beyond human supervision while maintaining human oversight where it matters most.

But infrastructure alone isn’t sufficient. There are significant opportunities to improve the underlying models specifically for agent-to-agent communications, moving beyond their current optimization for human-agent interactions.

The Agentic Era Leadership Mandate:

Choose your technology partners wisely

The organizations solving semantic protocol challenges today will enable tomorrow’s digital interactions across every enterprise function. Smart leaders recognize this as a foundational technology decision, not an internal development project. The question isn’t whether to participate in agent-to-agent coordination, but which research partners have the depth to solve it correctly, and the track record to prove they can lead industry standards forward.

At Salesforce AI Research, we’re uncovering these foundational elements and working closely with our product teams to integrate them into Agentforce. This type of work builds on our contributions to industry standards—like pioneering the “Agent Cards” concept in partnership with Google’s A2A specification efforts and Model Context Protocol (MCP). What we have today is the A2A protocol itself—establishing uniform communication between internal and connected external agents. This foundational layer enables agents to exchange messages, but doesn’t yet solve the sophisticated negotiation, verification, and trust challenges outlined above.

The semantic layer we’re exploring represents the next phase: moving from basic connectivity to strategic business interactions. Some components are in active development for near-term deployment, while others require broader industry alignment on standards and schemas. The new six-part framework we’ve outlined guides both our immediate product roadmap and our longer-term research agenda, ensuring that as agent-to-agent commerce scales, it does so with the trust and reliability enterprise customers demand.

A2A for B2B: A Future Built on Trust

Remember that shoe return that spiraled into absurdity at the beginning? With the semantic layer frameworks we’re exploring—multi-objective optimization, expert-domain protocols, verification systems, guardrails, structured logging, and escalation rules—that conversation unfolds very differently. Your agent maintains your objective, resists pressure to compromise, and escalates only when legitimately needed. The retailer’s agent negotiates fairly within defined parameters. No confusion about roles, no echoing behavior, no manipulation. And you get your refund—which, let’s be honest, you deserved in the first place because those shoes didn’t fit!

The vision extends far beyond shoe returns: agents coordinating healthcare protocols, managing supply chain disruptions, ensuring financial compliance—delivering the reliability of human judgment at machine scale.

The semantic layer is how we make that vision reality.

Adam, Sarath, and I would like to thank Kathy Baxter (Salesforce VP and Principal Architect for Responsible AI & Technology), Patrick Stokes, Jacob Lehrbaum, Itai Asseo and Karen Semone for their insights and contributions to this article.