How Generative AI is Revolutionizing Application Development

Generative AI is revolutionizing app development: large language models can not only generate content, but also orchestrate code building blocks to solve new problems for which no requirements were ever gathered.

We are at an inflection point in the history of application development. Game-changing innovations in AI are transforming the way we build apps, enabling us to create experiences that were previously unimaginable.

This is reminiscent of the shift from mainframe to client/server applications, and later from client/server to internet applications. In both cases, the new technology was so transformative that it led companies to change course, rethink and often rewrite their existing applications, and build a new class of applications that embraced the new possibilities offered by the advance in technology.

Today, we are at a similar inflection point with AI. Generative AI revolutionizes the way we interact with information, and as a result, it changes the way we think about and build applications. This could be the most transformative shift yet in application development.

Indeed, in addition to their ability to generate content, Large Language Models (LLMs) are capable of reasoning and orchestrating tasks. LLMs are already solving problems that used to require custom applications to be built, or that were just not possible to solve with traditional applications.

However, foundation models, such as OpenAI’s GPT-4 and Google’s BERT, may not always have access to the information they need to perform the tasks that were previously handled by traditional applications. They don’t have access to current data or private data that they were not trained on. For example, they can’t return a list of your top sales opportunities, or available flights from Boston to San Francisco tomorrow. In addition, LLMs don’t have the built-in ability to take action. For example, they can’t update an opportunity record in your customer relationship management software, or book a flight on your behalf.

How to augment LLM capabilities

To augment LLMs’ native capabilities, developers can write actions (also known as plugins or tools) that provide LLMs with the additional information they need, and the ability to execute logic. Actions are building blocks that encapsulate granular functionality: update a customer record, book a flight, post on social media, retrieve a list of orders, approve an expense report, etc.
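To make this concrete, here is a minimal Python sketch of what an action might look like. It is not Salesforce's API: the `Action` class, the `list_open_orders` action, and the sample orders are hypothetical. It illustrates one idea: each action pairs a description and parameter list, which the LLM reads, with a handler that does the actual work.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Action:
    """A hypothetical building block that an orchestrating LLM can choose to call."""
    name: str
    description: str             # natural-language summary the model reasons over
    parameters: dict[str, str]   # parameter name -> type name
    handler: Callable[..., Any]  # the code that actually does the work

    def describe(self) -> dict[str, Any]:
        # What the model gets to see: metadata only, never the handler itself.
        return {"name": self.name, "description": self.description,
                "parameters": dict(self.parameters)}

    def run(self, **kwargs: Any) -> Any:
        # Reject calls that omit a declared parameter before touching any system.
        missing = sorted(set(self.parameters) - set(kwargs))
        if missing:
            raise ValueError(f"{self.name}: missing arguments {missing}")
        return self.handler(**kwargs)


# Hypothetical in-memory orders standing in for a real system of record.
ORDERS = [
    {"id": "A-1", "customer": "acme", "status": "open"},
    {"id": "A-2", "customer": "acme", "status": "shipped"},
    {"id": "B-1", "customer": "globex", "status": "open"},
]


def open_orders_for(customer: str) -> list[str]:
    return [o["id"] for o in ORDERS
            if o["customer"] == customer and o["status"] == "open"]


list_open_orders = Action(
    name="list_open_orders",
    description="Return the IDs of a customer's open orders.",
    parameters={"customer": "string"},
    handler=open_orders_for,
)

print(list_open_orders.describe())
print(list_open_orders.run(customer="acme"))  # ['A-1']
```

Keeping the description separate from the handler is the point of the design: the model only needs to know what an action does and what it takes, not how it is implemented.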

In the previous era, developers built applications by gathering requirements and writing business and orchestration logic to implement them. When building with AI, developers instead write building blocks (actions) that encapsulate granular functionality, and LLMs reason about and orchestrate these building blocks to deliver the desired outcome.

The most transformative and disruptive aspect of this new pattern is that it enables LLMs to perform tasks that were not anticipated and for which no requirements were ever gathered. Imagine providing the LLM with dozens, hundreds, or even thousands of building blocks. The LLM could compose them in a virtually infinite number of ways, including in ways never anticipated, enabling it to solve new problems based on new requirements.

This is an exciting time for app development. But to build the next generation of AI applications securely and successfully, you need a platform that is designed specifically for the AI revolution.

Introducing Salesforce’s platform for the AI era

The Salesforce platform is purpose-built for the AI era. It provides you with all the features you need to build and deploy the most innovative AI applications in a trusted way, with the right guardrails and supervision in place. Let’s walk through the key components.

A diagram of the technical architecture of the Salesforce Platform

The Salesforce platform’s data layer, depicted at the bottom of the image above, comprises Salesforce data and heterogeneous data sources brought together by Salesforce Data Cloud and MuleSoft. It provides high-quality data to LLMs, either as part of the prompt or as part of the model’s training dataset.

The platform’s extensive metadata allows LLMs to better understand the context and meaning of the data, which can lead to more accurate responses. For example, an LLM could use metadata to present CRM data to the user in a more useful and actionable way, or to automatically create and update records (with the right user supervision).
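As a rough illustration of why metadata helps, the Python sketch below turns a raw record into labeled lines before it goes into a prompt, so the model sees "Stage: Negotiation (current step in the sales process)" rather than an opaque field name and value. `Amount`, `StageName`, and `CloseDate` are standard Opportunity field names, but the `describe_record` helper and the label and description text are assumptions for this example, not how the platform actually serializes metadata.

```python
# Illustrative field metadata for an opportunity record: API name -> (label, description).
# The labels and descriptions are assumptions made up for this sketch.
OPPORTUNITY_FIELDS = {
    "Amount": ("Amount", "expected revenue in USD"),
    "StageName": ("Stage", "current step in the sales process"),
    "CloseDate": ("Close Date", "date the deal is expected to close"),
}


def describe_record(record: dict, field_metadata: dict) -> str:
    """Render raw field values as labeled, self-explanatory lines for a prompt."""
    lines = []
    for api_name, value in record.items():
        # Fall back to the raw API name when a field has no metadata.
        label, description = field_metadata.get(api_name, (api_name, ""))
        suffix = f" ({description})" if description else ""
        lines.append(f"- {label}: {value}{suffix}")
    return "\n".join(lines)


record = {"Amount": 50000, "StageName": "Negotiation", "CloseDate": "2024-06-30"}
print(describe_record(record, OPPORTUNITY_FIELDS))
```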

The vector database can supplement the use of LLMs when building AI applications. You’ll be able to use it to store information not included in the LLM training datasets, including up-to-date company data and documents. Information is stored as vector embeddings to support semantic search. Data retrieved from the vector database can be used to provide additional context to the LLM, leading to more accurate responses.
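The retrieval step can be sketched in a few lines of Python. This is a toy under stated assumptions: the `InMemoryVectorStore` class is hypothetical, the three-dimensional "embeddings" are hand-made rather than produced by an embedding model, and ranking uses plain cosine similarity. It shows the pattern the paragraph describes: find the stored chunks closest in meaning to the question, then place them in the prompt as context.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Toy stand-in for a vector database: stores (text, embedding) pairs."""

    def __init__(self) -> None:
        self._items: list[tuple[str, list[float]]] = []

    def add(self, text: str, embedding: list[float]) -> None:
        self._items.append((text, embedding))

    def search(self, query: list[float], k: int = 2) -> list[str]:
        # Rank every stored chunk by semantic closeness to the query vector.
        ranked = sorted(self._items,
                        key=lambda item: cosine_similarity(query, item[1]),
                        reverse=True)
        return [text for text, _ in ranked[:k]]


# Hand-made "embeddings"; a real system would get these from an embedding model.
store = InMemoryVectorStore()
store.add("Refunds are available within 30 days of purchase.", [0.9, 0.1, 0.0])
store.add("The office is open from 9am to 5pm.", [0.0, 0.2, 0.9])
store.add("Returned items must include the original receipt.", [0.8, 0.3, 0.1])

question = "Can I get my money back?"
question_embedding = [1.0, 0.2, 0.0]  # assumed embedding for the question

context = store.search(question_embedding, k=2)
prompt = "Answer using only this context:\n" + "\n".join(f"- {c}" for c in context)
prompt += f"\n\nQuestion: {question}"
print(prompt)
```

Note that the question shares no keywords with the refund chunk; it is retrieved because its vector points in a similar direction, which is what "semantic search" means here.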

The Salesforce Platform also provides a configurable foundation model architecture that allows you to easily plug in and compose AI models. In many cases, a hosted foundation model provides you with the fastest path to AI innovation, but you can also use fine-tuned foundation models or your own models.
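One common way to get this kind of pluggability is to code against a small model interface and look models up by name. The Python sketch below assumes that design; `TextModel`, `ModelRegistry`, `Chain`, and the two stub models are hypothetical and make no real API calls.

```python
from typing import Protocol


class TextModel(Protocol):
    """Anything with a generate() method can be plugged in."""
    def generate(self, prompt: str) -> str: ...


class HostedModelStub:
    # Stand-in for a provider-hosted foundation model (no network call here).
    def generate(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


class CustomModelStub:
    # Stand-in for a fine-tuned or bring-your-own model.
    def generate(self, prompt: str) -> str:
        return f"[custom] {prompt}"


class Chain:
    """Composes models: each model's output becomes the next model's prompt."""
    def __init__(self, *models: TextModel) -> None:
        self._models = models

    def generate(self, prompt: str) -> str:
        for model in self._models:
            prompt = model.generate(prompt)
        return prompt


class ModelRegistry:
    def __init__(self) -> None:
        self._models: dict[str, TextModel] = {}

    def register(self, name: str, model: TextModel) -> None:
        self._models[name] = model

    def get(self, name: str) -> TextModel:
        if name not in self._models:
            raise KeyError(f"no model registered as '{name}'")
        return self._models[name]


registry = ModelRegistry()
registry.register("default", HostedModelStub())
registry.register("support-tuned", CustomModelStub())
registry.register("chained", Chain(CustomModelStub(), HostedModelStub()))

# Application code asks for a model by name, so swapping models is a configuration change.
for name in ("default", "support-tuned", "chained"):
    print(registry.get(name).generate("Summarize case 42"))
```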

The Einstein Trust Layer lets you use LLMs in a trusted way, without compromising your company data. Here’s how it works:

- Secure gateway: The Salesforce platform accesses models through a secure gateway that enforces security and privacy policies consistently across different model providers.

- Data masking and compliance: Before the request is sent to the model provider, it goes through a number of steps, including data masking, which replaces personally identifiable information (PII) with fake data to ensure data privacy and compliance.

- Zero retention: To further protect your data, Salesforce has zero retention agreements with model providers, which means providers will not persist data sent from Salesforce or use it to further train their models.

- Demasking, toxicity detection, and audit trail: When the output is received from the model, it goes through another series of steps, including demasking, toxicity detection, and audit trail logging. Demasking restores the real data that was replaced by fake data for privacy. Toxicity detection checks for any harmful or offensive content in the output. Audit trail logging records the entire process for auditing purposes.
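The request and response flow described in the list can be sketched as a small pipeline. This is a simplified illustration under stated assumptions, not the Einstein Trust Layer's implementation: it masks only email addresses, uses placeholder tokens rather than fake values, flags toxicity with a hypothetical word list, and keeps the audit trail in memory. `trusted_call`, `stub_model`, and the other names are hypothetical.

```python
import re
from datetime import datetime, timezone

# Simplification: only email addresses are treated as PII here.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
BLOCKLIST = {"idiot", "stupid"}  # hypothetical toxicity word list
audit_log: list[dict] = []


def mask(text: str) -> tuple[str, dict[str, str]]:
    """Replace each email with a placeholder token and remember the mapping."""
    mapping: dict[str, str] = {}

    def to_token(match: re.Match) -> str:
        token = f"<EMAIL_{len(mapping)}>"
        mapping[token] = match.group(0)
        return token

    return EMAIL.sub(to_token, text), mapping


def demask(text: str, mapping: dict[str, str]) -> str:
    # Restore the real values the model never saw.
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


def is_toxic(text: str) -> bool:
    return any(word in BLOCKLIST for word in re.findall(r"[a-z']+", text.lower()))


def stub_model(prompt: str) -> str:
    # Stand-in for a third-party LLM; it only ever receives masked text.
    return f"Sure. Here is a follow-up note for {prompt.split()[-1]}."


def trusted_call(prompt: str, model=stub_model) -> str:
    masked_prompt, mapping = mask(prompt)
    output = demask(model(masked_prompt), mapping)
    toxic = is_toxic(output)
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "sent_to_model": masked_prompt,
        "toxic": toxic,
    })
    if toxic:
        raise ValueError("model output failed the toxicity check")
    return output


print(trusted_call("Draft a follow-up email to jane.doe@example.com"))
print(audit_log[-1]["sent_to_model"])
```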

AI Orchestration is a critical component of this new stack and the engine that powers the new class of AI applications. Given a user request, the planner (or AI orchestrator) is able to orchestrate granular tasks provided as actions (also known as plugins) to deliver the desired outcome. In other words, it creates a plan describing how to best compose the available actions to complete the request.

Actions are building blocks, written by developers, that encapsulate granular functionality. They augment the native capabilities of an LLM: for example, actions can access up-to-date data, perform computational logic, and take action in external systems. Given a set of actions, the planner determines which ones to use, and in what order, to complete the user request. Actions can be provided in the form of:

- APIs

- Invocable Apex

- Flow

- Prompt templates

The planner can combine these actions in an almost limitless number of ways, including new and unexpected ways, to solve new problems with new requirements.
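In a real copilot, the planner is an LLM reasoning over each action's description. To show the composition idea without a model, the Python sketch below swaps in a much simpler technique: each hypothetical action declares the kind of input it consumes and the kind of output it produces, and a breadth-first search chains them into a plan. The `TypedAction`, `plan`, and `execute` names and the sample actions are assumptions for illustration only.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class TypedAction:
    name: str
    consumes: str  # kind of input the action needs
    produces: str  # kind of output it returns
    run: Callable[[str], str]


def plan(actions: list[TypedAction], start: str, goal: str) -> Optional[list[TypedAction]]:
    """Breadth-first search for the shortest chain of actions from `start` to `goal`."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        current, path = queue.popleft()
        if current == goal:
            return path
        for action in actions:
            if action.consumes == current and action.produces not in seen:
                seen.add(action.produces)
                queue.append((action.produces, path + [action]))
    return None  # no combination of actions reaches the goal


def execute(path: list[TypedAction], value: str) -> str:
    # Feed each action's output into the next one.
    for action in path:
        value = action.run(value)
    return value


# Hypothetical actions; none was written with this particular request in mind.
ACTIONS = [
    TypedAction("get_forecast", "city", "forecast", lambda city: f"Sunny in {city}"),
    TypedAction("draft_email", "opportunity_id", "email_draft",
                lambda opp: f"Draft email about {opp}"),
    TypedAction("lookup_account", "company_name", "account_id",
                lambda name: f"ACC-{name.upper()}"),
    TypedAction("top_opportunity", "account_id", "opportunity_id",
                lambda acc: f"{acc}/OPP-1"),
]

steps = plan(ACTIONS, start="company_name", goal="email_draft")
print([a.name for a in steps])  # ['lookup_account', 'top_opportunity', 'draft_email']
print(execute(steps, "acme"))   # Draft email about ACC-ACME/OPP-1
```

The actions are listed in no particular order and include one that is irrelevant to the request, yet the search still assembles a valid sequence that nobody wrote explicitly.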

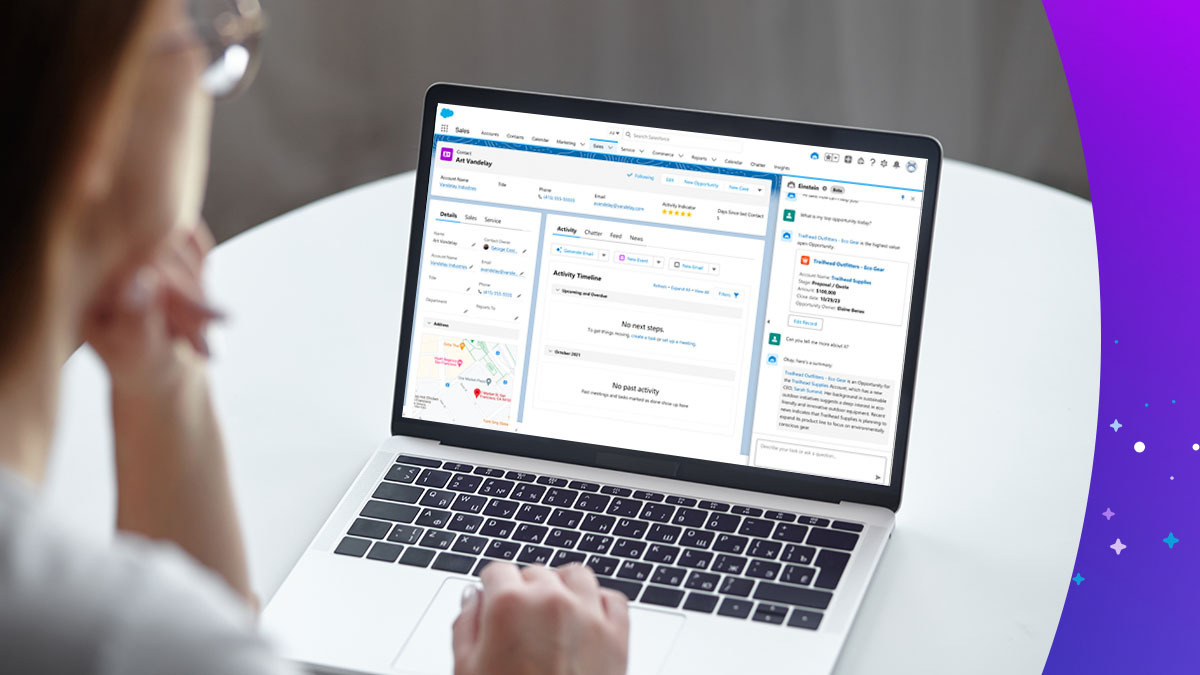

Copilots are the AI applications. You will be able to deploy copilots to different containers: in existing Salesforce applications (Lightning and Salesforce Mobile), in standalone web applications, in Slack, or as bots on Salesforce or other bot platforms. Copilots typically use a conversational interface, but using metadata, they can also present information in more visual or more actionable ways when that adds to the text representation, for example as data visualizations, reports, or actionable data grids.

What tools does the Salesforce Platform offer?

The platform provides a combination of low-code and code tools to build this new class of AI applications.

Einstein Copilot Studio is a suite of tools that make it easy to build and configure copilots: it includes Prompt Builder, Action Builder, and Model Builder.

Prompt Builder is a new Salesforce builder that facilitates the creation of prompts. It allows you to create prompt templates in a graphical environment, and ground them with dynamic data made available through record page data, Data Cloud, API calls, flows, and Apex.

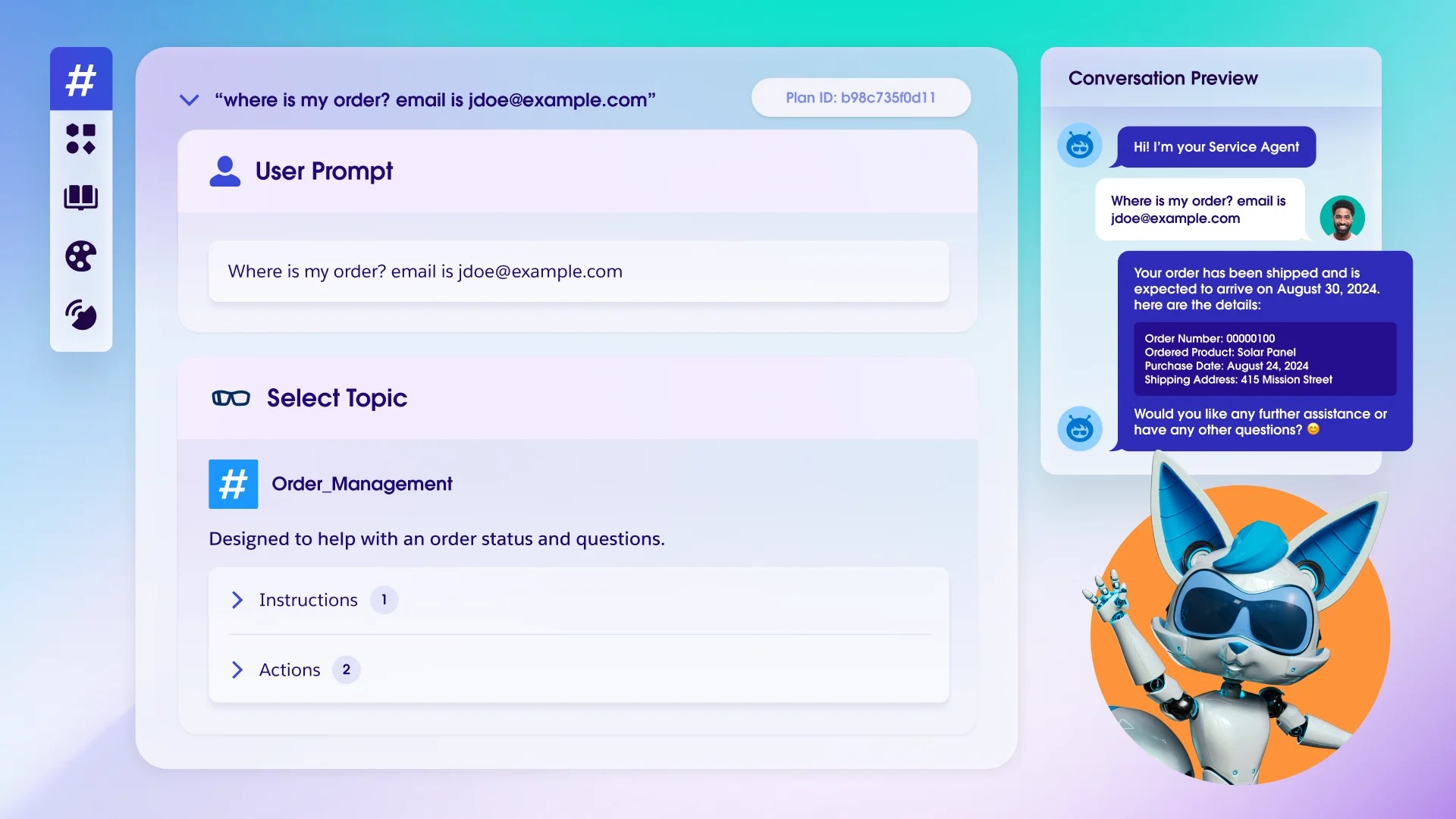

Salesforce Prompt Builder
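Conceptually, grounding a prompt template means filling placeholders with data fetched at run time. The Python sketch below shows that idea with a hypothetical `{{Object.Field}}` merge-field syntax and an in-memory dictionary; it is not Prompt Builder's syntax or API, and `render`, `lookup`, and the sample data are assumptions.

```python
import re

# Hypothetical merge-field syntax: {{Object.Field}} (not Prompt Builder's syntax).
MERGE_FIELD = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def lookup(path: str, data: dict) -> object:
    value = data
    for part in path.split("."):
        value = value[part]  # KeyError or TypeError if the path does not exist
    return value


def render(template: str, data: dict) -> str:
    """Ground a prompt template by replacing each merge field with live data."""
    def fill(match: re.Match) -> str:
        path = match.group(1)
        try:
            return str(lookup(path, data))
        except (KeyError, TypeError):
            raise KeyError(f"no data for merge field '{path}'") from None
    return MERGE_FIELD.sub(fill, template)


template = ("Write a friendly check-in email to {{Account.Name}}, a {{Account.Industry}} "
            "customer with {{Orders.OpenCount}} open orders.")

# In practice this data might come from record page data, Data Cloud, an API call, a flow, or Apex.
data = {"Account": {"Name": "Acme", "Industry": "Retail"}, "Orders": {"OpenCount": 2}}

print(render(template, data))
```

Failing loudly on a missing merge field is a deliberate choice in this sketch: silently sending an unfilled placeholder to the model would weaken the grounding.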

Action Builder will allow you to create and configure copilots. You can select the models, prompts and actions your copilot will use. You can try your copilot in a playground and deploy it to one of the supported targets.

Salesforce Action Builder

Model Builder will allow you to configure your own AI models.

Einstein for Developers is a suite of AI-powered tools that help developers code more efficiently and accurately, analyze code, and more. It can be used to build actions for new AI applications or to build traditional applications, with AI assistance in both cases.

Einstein for Developers

The TL;DR on AI for app development

Generative AI is revolutionizing application development. In addition to their ability to generate content, LLMs are capable of reasoning and orchestrating tasks. To augment large language models’ native capabilities, developers can write actions that provide LLMs with the contextual information they need, and the ability to execute logic.

The most transformative and disruptive aspect of this new pattern is that it enables LLMs to perform tasks that were not anticipated and for which no requirements were ever gathered. By composing actions in countless different and unexpected ways, LLMs can adapt to new situations and solve new problems without writing new code. The Salesforce platform is the platform to build this emerging class of applications in the AI era.
