Akash Gokul

Akash Gokul is an Applied Scientist at Salesforce AI Research. He has been working on LLMs for coding, LLM evaluations, and multimodal models.

Large language model (LLM)-based software engineering (SWE-) agents have recently demonstrated remarkable progress on realistic software engineering tasks such as code review, bug fixing, and repository-level reasoning. Most SWE-agents start from a fresh…

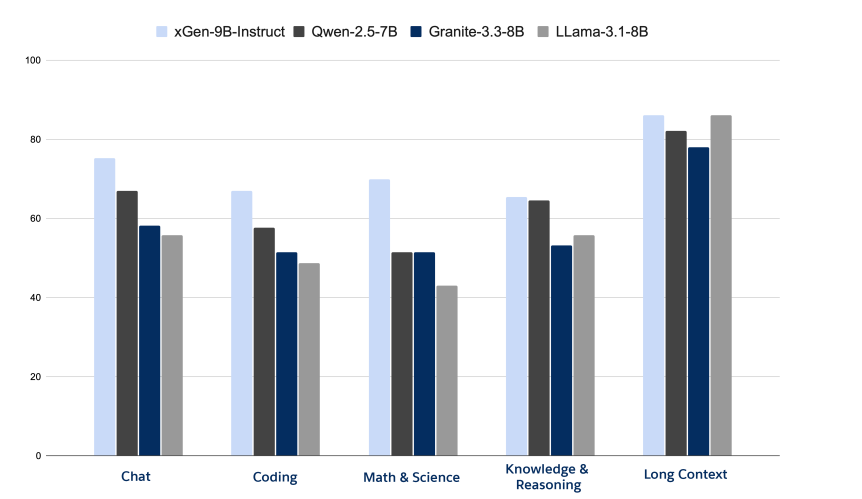

xGen-small is an enterprise-ready compact LM that combines domain-focused data curation, scalable pre-training, length extension, instruction fine-tuning, and reinforcement learning to deliver Enterprise AI with long-context performance at a predictable, low cost.

BootPIG: Bootstrapping Zero-shot Personalized Image Generation Capabilities in Pretrained Diffusion Models

We present a novel architecture, BootPIG, that enables personalized image generation without any test-time fine-tuning.

TL;DR: With CodeChain, a pretrained large language model (LLM) can solve challenging coding problems by integrating modularity into its generated samples, and can self-improve by employing a chain of self-revisions on representative sub-modules. CodeChain can…

TL;DR: Text-to-image diffusion models are very adept at generating novel images from a text prompt, but current adaptations of these methods to image editing suffer from a lack of consistency and faithfulness to…