AI agents can do just about anything, but if you’re particular about how they do it, you might run into one of the thorniest obstacles in enterprise AI. AI agents are stochastic, which is a fancy way of saying that some randomness is part of the package. They don’t always do the same thing the same way, whether it’s the phrasing of a response or the order of a complex workflow. Sometimes, that randomness is good. It’s what allows an AI customer service agent, for example, to deftly mimic the flow of human conversation.

But what happens when you have a critical workflow that needs to follow the same sequence and deliver the same result every time? That’s where stochastic AI quickly begins to feel more like a bug than a feature. Sure, you can tune and retune your prompts and pile on instructions full of “always” and “only” conditionals, but each line adds complexity and performance overhead. And even if the agent follows these instructions 95% of the time, a 5% failure rate for something like order returns is a dealbreaker for most companies.

For agents to be viable, production-grade tools, users need a creativity toggle — a way to take advantage of generative AI’s stochastic elements where they add value, but also to define deterministic logic when reliability and predictability are more important. The good news? A new agent scripting language is poised to tackle this challenge. But before we dive into what’s new, let’s take a look under the hood at how Agentforce’s Atlas Reasoning Engine works.

An opinionated agent graph

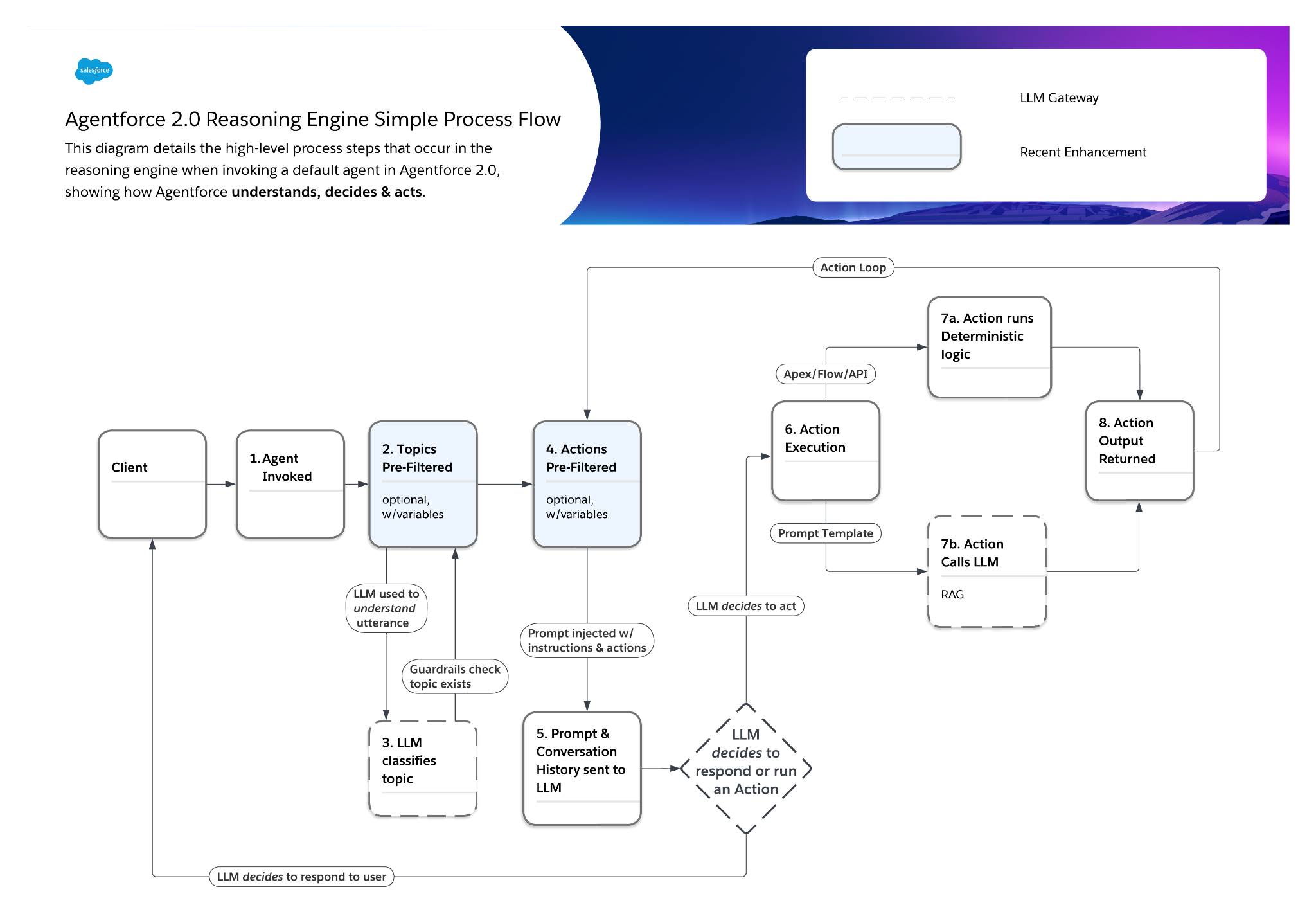

The Atlas Reasoning Engine is a ReAct-style planner that powers Agentforce. It is the core that has underpinned every agentic experience we’ve shipped to date.

As the diagram above shows, Atlas orchestrates workflows using a locked-down graph. Think of it like a Lego set: while it’s yours to build, the pieces are designed to come together in a predetermined way, and the finished product should look like the picture on the box. This type of “opinionated” graph offers teams a helpful jumpstart and works well across a wide range of use cases, but it can also be restrictive when it comes to complex workflows that need more granular control.

When a user initiates a request (e.g., “I have a problem with my order”), the first thing Atlas does is perform some initial topic filtering based on agent variables (one of the few customizable steps in the workflow). From there, the filtered list of topics gets sent to the LLM, which identifies the topic that most closely matches the user’s intent. This is the “focus topic,” which then guides the rest of the conversation.

Within each focus topic is a list of actions that also need to be filtered. Similar to the previous step, a static filtration rule based on agent variables can be applied before Atlas makes another LLM call to determine the next step. If the LLM returns an action (e.g., “query the order”), that action is executed and the output is integrated into the conversation history. The system then re-evaluates and kicks off the process again, continually asking the LLM for the next step until it has enough information to generate a response for the user or run an action on their behalf.
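To make that flow concrete, here is a minimal Python sketch of the loop described above. It is not Atlas's actual implementation: the `Topic` and `Action` classes, `run_turn`, and the stubbed `fake_choose_topic` and `fake_choose_next` functions are all hypothetical names, and the "LLM" is replaced by hard-coded stand-ins so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, List


def always(variables: dict) -> bool:
    """Default static filter: the topic or action is always available."""
    return True


@dataclass
class Action:
    name: str
    run: Callable[[dict], str]
    available: Callable[[dict], bool] = always  # static rule on agent variables


@dataclass
class Topic:
    name: str
    actions: List[Action]
    available: Callable[[dict], bool] = always  # static rule on agent variables


def run_turn(utterance, topics, variables, choose_topic, choose_next, max_steps=5):
    history = [f"user: {utterance}"]
    llm_calls = 0

    # 1. Static topic filtering based on agent variables (no LLM involved).
    candidates = [t for t in topics if t.available(variables)]

    # 2. One LLM call picks the focus topic from the filtered list.
    focus = choose_topic(history, candidates)
    llm_calls += 1

    # 3. Static action filtering inside the focus topic.
    actions = {a.name: a for a in focus.actions if a.available(variables)}

    # 4. Keep asking the LLM for the next step until it answers the user.
    for _ in range(max_steps):
        decision = choose_next(history, sorted(actions))
        llm_calls += 1
        if decision not in actions:
            # Anything that is not an action name is treated as the final reply.
            history.append(f"agent: {decision}")
            return decision, llm_calls
        output = actions[decision].run(variables)
        history.append(f"action {decision}: {output}")
    return "Sorry, I could not complete that request.", llm_calls


# Hard-coded stand-ins for the two LLM decisions (assumptions for the demo).
def fake_choose_topic(history, candidates):
    return next(t for t in candidates if t.name == "order_support")


def fake_choose_next(history, action_names):
    if not any(line.startswith("action query_order") for line in history):
        return "query_order"
    return "Your order ships tomorrow."


topics = [
    Topic("order_support", [Action("query_order", lambda v: "status=shipping_tomorrow")]),
    Topic("billing", [], available=lambda v: v.get("is_verified", False)),
]
reply, llm_calls = run_turn(
    "I have a problem with my order", topics, {"is_verified": False},
    fake_choose_topic, fake_choose_next,
)
print(reply, llm_calls)
```

In this toy run, a request that needs a single action still costs three model calls: one to pick the topic, one to pick the action, and one to produce the reply.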

This model works well across many use cases, but it’s not without its shortcomings. For one, the need for multiple LLM calls to determine the next step creates considerable latency. These calls could be whittled down in many instances without sacrificing output quality, but there’s no way to configure that in the current graph.

Additionally, users today can’t change how topic classification works or insert logic between classification and topic execution. Nor can they add logic to deterministically call one action after another. This is where prompt tuning often comes in as a stopgap, introducing even more complexity and latency as users attempt to corral agents into behaving a certain way. But this workaround is neither reliable nor performant.

So what if instead, we simply cracked open the agent graph and allowed users to build that logic directly into the agent’s configuration?

Flipping the script

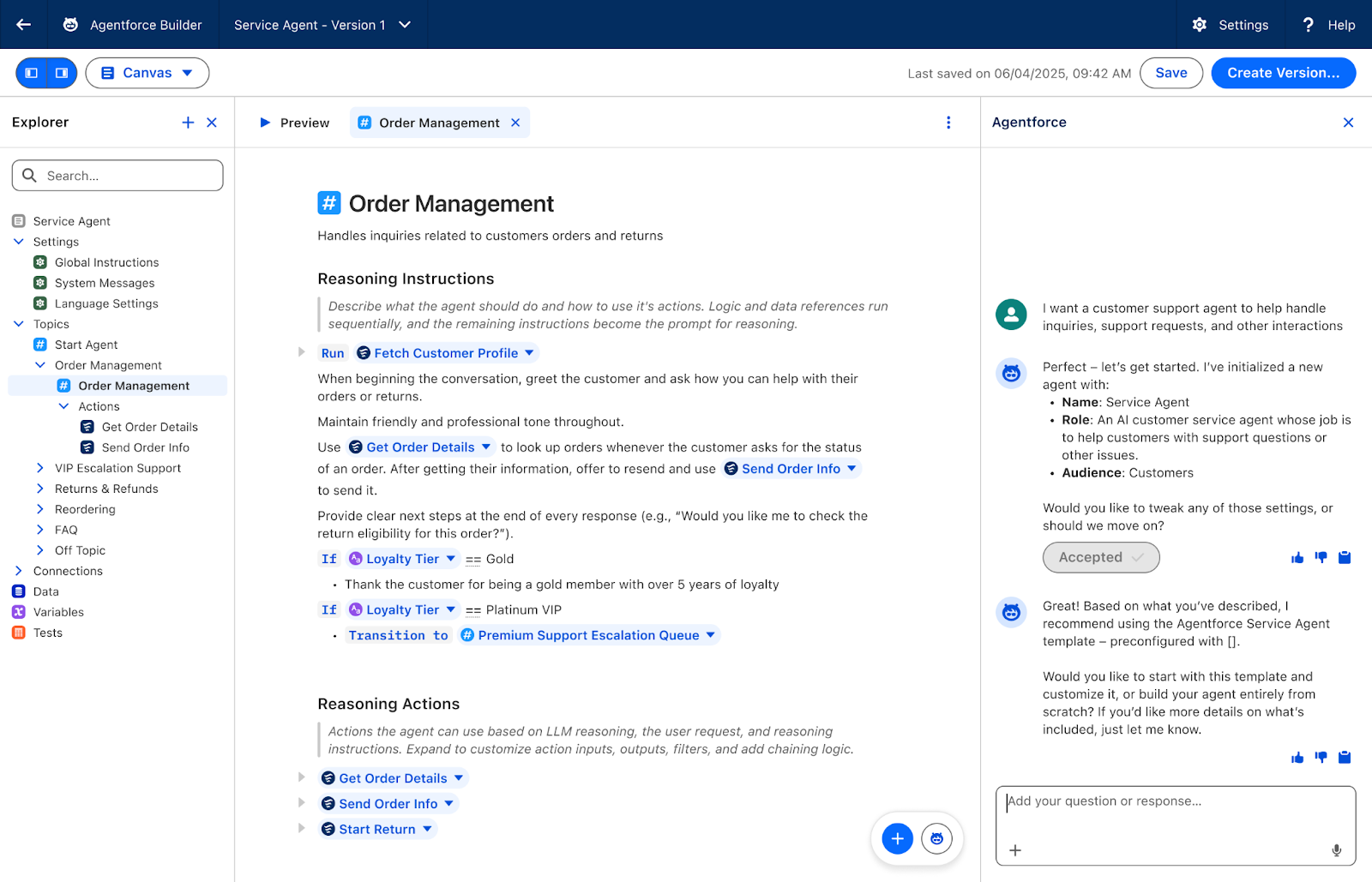

With the next release of Agentforce, we’re introducing a brand new reasoner with a fully configurable graph. Virtually every graph element can be modified and moved around using a new expression scripting language called Agent Script that powers every agent in the new Agentforce Builder. While the reasoner is overflowing with new capabilities — from a more advanced reasoning loop, to reasoning against multiple topics in a single request — the core innovation is the ability to steer your agents deterministically while retaining 100% of their agentic intelligence and creativity.

To do this, Agent Script gives users the ability to directly reference actions and variables within agent instructions, including “if/then” conditionals. This allows agents to dynamically tailor their outputs based on criteria like customer loyalty tier, or even variables collected and populated through actions. All of this can be easily configured in the new Agentforce Builder, with several AI-assisted features designed to help teams hit the ground running on day 1.
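The idea can be illustrated in plain Python. To be clear, this is not Agent Script syntax, which this post does not show; it is a sketch of the underlying pattern, and `ACCOUNTS`, `lookup_account`, and `build_instructions` are hypothetical names. An action runs unconditionally, it populates a variable, and an if/then branch on that variable decides which instruction the model receives.

```python
from dataclasses import dataclass, field

# Hypothetical account data standing in for a real lookup action.
ACCOUNTS = {"c-1": "gold", "c-2": "standard"}


@dataclass
class AgentState:
    variables: dict = field(default_factory=dict)
    trace: list = field(default_factory=list)  # records which actions ran


def lookup_account(state: AgentState) -> None:
    """Action that populates a variable later steps can branch on."""
    customer_id = state.variables["customer_id"]
    state.variables["loyalty_tier"] = ACCOUNTS[customer_id]
    state.trace.append("lookup_account")


def build_instructions(state: AgentState) -> str:
    # Deterministic step: the lookup always runs first, with no LLM decision.
    lookup_account(state)

    lines = ["Help the customer with their return."]
    # Deterministic branch: the variable, not the model, picks the instruction.
    if state.variables["loyalty_tier"] == "gold":
        lines.append("Offer free expedited return shipping.")
    else:
        lines.append("Explain the standard return shipping fee.")
    return "\n".join(lines)


gold = AgentState(variables={"customer_id": "c-1"})
standard = AgentState(variables={"customer_id": "c-2"})
print(build_instructions(gold))
print(build_instructions(standard))
```

The model would still phrase the final reply in its own words, but which branch it is asked to follow, and the fact that the lookup ran first, no longer depends on how the prompt was interpreted.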

Simply type your instructions in natural language into the new Canvas editor (e.g., “Always run account lookup immediately”) and the system will “lint” the input and offer to convert it into Agent Script. You can quickly toggle between the Canvas editor and a more technical Script editor to see the actual syntax in action, and you can make edits in both places.

If you get stuck, you now have contextual AI help with Agentforce as your assistant, with full visibility into your Canvas to answer questions, make suggestions and even help with debugging.

Agent Script also adds new hooks that allow you to run actions, set variables and gather context before the reasoning loop starts. For example, you might use this to check whether a request is coming in during business hours and if not, tell the agent to switch to a different topic.
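As a rough Python illustration of the business-hours example, the sketch below runs a check before any reasoning happens and redirects the conversation when the request arrives after hours. The hook name `before_reasoning`, the 9:00 to 17:00 weekday window, and the `after_hours_support` topic are assumptions made for the demo, not part of Agent Script.

```python
from datetime import datetime, time

# Assumed business hours for the demo: weekdays, 09:00 to 17:00.
BUSINESS_OPEN = time(9, 0)
BUSINESS_CLOSE = time(17, 0)


def before_reasoning(context: dict, now: datetime) -> dict:
    """Hypothetical hook that runs before the reasoning loop starts."""
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    in_hours = is_weekday and BUSINESS_OPEN <= now.time() < BUSINESS_CLOSE

    # Set a variable the rest of the conversation can reference.
    context["in_business_hours"] = in_hours

    # Deterministically switch topics when the request arrives after hours.
    if not in_hours:
        context["topic"] = "after_hours_support"
    return context


daytime = before_reasoning({"topic": "order_support"}, datetime(2024, 1, 8, 10, 0))
late = before_reasoning({"topic": "order_support"}, datetime(2024, 1, 8, 22, 0))
print(daytime["topic"], late["topic"])
```

Because the check is ordinary code rather than an instruction in a prompt, the topic switch happens every time the condition is met.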

Once you’re done scripting your agent, your configuration is compiled down into the new graph-based runtime, generating topics, actions and additional metadata. This is ultimately what’s executed by the reasoning engine.
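The real compiler and runtime format are not described here, so the following is only a toy Python sketch of what "compiling a configuration into a graph" can mean in general. The config shape, the node naming scheme, and `compile_agent` are all assumptions: a declarative description goes in, and a graph of nodes plus some metadata comes out.

```python
def compile_agent(config: dict):
    """Turn a declarative config into (graph, metadata).

    The graph maps each node name to the list of nodes that can follow it.
    Actions listed under a topic are chained in the order they are written.
    """
    graph = {"start": []}
    metadata = {"topics": [], "actions": []}

    for topic in config["topics"]:
        topic_node = f"topic:{topic['name']}"
        graph["start"].append(topic_node)
        graph[topic_node] = []
        metadata["topics"].append(topic["name"])

        previous = topic_node
        for action in topic["actions"]:
            action_node = f"action:{topic['name']}.{action}"
            graph[previous].append(action_node)  # edge from the prior step
            graph[action_node] = []
            metadata["actions"].append(action)
            previous = action_node

    return graph, metadata


# Hypothetical agent definition used only for this demo.
config = {
    "topics": [
        {"name": "returns", "actions": ["lookup_account", "create_return"]},
    ]
}
graph, metadata = compile_agent(config)
for node, next_nodes in graph.items():
    print(node, "->", next_nodes)
```

In this sketch the order of actions is fixed by the graph's edges, which is the property that makes the sequence repeatable from one run to the next.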

With the combination of Agent Script and the new Agentforce Builder, enterprises for the first time have a deterministic anchor to ensure their agents behave the way they’re expected to. In eliminating the need for constant prompt tuning and empowering users with full control of their graph, agents will rapidly evolve into trusted, reliable partners for even the most mission-critical jobs.
