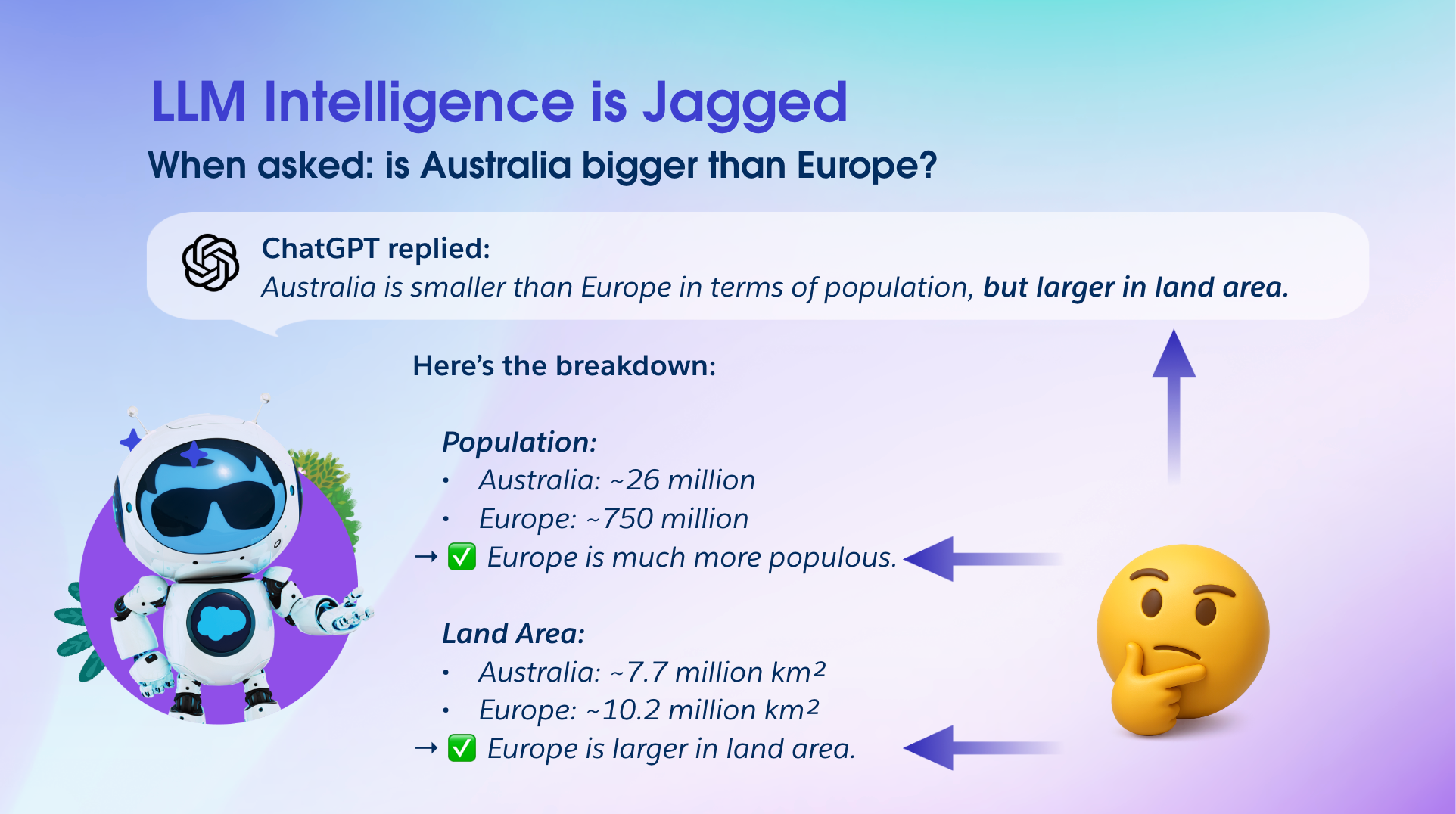

Recently my daughter asked a seemingly simple question over dinner: “Dad, which is bigger, Australia or Europe?”

As any parent today knows, these moments present a choice — attempt an answer from memory or consult the go-to digital authority. As a family, we decided to put ChatGPT to the test.

The response was illuminating in an unexpected way: “Australia is larger in land area,” the AI declared confidently. Then, it provided specific data showing Europe at 10.2 million square kilometers versus Australia’s 7.7 million — numbers that directly contradicted its initial claim.

This wasn’t a minor computational error or a knowledge gap. This was something more fundamental — a window into what researchers call “jagged intelligence,” where AI systems demonstrate remarkable capabilities in complex reasoning while stumbling over seemingly simple tasks. More importantly, it highlighted a critical challenge facing enterprise leaders today: How do you systematically validate AI systems that don’t behave like traditional software?

Deterministic testing in a probabilistic world

The fundamental challenge lies in a mismatch between how we’ve learned to test technology and how modern AI actually works. For decades, software engineering built its reliability foundation on deterministic principles — given identical inputs, you could expect identical outputs. This predictability enabled rigorous testing methodologies: unit tests, integration tests, acceptance criteria, all based on the deep-seated orthodoxy that we can write code, validate outputs, and ship with confidence.

AI agents operate by entirely different principles. They’re probabilistic systems, designed to generate varied responses based on complex pattern recognition and contextual understanding. Give the same prompt to an AI system 10 times, and you might receive ten different responses — some excellent, others adequate, and potentially some that miss the mark entirely. (If interested, you can learn more about this phenomenon here.)

But this isn’t a flaw. It’s a feature. This probabilistic nature enables AI agents to navigate complex, context-dependent scenarios that no programmer could anticipate or explicitly encode — adapting their responses to customer sentiment, business urgency, and situational nuance in real-time. The flexibility that makes AI agents valuable for handling diverse, unpredictable customer interactions also makes them fundamentally challenging to validate using traditional testing approaches.

I was recently discussing this challenge with Walter Harley, our principal AI Research architect and my co-author on this piece. As Walter puts it: “Traditional software is over seventy years old, so we’ve accumulated decades of institutional knowledge about how to test it systematically. We understand the failure modes, the edge and corner cases, the patterns of where bugs hide.”

He continues: “But LLMs are only about three years old as enterprise tools. We’re essentially trying to validate systems using testing intuitions that were built for an entirely different paradigm — and that can be a real problem when businesses are staking their operations on these technologies.”

Walter’s insight hits at the heart of why consumer AI approaches fall short in enterprise contexts. ChatGPT might be perfectly adequate for most consumer use cases—providing movie recommendations, drafting poems, helping with research, or settling family dinner table debates. But when AI agents are handling customer data, processing financial transactions, or representing your brand to millions of customers, the tolerance for “quirks” drops to near zero. The stakes shift from mild inconvenience to potential business catastrophe.

To understand what’s truly at risk when these systems fail, let’s consider how another industry approaches high-stakes AI validation.

Validating when stakes are high: Lessons from autonomous vehicles

Waymo’s approach to autonomous vehicle validation offers a compelling parallel for enterprise AI measurement. Self-driving cars, like AI agents, must perform reliably across countless scenarios, but their validation framework recognizes that not all failures carry equal weight.

Waymo’s research demonstrates impressive safety performance — 88% fewer property damage claims and 92% fewer bodily injury claims compared to human drivers over 25+ million miles. But what’s more relevant here is that their validation approach acknowledges that different types of failures have dramatically different consequences.

At the mildest level are performance variations. Sometimes the car takes a slightly longer route or brakes more conservatively than necessary. The passenger reaches their destination safely, but the experience isn’t optimal. In enterprise AI, this might be analogous to a customer service agent providing a correct but needlessly lengthy response, or a sales agent missing an opportunity to suggest a relevant add-on service.

More concerning are failures that create undesirable outcomes. The car might stop at the wrong address or take a route that adds significant time. Or perhaps they behave overly cautious, for example accelerating much more slowly from an intersection than a typically aggressive driver. The passenger might experience inconvenience or frustration, but no catastrophic harm occurs. For business AI, this could mean providing outdated pricing information, recommending irrelevant products, or failing to escalate a customer concern appropriately. They’re not fatal flaws, but over time these “quirks” would lead to the erosion of trust or customer loyalty.

Most critical are failures that pose genuine danger. When autonomous vehicles stop in the middle of traffic or cause accidents, the consequences become existential. Waymo’s methodical approach to identifying and preventing such scenarios — through millions of miles of testing and continuous safety research — demonstrates the rigorous validation framework required when lives depend on system reliability.

Enterprise AI operates under similarly high stakes, just in different domains. These are the failures that represent existential business risks: AI agents that divulge confidential information, make commitments beyond their authority, or produce harmful content that could damage customer relationships or expose companies to legal liability.

This is why traditional software testing approaches fall short. As Walter explains: “I like to think of it as the ‘Success Rate Trap.’ Enterprises can get fixated on aggregate performance metrics like ‘Our model achieves 97% accuracy on customer service inquiries!’ while completely missing the critical question: what kinds of wrong answers are we getting in that remaining 3%?”

When that small failure rate includes potentially catastrophic business risks, we’re not dealing with minor performance gaps—we need systematic approaches to measuring and validating the AI to mitigate different categories of failure.

So how do we move beyond this Success Rate Trap to build validation frameworks worthy of business-critical AI?

Salesforce’s three-part approach to enterprise AI validation

At Salesforce AI Research, we’ve developed a systematic framework for measuring AI performance that addresses the unique challenges of probabilistic systems operating in business environments. Each of these approaches operates with expert AI researchers firmly at the helm—not simply “in the loop”—ensuring that scientific rigor and domain expertise guides every validation decision.

1. AI-powered judges for evaluation at scale

Enterprise leaders implementing AI at scale need systematic evaluation frameworks that can process thousands of interactions daily. The global AI research community has developed what are commonly known as “judge models”—AI systems specifically designed to evaluate other AI systems’ performance, now used by most industry standard AI leaderboards.

Consider how a Michelin-starred chef evaluates dishes in his restaurant’s kitchen — they don’t just taste and say “good” or “bad,” but explain precisely why: “The seasoning is unbalanced,” “The composition lacks harmony,” or “The presentation feels off-brand for our restaurant.”

That’s exactly what we’ve built with SFR-Judge, a family of AI evaluation or “judge” models that examines thousands of AI responses while explaining its reasoning: why an output might sound off-brand, contain questionable information, or be potentially harmful. Rather than delivering mysterious “black-box” judgments, it’s like having a tireless quality assurance expert providing both the verdict and the “why” behind each decision.

Our team is now advancing this work further, developing judges for more complex tasks such as verifying reasoning capabilities for math, code, and agentic workflows in high-value enterprise use cases.

This approach comes with an important caveat: we’re now using probabilistic systems to evaluate other probabilistic systems. The validation is only as good as our judge models — which is why measuring AI will always require a human to be firmly at the helm.

2. Human expert knowledge extraction

Even with advanced automated evaluation, certain aspects of AI validation demand human expertise that cannot be easily automated. Enterprise best practices require what we term “expert knowledge extraction” frameworks — systematic approaches to capture domain expertise and incorporate it directly into AI training and validation processes.

Rather than simply having administrators configure AI agents through standard interfaces, we’re exploring how seasoned experts across various business domains or sectors — from experienced financial services professionals and healthcare administrators, to sales coaches and customer success managers — can directly influence agent behavior through natural conversation and feedback.

Our collaboration with a healthcare provider institution demonstrates this approach in patient billing support, where expert billing specialists provide nuanced judgment that automated systems cannot replicate. What we learned is that the human-expert layer serves as both training mechanism and validation checkpoint — AI agents seamlessly request guidance from specialists during live patient calls, while these interventions become valuable learning that improves future performance. This hybrid approach reduces patient wait times and specialist workload while maintaining the accuracy and empathy standards essential for healthcare billing.

3. Simulation environments for comprehensive testing

In my recent exploration of synthetic data for enterprise AI training environments, I discussed how AI agents require sophisticated simulation environments to achieve reliable performance — much like F1 drivers training in comprehensive simulators before racing at Monaco. But these training grounds serve a dual purpose: they also provide the testing environments needed for systematic validation.

Our AI Research team has developed CRMArena-Pro, one of the highest fidelity simulation environments for customer service and sales use cases in the industry. This environment generates millions of realistic business scenarios drawn from our deep understanding of enterprise operations — while maintaining Salesforce’s strict privacy standards by using synthetic rather than actual customer data. What sets our approach apart is comprehensive support for voice modalities: simulating lossy phone connections when cell service drops, handling background noise from city buses or subways, and modeling different speaker intonations, languages, and accents.

Because of the probabilistic nature of AI, and because models can’t be broken down and tested unit by unit in the way traditional software can be, AI validation requires orders of magnitude more scenarios. We need systems that can simulate not just standard business interactions, but also edge cases, adversarial inputs, and the countless variations that occur in real-world customer conversations. Our vast libraries of synthetic but realistic interactions stress-test AI agents across countless dimensions, providing the certainty that these systems can handle whatever scenarios might arise in daily operations.

Understanding the reality of AI imperfection

The reality is simple: AI systems aren’t perfect, and their imperfections manifest differently than human failures.

Walter puts it directly: “Any teams deploying agents need to be monitoring their behavior. You really need to understand not only when it breaks, but how exactly it breaks once trained with your data sources.”

When a consumer LLM gets confused about continents, we can laugh it off and look up the answer ourselves. When enterprise AI gets confused about customer data or business rules, the stakes are entirely different. Imagine what would happen if an AI agent provided contradictory loan terms in a single proposal or routing sensitive customer data to unauthorized recipients.

Organizations that deploy AI based on capability demonstrations alone will struggle with these inconsistent results when impressive technology meets unpredictable business reality. But those who recognize these tools as powerful but imperfect — and build appropriate measuring, monitoring and validation frameworks around them — will gain decisive advantages in the AI economy.

What drives our successful framework is establishing clear thresholds for human escalation: when confidence scores drop below defined levels, when agents encounter scenarios outside their training scope, or when business impact exceeds predetermined risk tolerances. These systematic frameworks ensure agents handle routine tasks independently while seamlessly engaging human expertise for high-stakes decisions. Human-AI collaboration at its finest.

We’re not just building better AI agents; we’re developing the methodologies that will define enterprise AI excellence for years to come.

This post is part of our series exploring the components of enterprise AI development. Read our previous post on synthetic data and AI agent training environments, and watch for upcoming deep dives into enterprise data synthesis and advanced training methodologies.

I would like to thank Walter Harley, Jacob Lehrbaum, Patrick Stokes, Itai Asseo and Karen Semone for their insights and contributions to this article.