As a global leader in service management, Salesforce is moving beyond digital support to the physical world. By bridging the gap between enterprise workflows and the real world, we are entering the era of Physical AI, where Agentforce agents can perceive, reason, and take action within our physical spaces to power the Agentic Enterprise.

Today, Salesforce and NVIDIA are expanding their strategic collaboration by demonstrating how to turn autonomous robots into a productive virtual workforce. In our deployment at Salesforce Tower, we have connected Cobalt’s security robots to Agentforce, and are now using NVIDIA’s visual AI to act as the robots’ “eyes.” This allows the robots to see office issues, like a door left open or a security hazard, and automatically trigger a fix through Salesforce, rather than just recording hours of video for a human to review.

Scaling the Agentic Enterprise

For most organizations, physical spaces and digital systems have remained disconnected silos. Historically, human workers have acted as the bridge, manually observing a problem and logging a ticket. This in turn creates a fragmented workflow that results in an average resolution time of over seven minutes for critical security and safety issues.

As we enter the Third Wave of AI and move beyond predictive and generative models into autonomous robotic agents, this collaboration allows companies to finally close that loop. By creating a unified system where robots can “perceive” and AI can “act,” enterprises can use Agentforce to scale digital labor, shift from reactive to proactive operations, and optimize asset management:

- Scale Digital Labor: Move Agentforce beyond the screen to handle physical tasks like security sweeps, hazard detection, and inventory audits.

- Transition from Reactive to Proactive: Instead of waiting for a human to report a safety hazard, an autonomous loop identifies and resolves issues, like a missing fire extinguisher or a blocked exit, before they impact the business.

- Optimize Asset Management: Beyond security, this collaboration fuels better space utilization and reduced labor costs through the auto-generation of incident reports and summaries.

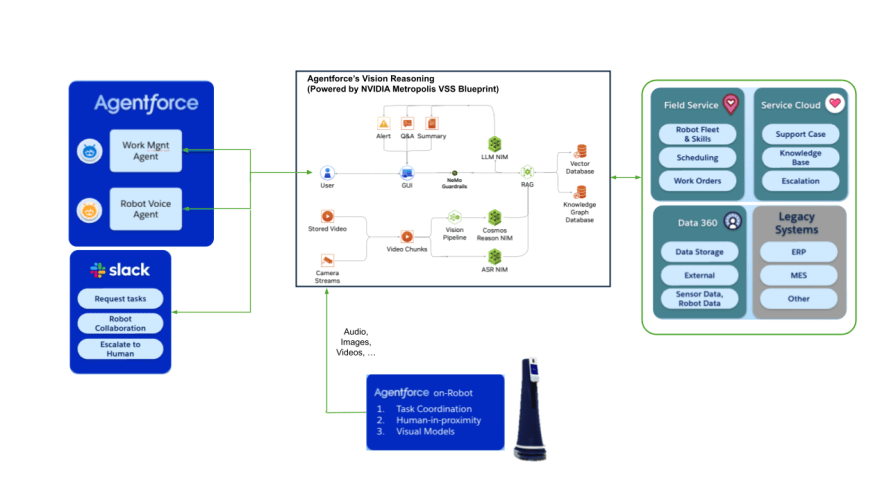

Enhancing Agentforce’s Vision Reasoning with the NVIDIA VSS Blueprint

To be helpful, a robot must first understand its environment. Using the NVIDIA Metropolis Blueprint for video search and summarization (VSS) and NVIDIA Cosmos Reason as the vision language model, Agentforce acts as the “perception engine” for this agentic workforce.

An office robot, like the Cobalt units used in the Salesforce Tower and other buildings, is essentially an AI-powered roving camera. Without AI, the robots generate thousands of hours of unstructured video that a human would have to monitor. By integrating NVIDIA VSS, Agentforce provides developers access to a state-of-the-art VLM that translates video into structured, actionable data in real-time. It filters out the noise and only triggers a signal when it detects a true anomaly—like a coffee spill in a walkway or a secured door left open.
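The filtering step described above can be pictured with a minimal sketch. It assumes the vision language model emits one structured observation per video segment. The `Observation` type, its fields, and the 0.8 confidence threshold are hypothetical and are not part of the NVIDIA VSS Blueprint or Agentforce APIs.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Observation:
    """Hypothetical structured output for one video segment."""
    label: str          # e.g. "coffee spill in walkway"
    is_anomaly: bool    # whether the model flagged the segment as abnormal
    confidence: float   # model confidence between 0.0 and 1.0


def anomalies(observations: Iterable[Observation],
              min_confidence: float = 0.8) -> Iterator[Observation]:
    """Yield only confident anomalies and drop routine footage."""
    for obs in observations:
        if obs.is_anomaly and obs.confidence >= min_confidence:
            yield obs


# Illustrative data only, not real robot output.
feed = [
    Observation("empty hallway", False, 0.99),
    Observation("coffee spill in walkway", True, 0.93),
    Observation("possible open door", True, 0.41),
]
signals = [obs.label for obs in anomalies(feed)]
```

In this sketch only the coffee spill would raise a signal. The low-confidence door observation is dropped, which is one possible way to keep routine footage from generating tickets.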

Agentforce as a video analytics AI agent powered by the NVIDIA VSS Blueprint

Image credit: Salesforce, NVIDIA Corporation, and Cobalt AI

The Business Brain: Agentforce

In the robotics AI stack, Agentforce provides “System 2 and 3” intelligence: high-level work coordination across multiple systems and robots. While NVIDIA and robotics providers supply the “Senses” and “Physical Intelligence” (also known as “System 1”), Agentforce provides the business reasoning.

Currently, this intelligence is live for door security issues at Salesforce Tower:

- Enterprise Context: It knows if the area is a high-priority executive corridor and prioritizes the issue accordingly with the right system and personnel.

- Autonomous Orchestration: It automatically checks shift schedules in Field Service, identifies the technician on duty, and dispatches them via Slack.

- Closing the Loop: The robot arrives to troubleshoot and mitigate the concern, even interacting with employees through voice collaboration. If a door cannot be re-secured, the robot “stands post” just like a human guard until it is relieved, while Agentforce updates maintenance logs in real-time.
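The three steps above can be sketched as a simple decision flow. This is an illustration only: the `DoorEvent` and `Technician` types, the zone name, and the `handle_door_event` function are hypothetical stand-ins, and the sketch does not call any real Agentforce, Field Service, or Slack API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DoorEvent:
    door_id: str
    zone: str


@dataclass
class Technician:
    name: str
    on_duty: bool


# Hypothetical zone names standing in for enterprise context.
HIGH_PRIORITY_ZONES = {"executive-corridor"}


def prioritize(event: DoorEvent) -> str:
    """Enterprise context: raise priority for sensitive areas."""
    return "high" if event.zone in HIGH_PRIORITY_ZONES else "normal"


def find_on_duty(roster: List[Technician]) -> Optional[Technician]:
    """Autonomous orchestration: pick the first technician on shift."""
    return next((tech for tech in roster if tech.on_duty), None)


def handle_door_event(event: DoorEvent, roster: List[Technician],
                      door_resecured: bool) -> List[str]:
    """Return the ordered actions the workflow would take."""
    actions = [f"priority={prioritize(event)}"]
    tech = find_on_duty(roster)
    actions.append(f"dispatch={tech.name}" if tech else "dispatch=none")
    # Closing the loop: the robot stands post if the door stays unsecured.
    actions.append("robot=resolved" if door_resecured else "robot=stand_post")
    actions.append("maintenance_log=updated")
    return actions


roster = [Technician("Ana", False), Technician("Ben", True)]
actions = handle_door_event(DoorEvent("D-12", "executive-corridor"),
                            roster, door_resecured=False)
```

Returning a list of actions rather than performing them keeps the sketch free of side effects, so the decision logic can be checked without any connected systems.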

Coming soon, we are expanding these capabilities into Phase 2: proactive floor audits. This upcoming phase will allow robots to move beyond responding to alarms to proactively identifying safety hazards. Using the NVIDIA VSS pipeline, robots will detect liquid spills, identify missing fire extinguishers, and flag unauthorized materials in secured areas, automatically logging follow-up work orders in Field Service.

Real Outcomes: Efficiency at Salesforce Tower

Salesforce is already utilizing this technology in our own buildings, relentlessly prototyping and testing our own products internally. By integrating Cobalt robots with Agentforce and NVIDIA AI infrastructure, we have seen massive efficiency gains at Salesforce Tower:

- 2x Faster Resolution: Resolution time for door security issues dropped from 3m 38s to just 1m 32s.

- 100x Fewer Human Interventions: Previously, 100% of events required guard intervention. Now, only 1% are escalated to a person.

- Material Guard Time Savings: SF Tower alone saves 40 hours per week, with a global potential of 6,000 hours saved per month, so guards can now focus on even more demanding tasks.
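The headline figures can be checked with simple arithmetic. The sketch below uses only the numbers quoted above. The weekly-to-monthly conversion (52 weeks spread over 12 months) is an assumption added for comparison with the monthly global figure.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a minutes-and-seconds duration to total seconds."""
    return minutes * 60 + seconds


before = to_seconds(3, 38)   # resolution time before: 218 seconds
after = to_seconds(1, 32)    # resolution time after: 92 seconds
speedup = before / after     # roughly 2.4, consistent with "2x faster"

# Share of events needing a guard: 100% before, 1% after.
intervention_reduction = 100 / 1

# Assumption: 52 weeks per year spread evenly over 12 months.
tower_hours_per_month = 40 * 52 / 12   # roughly 173 hours

print(f"speedup: {speedup:.2f}x")
print(f"fewer interventions: {intervention_reduction:.0f}x")
print(f"tower hours saved per month: {tower_hours_per_month:.1f}")
```

Under that assumption, Salesforce Tower accounts for roughly 173 of the 6,000 potential monthly hours.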

Robotforce, Customer Zero for Salesforce Agentforce Robotics Orchestration in the Salesforce Tower

Easy Onboarding: From “Sim” to “Day One” Readiness

The ultimate goal of this collaboration is Generalization—moving from specialized security tasks to robots that are “business savvy” across any industry, from healthcare support to retail inventory audits.

The next phase involves “Sim-to-Real” training. Before a robot sets foot on a physical floor, it can be onboarded in a high-fidelity Digital Twin, a virtual simulation of the real world. By using Agentforce to orchestrate these virtual environments, anyone can train robots, such as the four-legged Boston Dynamics Spot robots in our office, on new skills and edge cases safely and at scale.

In a traditional setup, onboarding a robot requires physical downtime for mapping and manual testing. By utilizing high-fidelity digital twins, we have redefined this process. We can simulate millions of scenarios, from blocked fire exits to intruder alerts, in a physically accurate virtual world, training navigation models safely offline.

NVIDIA Omniverse Robot Onboarding and Mission Control powered by Salesforce Agentforce

We also simulate the workflow logic. Once a robot perfects a task in a simulation, we push that trained model to the physical Cobalt or Spot robot while simultaneously updating the Agentforce logic.

The robot arrives on site not as a blank slate, but as a seasoned employee that recognizes facility-specific hazards and is pre-synced with Salesforce workflows. By becoming a service resource in the Salesforce scheduler, the robot is integrated into the team to work alongside humans more effectively from the moment it powers on.

By bridging the physical and digital worlds, Salesforce’s Agentforce and NVIDIA are ensuring that the future of work isn’t just automated—it’s agentic.

Learn More

- Dive into the details: Read the original release on how Salesforce and NVIDIA are delivering agentic AI capabilities to the enterprise.

- Explore the evolution of AI: See how we are moving from generative AI into the “Third Wave” of autonomous robotic agents.

- See Agentforce in action: Discover how Agentforce empowers every company to build a virtual workforce of agents to handle tasks autonomously.

- Scale your field operations: Learn how Salesforce Field Service connects your workforce, including your robotic ones, to the back office.

- Learn more about Cobalt AI: See how Cobalt AI transforms security, insights, training, and best practices.