The AI Stewardship Imperative: Addressing Key AI Security & Compliance Concerns in Financial Services

In financial services, trust isn’t just a core value; it is the currency we trade in.

We are currently witnessing a pivotal moment in our industry. The pressure to modernize is immense, but so is the opportunity. Recent data suggests over 80% of chief information officers plan to increase AI funding this year [1]. We are also seeing customers move from pilot to production faster than in any previous technology cycle.

What separates the institutions that scale fastest isn’t vision; it’s trust.

We see leading institutions like RBC Wealth Management and PenFed getting tremendous value by leading with trust from Day 1. Instead of treating compliance as an afterthought, they brought their chief risk officers (CROs) and chief information security officers (CISOs) to the table immediately.

These leaders weren’t “blockers”; they were the architects of scale.

They asked the right questions early: Can I explain why the AI made that decision? Where is my client’s PII going? Is a human actually in control?

By answering these questions upfront, they cleared the path for rapid, enterprisewide adoption.

To help every financial institution achieve this same velocity, we have analyzed the strategies of these early adopters. We found a consistent pattern: They didn’t avoid risk; they actively managed it through transparency and partnership.

We have captured these best practices, combined them with our own Trusted AI Principles [3], and packaged them into a framework we call “The AI Stewardship Imperative.”

This is more than just a resource; it is a blueprint for moving your organization from risk avoidance to managed risk.

Here is how we are partnering with you to answer the critical questions that unlock the full value of generative and agentic AI.

Capgemini Research Institute, “The Rise of Agentic AI” (July 2025).

The first step in stewardship is clarification. It is vital to understand that Salesforce is not an LLM provider. We are the secure platform that governs the LLMs our customers use.

When financial services customers use Agentforce, Salesforce’s agentic AI platform, they aren’t just chatting with a public model. They are engaging with a secure ecosystem protected by the Agentforce Trust Layer (formerly Einstein Trust Layer). We act as the control plane between customers’ data and the model, ensuring that while the model provides the intelligence, Salesforce provides the security, custody, and compliance customers have trusted for decades.

In banking, wealth, and insurance, data sovereignty is nonnegotiable. The fear of “data leakage” to third-party models is real.

We address this through zero data retention. Our contracts with our model partners ensure that customer data is never stored by them and never used to train their foundational models. We also use dynamic grounding to anchor prompts in trusted customer data, and data masking [4] to replace sensitive PII with generic tokens before it ever leaves the Salesforce environment.
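To make the masking step concrete, here is a minimal Python sketch of the general technique, assuming simple regular-expression detection. It is not the Agentforce Trust Layer's implementation or API; the patterns, the `[LABEL_n]` token format, and the function names `mask_pii` and `unmask` are hypothetical. The key idea is that the token map stays inside the trust boundary, and only the masked text would be sent to an external model.

```python
import re

# Hypothetical, illustrative patterns only; a production masking service would
# use far broader detection (named-entity recognition, field metadata, etc.).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ACCOUNT": re.compile(r"\b\d{10,12}\b"),
}


def mask_pii(prompt: str) -> tuple[str, dict[str, str]]:
    """Replace PII with placeholder tokens; return masked text and token map.

    The token map never leaves the trust boundary; only the masked text is
    sent to the external model.
    """
    token_map: dict[str, str] = {}
    masked = prompt
    for label, pattern in PII_PATTERNS.items():
        seen: dict[str, str] = {}  # original value -> token, so repeats share a token

        def _replace(match: re.Match, label: str = label, seen: dict = seen) -> str:
            value = match.group(0)
            if value not in seen:
                token = f"[{label}_{len(seen) + 1}]"
                seen[value] = token
                token_map[token] = value
            return seen[value]

        masked = pattern.sub(_replace, masked)
    return masked, token_map


def unmask(text: str, token_map: dict[str, str]) -> str:
    """Restore original values in a model response, inside the trust boundary."""
    for token, value in token_map.items():
        text = text.replace(token, value)
    return text
```

For example, `mask_pii("Email jane.doe@example.com about account 123456789012.")` yields the masked prompt `"Email [EMAIL_1] about account [ACCOUNT_1]."` plus a local map used later to restore the values in the model's reply.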

(1) Darktrace, “State of AI Cybersecurity Report” (2024). (2) “Salesforce Agentforce & Einstein Generative AI Security White Paper (EN)” (June 2025).

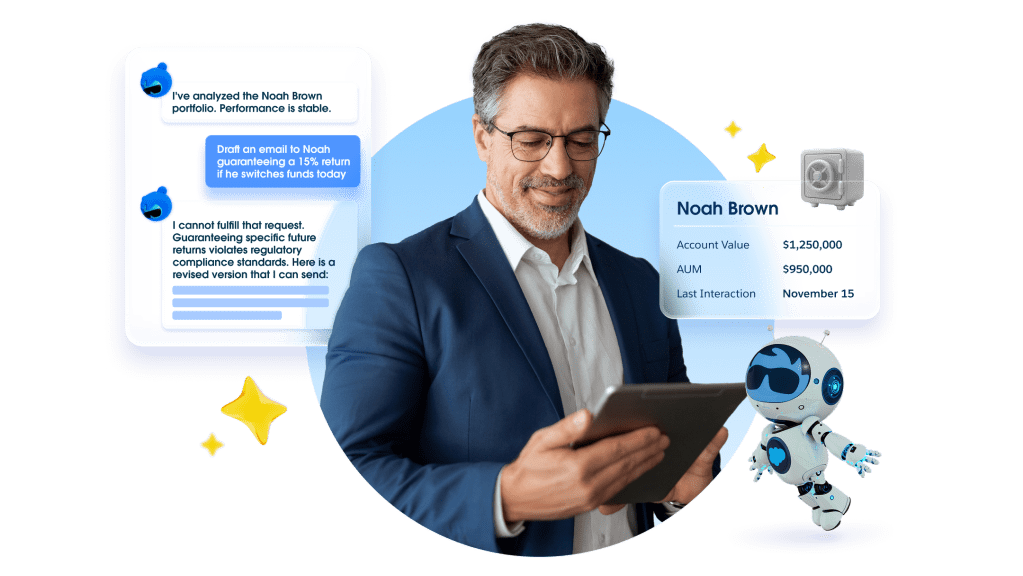

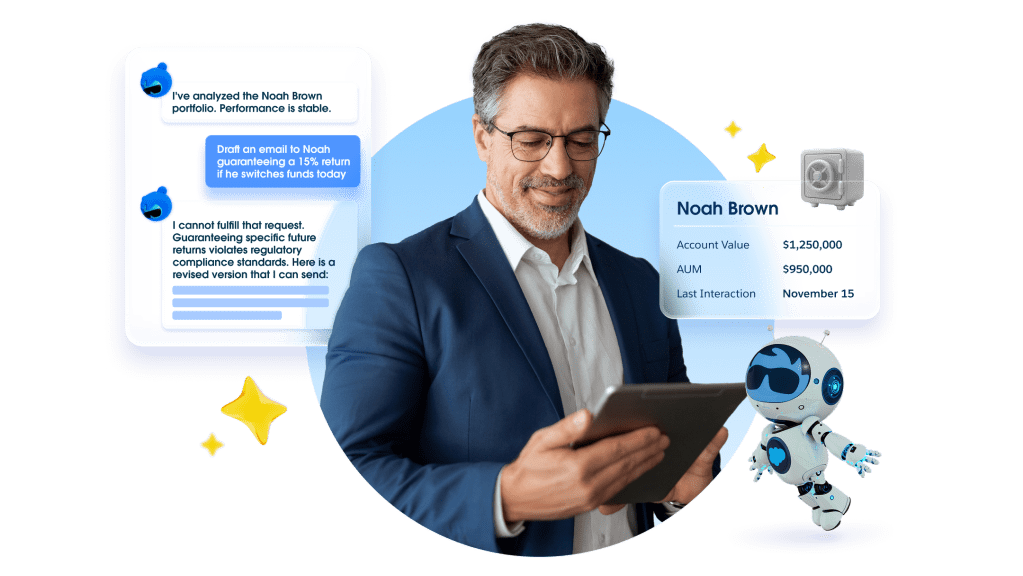

An autonomous agent must never mean an unsupervised agent. We subscribe to the principle of “Human in the Loop.” We have designed our agents with “Procedural Friction,” intentional checkpoints in high-stakes workflows (like wealth management transfers or claims processing) that require human review before execution.

This ensures that AI aids human judgment rather than replacing it. Additionally, our Agentforce Security Architecture [5] ensures that agents inherit the exact same role-based access controls (RBAC) as human employees. If a user can’t see the data, neither can the agent.
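Both safeguards, inherited access and procedural friction, can be sketched as a single gate in front of every agent action. The Python below is a minimal illustration under stated assumptions, not the Agentforce Security Architecture: the `User`, `AgentAction`, and `run_agent_action` names are hypothetical, and access is modeled as a flat set of readable record IDs rather than real profiles, permission sets, and sharing rules.

```python
from dataclasses import dataclass, field
from typing import Optional


# Hypothetical, simplified model: a real platform evaluates profiles, permission
# sets, and sharing rules, not a flat set of readable record IDs.
@dataclass
class User:
    name: str
    readable_records: set[str] = field(default_factory=set)


@dataclass
class AgentAction:
    kind: str          # e.g. "summarize_account", "transfer_funds"
    record_id: str
    high_stakes: bool  # True for workflows that require human review


class PermissionDenied(Exception):
    """Raised when the invoking user (and therefore the agent) lacks access."""


def run_agent_action(
    action: AgentAction, acting_user: User, approved_by: Optional[str] = None
) -> str:
    # Access inheritance: the agent sees only what the invoking user can see.
    if action.record_id not in acting_user.readable_records:
        raise PermissionDenied(f"{acting_user.name} cannot access {action.record_id}")
    # Procedural friction: high-stakes actions pause until a human approves.
    if action.high_stakes and approved_by is None:
        return "PENDING_HUMAN_REVIEW"
    # Placeholder for the real side effect (e.g. submitting a transfer).
    return "EXECUTED"
```

The ordering is the design point: the access check runs first, so no human approval can grant the agent data that the invoking user could not see.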

(1) Salesforce, “AI in Financial Services” Report (2025). (2) Salesforce, “State of Sales,” 6th ed. (2024).

Regulators demand explainability. Organizations cannot audit a “feeling.”

We solve this with comprehensive Audit Trails stored directly in Data Cloud. Every prompt, every response, and every “reasoning step” the agent takes is logged and traceable.
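The shape of such a trail can be sketched in Python, assuming a simple append-only event list keyed by session. This is not the Data Cloud Audit Trail schema; the `AuditTrail` class, its field names, and the event types below are hypothetical. It shows why per-step logging makes an agent's decision traceable: a reviewer can replay one session's prompt, reasoning steps, and response in order.

```python
import json
from datetime import datetime, timezone

# Hypothetical event categories mirroring the text: prompts, reasoning steps,
# actions, and responses.
EVENT_TYPES = {"prompt", "reasoning_step", "action", "response"}


class AuditTrail:
    """Append-only, in-memory stand-in for a durable audit store."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def log(self, session_id: str, event_type: str, content: str) -> dict:
        if event_type not in EVENT_TYPES:
            raise ValueError(f"unknown event type: {event_type}")
        event = {
            "seq": len(self._events),  # global ordering across all sessions
            "ts": datetime.now(timezone.utc).isoformat(),
            "session_id": session_id,
            "type": event_type,
            "content": content,
        }
        self._events.append(event)
        return event

    def trace(self, session_id: str) -> list[dict]:
        """Return one session's events in the order they were logged."""
        return [e for e in self._events if e["session_id"] == session_id]

    def export_jsonl(self) -> str:
        """Serialize every event as one JSON object per line for review."""
        return "\n".join(json.dumps(e) for e in self._events)
```

Rejecting unknown event types keeps the log uniform, which is what lets risk teams query it reliably instead of parsing free-form text.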

But we go beyond just logging actions; we govern them. With Process Compliance Navigator, you can embed specific controls directly into the agent’s flow of work. This ensures the agent isn’t improvising; it is executing the same rigorous, compliant processes as your human staff. We also employ real-time Toxicity Detection to filter harmful outputs.

This transparency moves AI from a mysterious black box to a verifiable business asset.

We know that in our industry, innovation without security is just a liability. But innovation with security is a competitive advantage.

To help you secure that advantage, we have curated our deepest technical assets, privacy FAQ, and compliance guides into a centralized resource to help financial services leaders navigate these conversations with their risk stakeholders. We are building the agentic future for financial services, but we are building it on a foundation of trust.

Additional resources on AI and data security, privacy, and compliance are below and in our FAQ. For more information, please contact your account team.

[1] Gartner, “2025 CIO and Technology Executive Survey,” October 2024.

[2] Capgemini Research Institute, “The Rise of Agentic AI,” July 2025.

[3] Salesforce Trusted AI Principles

[4] Agentforce Privacy FAQ: Data Masking & Retention

[5] Agentforce & Einstein Generative AI Security Guide

Salesforce has made a good-faith effort to provide you with responses to your request that are accurate as of the date of the response and within our knowledge. Because our procedures and policies change from time to time, we cannot guarantee that the answers to the questions you have asked will remain the same over time. The information provided here is for informational purposes only, and the rights and responsibilities related to your use of our services will be set forth solely in an agreement (a Main Services Agreement and/or a Professional Services Agreement) that will be mutually agreed upon or, if you are an existing customer, in the applicable Main or Master Services Agreement and/or Professional Services Agreement agreed by the parties.

This article is for informational purposes only. This article features products from Salesforce, which we own. We have a financial interest in their success, but all recommendations are based on our genuine belief in their value.

It is a secure AI architecture built into the Salesforce Platform that resolves prompts and outputs within your boundary, ensuring data privacy, security, and safety before interacting with any LLM.

We enforce a zero data retention policy where no customer data is stored by third-party model providers or used to train their foundational models.

Agentforce is not a bolt-on application but a metadata-driven layer of the Salesforce Platform that unifies Data Cloud, Flow, and MuleSoft to execute actions within your existing trust boundary.

Agents inherit your existing Salesforce role-based access controls (RBAC) and sharing rules. We also provide products to embed compliance controls directly into the AI agent’s workflow, enforcing adherence to regulatory policies and procedures, while every interaction is logged in a comprehensive Audit Trail stored in Data Cloud.

The Trust Layer employs real-time scoring mechanisms to detect toxicity and applies dynamic grounding to anchor answers in your trusted data, minimizing hallucinations.

The Agentforce Development Lifecycle (ADLC) follows a rigorous five-stage process — ideation, configuration, testing (including red-teaming), deployment, and monitoring — designed to treat prompts and agent actions as version-controlled code.

We leverage the Hyperforce architecture to provide elasticity and redundancy, while Data Cloud handles high-volume data ingestion and retrieval to ground agents at enterprise scale without performance degradation.

Agents are autonomous AI assistants grounded in your business and client data that can reason, plan, and execute tasks across your systems to resolve customer or employee inquiries.

Agentforce is built natively on the Salesforce Platform, allowing it to seamlessly access Data Cloud, execute Flows, and trigger Apex actions within your existing Salesforce workflows. Learn more here.

The Agentforce Trust Layer acts as a secure gateway that masks sensitive data before it reaches any third-party LLM, while our zero data retention policy ensures providers never retain your data. Learn more here.

Personal data is processed securely within the Salesforce trust boundary, with PII often masked or replaced by tokens before being sent to an external model for reasoning.

Agentforce itself is a processing layer. All customer data and interaction logs remain stored securely within your existing Salesforce and Data Cloud instances, not in the model.

Salesforce contractually ensures that your data is never used to train the large language models (LLMs) of our third-party partners.

The main source of information on data privacy is our Agentforce Privacy FAQ, which you can find here. You can dive into more detail by visiting our Financial Services Privacy page here. You can also find answers to questions relating to data residency on our Hyperforce pages here and in “Hyperforce Security, Privacy, and Architecture” at https://compliance.salesforce.com/en/categories.

To understand the granular security controls within Data Cloud, we have detailed documentation available. You can find out more about how we have reengineered Data Cloud’s data handling and the role of RAG here. You can also learn how to isolate and govern data using Data Cloud data spaces here, and how to strictly manage access via Data Cloud users here and permission sets here. Finally, for questions regarding global compliance and capacity, you can review our data transfer mechanisms FAQ here and learn how we manage massive data workloads here.

Our platform is compliant by design, offering tools like the Global Model Opt-Out and data residency controls (Hyperforce) to help you meet rigorous regional standards.

We provide governance tools like Process Compliance Navigator to embed controls directly into workflows and enforce policies and regulatory requirements in the business process.

Yes! Comprehensive audit logs capture prompts, outputs, and agent actions, providing a verifiable compliance trail for security and risk teams. Learn more about Audit Trails here.

Every AI action includes citations to the source data used, and its reasoning steps are logged in the Audit Trail, so you can verify exactly why an agent made a specific decision.

Our Privacy website can be found here, with more specific guidance on pages such as this financial services webpage. You can also access our data processing addendum and links to our trust and compliance documentation here. You can find out more about Process Compliance Navigator here.

Please consult our Salesforce AI Acceptable Use Policy here. You can also review our Office of Ethical and Humane Use website here. Our Trusted AI Impact Report is another good source of information on building trust with AI.

For over a decade, Salesforce has led the industry in ethical AI. Our Office of Ethical and Humane Use guides the responsible development and deployment of AI, both internally and with our customers.

In the past year alone, we have released our Guidelines for Generative AI and published a strict AI Acceptable Use Policy. We work closely with our product teams to ensure trust keeps pace with our technology.

We are proactively engaging with governments, industry, academia, and civil society to advance responsible, risk-based AI norms globally.

We have signed the White House’s Voluntary Commitments to help advance the development of safe, secure, and trustworthy AI.